Practice Free SAA-C03 Exam Online Questions

A company wants to deploy its containerized application workloads to a VPC across three Availability Zones. The company needs a solution that is highly available across Availability Zones. The solution must require minimal changes to the application.

Which solution will meet these requirements with the LEAST operational overhead?

- A . Use Amazon Elastic Container Service (Amazon ECS). Configure Amazon ECS Service Auto Scaling to use target tracking scaling. Set the minimum capacity to 3. Set the task placement strategy type to spread with an Availability Zone attribute.

- B . Use Amazon Elastic Kubernetes Service (Amazon EKS) self-managed nodes. Configure Application Auto Scaling to use target tracking scaling. Set the minimum capacity to 3.

- C . Use Amazon EC2 Reserved Instances. Launch three EC2 instances in a spread placement group. Configure an Auto Scaling group to use target tracking scaling. Set the minimum capacity to 3.

- D . Use an AWS Lambda function. Configure the Lambda function to connect to a VPC. Configure Application Auto Scaling to use Lambda as a scalable target. Set the minimum capacity to 3.

A

Explanation:

The company wants to deploy its containerized application workloads to a VPC across three Availability Zones, with high availability and minimal changes to the application.

The solution that will meet these requirements with the least operational overhead is:

Use Amazon Elastic Container Service (Amazon ECS). Amazon ECS is a fully managed container orchestration service that allows you to run and scale containerized applications on AWS. Amazon ECS eliminates the need for you to install, operate, and scale your own cluster management infrastructure. Amazon ECS also integrates with other AWS services, such as VPC, ELB, CloudFormation, CloudWatch, IAM, and more.

Configure Amazon ECS Service Auto Scaling to use target tracking scaling. Amazon ECS Service Auto Scaling allows you to automatically adjust the number of tasks in your service based on the demand or custom metrics. Target tracking scaling is a policy type that adjusts the number of tasks in your service to keep a specified metric at a target value. For example, you can use target tracking scaling to maintain a target CPU utilization or request count per task for your service.

Set the minimum capacity to 3. This ensures that your service always has at least three tasks running across three Availability Zones, providing high availability and fault tolerance for your application.

Set the task placement strategy type to spread with an Availability Zone attribute. This ensures that your tasks are evenly distributed across the Availability Zones in your cluster, maximizing the availability of your service.

This solution will provide high availability across Availability Zones, require minimal changes to the application, and reduce the operational overhead of managing your own cluster infrastructure.

Reference: Amazon Elastic Container Service

Amazon ECS Service Auto Scaling

Target Tracking Scaling Policies for Amazon ECS Services

Amazon ECS Task Placement Strategies
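The settings named in option A can be sketched as request parameters. This is a minimal sketch that only builds plain dictionaries and makes no AWS calls; the cluster name, service name, policy name, maximum capacity, and the 50% CPU target are illustrative assumptions, not values from the question.

```python
def ecs_spread_service_config(cluster: str, service: str,
                              min_tasks: int = 3, max_tasks: int = 9) -> dict:
    """Build the parameters for option A as plain dicts (no AWS calls)."""
    resource_id = f"service/{cluster}/{service}"
    return {
        # Would be passed as placementStrategy when creating the ECS service.
        "placement_strategy": [
            {"type": "spread", "field": "attribute:ecs.availability-zone"}
        ],
        # Would be passed to Application Auto Scaling register_scalable_target.
        "scalable_target": {
            "ServiceNamespace": "ecs",
            "ResourceId": resource_id,
            "ScalableDimension": "ecs:service:DesiredCount",
            "MinCapacity": min_tasks,
            "MaxCapacity": max_tasks,  # assumed upper bound
        },
        # Would be passed to Application Auto Scaling put_scaling_policy.
        "scaling_policy": {
            "PolicyName": "cpu-target-tracking",  # hypothetical name
            "ServiceNamespace": "ecs",
            "ResourceId": resource_id,
            "ScalableDimension": "ecs:service:DesiredCount",
            "PolicyType": "TargetTrackingScaling",
            "TargetTrackingScalingPolicyConfiguration": {
                "TargetValue": 50.0,  # assumed CPU utilization target
                "PredefinedMetricSpecification": {
                    "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
                },
            },
        },
    }


config = ecs_spread_service_config("demo-cluster", "web")
```

With a minimum of three tasks and a spread strategy on the Availability Zone attribute, the scheduler aims to keep at least one task in each of the three zones.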

A company is developing an application to support customer demands. The company wants to deploy the application on multiple Amazon EC2 Nitro-based instances within the same Availability Zone. The company also wants to give the application the ability to write to multiple block storage volumes in multiple EC2 Nitro-based instances simultaneously to achieve higher application availability.

Which solution will meet these requirements?

- A . Use General Purpose SSD (gp3) EBS volumes with Amazon Elastic Block Store (Amazon EBS) Multi-Attach.

- B . Use Throughput Optimized HDD (st1) EBS volumes with Amazon Elastic Block Store (Amazon EBS) Multi-Attach.

- C . Use Provisioned IOPS SSD (io2) EBS volumes with Amazon Elastic Block Store (Amazon EBS) Multi-Attach.

- D . Use General Purpose SSD (gp2) EBS volumes with Amazon Elastic Block Store (Amazon EBS) Multi-Attach.

C

Explanation:

Understanding the Requirement: The application needs to write to multiple block storage volumes in multiple EC2 Nitro-based instances simultaneously to achieve higher availability.

Analysis of Options:

General Purpose SSD (gp3) with Multi-Attach: Not possible. gp3 volumes do not support Multi-Attach.

Throughput Optimized HDD (st1) with Multi-Attach: Not possible. HDD-backed volumes do not support Multi-Attach and are not suited to low-latency workloads.

Provisioned IOPS SSD (io2) with Multi-Attach: Supported. Multi-Attach is available only on Provisioned IOPS SSD (io1 and io2) volumes, which can be attached to multiple Nitro-based instances in the same Availability Zone.

General Purpose SSD (gp2) with Multi-Attach: Not possible. gp2 volumes do not support Multi-Attach.

Best Solution:

Provisioned IOPS SSD (io2) with Multi-Attach: This solution ensures the highest performance and availability for the application by allowing multiple EC2 instances to attach to and write to the same EBS volume simultaneously.

Reference: Amazon EBS Multi-Attach

Provisioned IOPS SSD (io2)
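The elimination step for this question can be written as a small check. This is a sketch under the assumption that Multi-Attach is limited to Provisioned IOPS SSD (io1 and io2) volumes; the function and constant names are made up for illustration.

```python
# Volume types assumed to support EBS Multi-Attach (Provisioned IOPS SSD only).
MULTI_ATTACH_VOLUME_TYPES = frozenset({"io1", "io2"})


def supports_multi_attach(volume_type: str) -> bool:
    """Return True if the EBS volume type can be used with Multi-Attach."""
    return volume_type.lower() in MULTI_ATTACH_VOLUME_TYPES


# Volume type offered by each answer option in the question.
options = {"A": "gp3", "B": "st1", "C": "io2", "D": "gp2"}
valid_options = [letter for letter, vtype in options.items()
                 if supports_multi_attach(vtype)]
print(valid_options)  # ['C']
```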

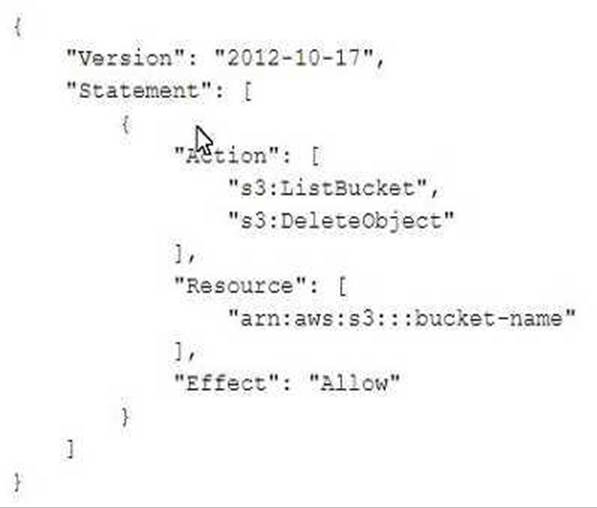

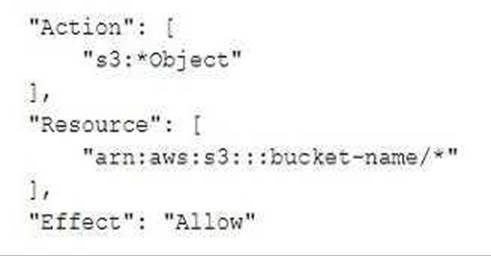

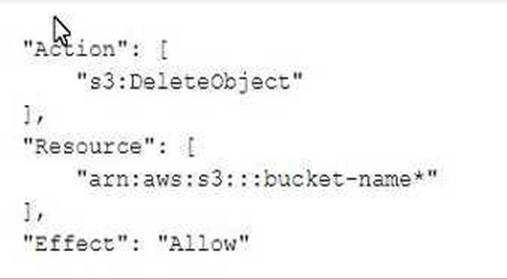

A group requires permissions to list an Amazon S3 bucket and delete objects from that bucket. An administrator has created the following IAM policy to provide access to the bucket and applied that policy to the group. The group is not able to delete objects in the bucket. The company follows least-privilege access rules.

A)

B)

C)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

D

Explanation:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "s3:ListBucket",
        "s3:DeleteObject"
      ],
      "Resource": [
        "arn:aws:s3:::<bucket-name>"
      ],
      "Effect": "Allow"
    },
    {
      "Action": "s3:*DeleteObject",
      "Resource": [
        "arn:aws:s3:::<bucket-name>/*"
      ],
      "Effect": "Allow"
    }
  ]
}

Note: the second statement is the clause added to match the solution example (Q248.1). It grants the delete action on the objects in the bucket (`<bucket-name>/*`) rather than on the bucket itself.
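Why the original policy cannot delete objects can be shown with a toy evaluator. This is a simplified sketch, not how IAM is implemented: it handles only `Allow` statements with wildcard matching and ignores `Deny`, conditions, and case-insensitive action names. The bucket name `example-bucket` and the object key are assumptions.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return value if isinstance(value, list) else [value]


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Return True if any Allow statement matches both action and resource."""
    for stmt in policy["Statement"]:
        if stmt["Effect"] != "Allow":
            continue
        action_ok = any(fnmatchcase(action, a) for a in _as_list(stmt["Action"]))
        resource_ok = any(fnmatchcase(resource, r) for r in _as_list(stmt["Resource"]))
        if action_ok and resource_ok:
            return True
    return False


bucket_arn = "arn:aws:s3:::example-bucket"
object_arn = bucket_arn + "/reports/2024.csv"

bucket_statement = {
    "Action": ["s3:ListBucket", "s3:DeleteObject"],
    "Resource": [bucket_arn],
    "Effect": "Allow",
}
object_statement = {
    "Action": "s3:*DeleteObject",
    "Resource": [bucket_arn + "/*"],
    "Effect": "Allow",
}

original = {"Version": "2012-10-17", "Statement": [bucket_statement]}
corrected = {"Version": "2012-10-17", "Statement": [bucket_statement, object_statement]}

print(is_allowed(original, "s3:DeleteObject", object_arn))   # False
print(is_allowed(corrected, "s3:DeleteObject", object_arn))  # True
```

The bucket ARN never matches an object ARN, so `s3:DeleteObject` only takes effect once a statement targets the `/*` object resource.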

How can DynamoDB data be made available for long-term analytics with minimal operational overhead?

- A . Configure DynamoDB incremental exports to S3.

- B . Configure DynamoDB Streams to write records to S3.

- C . Configure EMR to copy DynamoDB data to S3.

- D . Configure EMR to copy DynamoDB data to HDFS.

A

Explanation:

Option A is the most automated and cost-efficient solution for exporting data to S3 for analytics.

Option B involves manual setup of Streams to S3.

Options C and D introduce complexity with EMR.
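Option A can be sketched as the request for a DynamoDB export to S3. This sketch only builds the parameter dictionary for the `ExportTableToPointInTime` API and makes no AWS calls; the table ARN, bucket name, and 24-hour window are illustrative assumptions, and the export feature requires point-in-time recovery to be enabled on the table.

```python
from datetime import datetime, timedelta, timezone


def incremental_export_request(table_arn: str, bucket: str,
                               window_end: datetime, hours: int = 24) -> dict:
    """Build parameters for an incremental DynamoDB export to S3."""
    return {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        "ExportFormat": "DYNAMODB_JSON",
        "ExportType": "INCREMENTAL_EXPORT",
        "IncrementalExportSpecification": {
            # Export only the changes made inside this time window.
            "ExportFromTime": window_end - timedelta(hours=hours),
            "ExportToTime": window_end,
            "ExportViewType": "NEW_AND_OLD_IMAGES",
        },
    }


request = incremental_export_request(
    "arn:aws:dynamodb:us-east-1:111122223333:table/Orders",  # hypothetical table
    "example-analytics-bucket",                               # hypothetical bucket
    datetime(2024, 1, 2, tzinfo=timezone.utc),
)
```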

A company wants to move its application to a serverless solution. The serverless solution needs to analyze existing and new data by using SQL. The company stores the data in an Amazon S3 bucket. The data requires encryption and must be replicated to a different AWS Region.

Which solution will meet these requirements with the LEAST operational overhead?

- A . Create a new S3 bucket. Load the data into the new S3 bucket. Use S3 Cross-Region Replication (CRR) to replicate encrypted objects to an S3 bucket in another Region. Use server-side encryption with AWS KMS multi-Region keys (SSE-KMS). Use Amazon Athena to query the data.

- B . Create a new S3 bucket. Load the data into the new S3 bucket. Use S3 Cross-Region Replication (CRR) to replicate encrypted objects to an S3 bucket in another Region. Use server-side encryption with AWS KMS multi-Region keys (SSE-KMS). Use Amazon RDS to query the data.

- C . Load the data into the existing S3 bucket. Use S3 Cross-Region Replication (CRR) to replicate encrypted objects to an S3 bucket in another Region. Use server-side encryption with Amazon S3 managed encryption keys (SSE-S3). Use Amazon Athena to query the data.

- D . Load the data into the existing S3 bucket. Use S3 Cross-Region Replication (CRR) to replicate encrypted objects to an S3 bucket in another Region. Use server-side encryption with Amazon S3 managed encryption keys (SSE-S3). Use Amazon RDS to query the data.

A

Explanation:

This solution meets the requirements of a serverless solution, encryption, replication, and SQL analysis with the least operational overhead. Amazon Athena is a serverless interactive query service that can analyze data in S3 using standard SQL. S3 Cross-Region Replication (CRR) can replicate encrypted objects to an S3 bucket in another Region automatically. Server-side encryption with AWS KMS multi-Region keys (SSE-KMS) can encrypt the data at rest using keys that are replicated across multiple Regions. Creating a new S3 bucket can avoid potential conflicts with existing data or configurations.

Option B is incorrect because Amazon RDS is not a serverless solution and it cannot query data in S3 directly.

Option C is incorrect because server-side encryption with Amazon S3 managed encryption keys (SSE-S3) does not use AWS KMS keys, so it does not provide the multi-Region KMS keys that option A relies on.

Option D is incorrect because Amazon RDS is not a serverless solution and it cannot query data in S3 directly. It is also incorrect for the same reason as option C.

Reference: https://docs.aws.amazon.com/AmazonS3/latest/userguide/replication-walkthrough-4.html

https://aws.amazon.com/blogs/storage/considering-four-different-replication-options-for-data-in-amazon-s3/

https://docs.aws.amazon.com/AmazonS3/latest/userguide/UsingEncryption.html

https://aws.amazon.com/athena/
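The replication part of option A can be sketched as an S3 replication configuration. This sketch only builds the dictionary that would be supplied as the bucket's replication configuration and makes no AWS calls; the role ARN, bucket ARN, KMS key ARN, and rule ID are hypothetical placeholders.

```python
def crr_configuration(role_arn: str, dest_bucket_arn: str,
                      replica_kms_key_arn: str) -> dict:
    """Build a replication configuration that also replicates SSE-KMS objects."""
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "replicate-encrypted-objects",  # hypothetical rule name
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {},  # empty filter: the rule applies to all objects
                "DeleteMarkerReplication": {"Status": "Disabled"},
                # Opt in to replicating objects encrypted with KMS keys.
                "SourceSelectionCriteria": {
                    "SseKmsEncryptedObjects": {"Status": "Enabled"}
                },
                "Destination": {
                    "Bucket": dest_bucket_arn,
                    # Key used to encrypt replicas in the destination Region.
                    "EncryptionConfiguration": {
                        "ReplicaKmsKeyID": replica_kms_key_arn
                    },
                },
            }
        ],
    }


replication = crr_configuration(
    "arn:aws:iam::111122223333:role/example-replication-role",
    "arn:aws:s3:::example-replica-bucket",
    "arn:aws:kms:eu-west-1:111122223333:key/mrk-example",
)
```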

A company stores data in Amazon S3. According to regulations, the data must not contain personally identifiable information (PII). The company recently discovered that S3 buckets have some objects that contain PII. The company needs to automatically detect PII in S3 buckets and to notify the company’s security team.

Which solution will meet these requirements?

- A . Use Amazon Macie. Create an Amazon EventBridge rule to filter the SensitiveData event type from Macie findings and to send an Amazon Simple Notification Service (Amazon SNS) notification to the security team.

- B . Use Amazon GuardDuty. Create an Amazon EventBridge rule to filter the CRITICAL event type from GuardDuty findings and to send an Amazon Simple Notification Service (Amazon SNS) notification to the security team.

- C . Use Amazon Macie. Create an Amazon EventBridge rule to filter the SensitiveData:S3Object/Personal event type from Macie findings and to send an Amazon Simple Queue Service (Amazon SQS) notification to the security team.

- D . Use Amazon GuardDuty. Create an Amazon EventBridge rule to filter the CRITICAL event type from GuardDuty findings and to send an Amazon Simple Queue Service (Amazon SQS) notification to the security team.

A

Explanation:

Amazon Macie: Detects sensitive data such as PII in S3 buckets using machine learning.

EventBridge Rule: Filters Macie findings for specific sensitive data events (e.g., SensitiveData).

SNS Notification: Provides real-time alerts to the security team for immediate action.

Reference: Amazon Macie Documentation, Amazon EventBridge Documentation
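The EventBridge filter described above can be sketched as an event pattern. This is a minimal sketch: the pattern uses a prefix match on the finding type, and the `matches` helper is a hand-written approximation for this one pattern, not EventBridge's own matching engine. The sample events are made up.

```python
import json

# Event pattern for Macie findings whose type starts with "SensitiveData".
MACIE_SENSITIVE_DATA_PATTERN = {
    "source": ["aws.macie"],
    "detail-type": ["Macie Finding"],
    "detail": {"type": [{"prefix": "SensitiveData"}]},
}


def matches(event: dict) -> bool:
    """Approximate EventBridge matching for the pattern above only."""
    finding_type = event.get("detail", {}).get("type", "")
    return (
        event.get("source") in MACIE_SENSITIVE_DATA_PATTERN["source"]
        and event.get("detail-type") in MACIE_SENSITIVE_DATA_PATTERN["detail-type"]
        and finding_type.startswith("SensitiveData")
    )


pii_event = {
    "source": "aws.macie",
    "detail-type": "Macie Finding",
    "detail": {"type": "SensitiveData:S3Object/Personal"},
}
policy_event = {
    "source": "aws.macie",
    "detail-type": "Macie Finding",
    "detail": {"type": "Policy:IAMUser/S3BucketPublic"},
}

# The JSON form is what would be stored as the rule's event pattern.
pattern_json = json.dumps(MACIE_SENSITIVE_DATA_PATTERN)
```

A rule with this pattern would target an SNS topic that the security team subscribes to.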

A media company has an ecommerce website to sell music. Each music file is stored as an MP3 file. Premium users of the website purchase music files and download the files. The company wants to store music files on AWS. The company wants to provide access only to the premium users. The company wants to use the same URL for all premium users.

Which solution will meet these requirements?

- A . Store the MP3 files on a set of Amazon EC2 instances that have Amazon Elastic Block Store (Amazon EBS) volumes attached. Manage access to the files by creating an IAM user and an IAM policy for each premium user.

- B . Store all the MP3 files in an Amazon S3 bucket. Create a presigned URL for each MP3 file. Share the presigned URLs with the premium users.

- C . Store all the MP3 files in an Amazon S3 bucket. Create an Amazon CloudFront distribution that uses the S3 bucket as the origin. Generate CloudFront signed cookies for the music files. Share the signed cookies with the premium users.

- D . Store all the MP3 files in an Amazon S3 bucket. Create an Amazon CloudFront distribution that uses the S3 bucket as the origin. Use a CloudFront signed URL for each music file. Share the signed URLs with the premium users.

C

Explanation:

Using CloudFront with signed cookies allows access control to the files based on the user’s credentials while providing a consistent URL for all users. This approach ensures high performance and scalability while keeping access restricted to authorized users. Using signed URLs (D) would require individual URLs for each file, which contradicts the requirement to use the same URL.

Reference: CloudFront Signed Cookies

Amazon S3 and CloudFront Integration
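The signed-cookie approach rests on a policy document that covers many files at once. The sketch below builds a CloudFront custom policy with a wildcard resource and applies CloudFront's URL-safe base64 variant; it deliberately omits the RSA signing step that produces the signature cookie. The distribution domain and expiry time are hypothetical.

```python
import base64
import json


def cloudfront_custom_policy(resource_url: str, expires_epoch: int) -> str:
    """Return a compact custom policy JSON string for signed cookies."""
    policy = {
        "Statement": [
            {
                "Resource": resource_url,
                "Condition": {"DateLessThan": {"AWS:EpochTime": expires_epoch}},
            }
        ]
    }
    return json.dumps(policy, separators=(",", ":"))


def cloudfront_safe_b64(data: bytes) -> str:
    """Base64-encode and swap the characters CloudFront treats as unsafe."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


policy_json = cloudfront_custom_policy(
    "https://d111111abcdef8.cloudfront.net/music/*",  # hypothetical distribution
    1767225600,                                        # assumed expiry (epoch seconds)
)
policy_cookie_value = cloudfront_safe_b64(policy_json.encode("utf-8"))
```

The encoded policy would be sent as the `CloudFront-Policy` cookie alongside `CloudFront-Signature` and `CloudFront-Key-Pair-Id`, so every premium user requests the same file URLs.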

A company wants to measure the effectiveness of its recent marketing campaigns. The company performs batch processing on csv files of sales data and stores the results in an Amazon S3 bucket once every hour. The S3 bucket contains petabytes of objects. The company runs one-time queries in Amazon Athena to determine which products are most popular on a particular date for a particular region. Queries sometimes fail or take longer than expected to finish.

Which actions should a solutions architect take to improve the query performance and reliability? (Select TWO.)

- A . Reduce the S3 object sizes to less than 126 MB

- B . Partition the data by date and region in Amazon S3.

- C . Store the files as large, single objects in Amazon S3.

- D . Use Amazon Kinesis Data Analytics to run the queries as part of the batch processing operation.

- E . Use an AWS Glue extract, transform, and load (ETL) process to convert the csv files into Apache Parquet format.

B, E

Explanation:

https://aws.amazon.com/blogs/big-data/top-10-performance-tuning-tips-for-amazon-athena/

Partitioning the data by date and region lets Athena read only the partitions that match a query's date and region filters instead of scanning the whole bucket. An AWS Glue ETL job can extract the csv data from S3, transform it into Apache Parquet, and load it back into S3. Apache Parquet is a columnar storage format that improves Athena query performance and reliability by reducing the amount of data scanned, improving the compression ratio, and enabling predicate pushdown.
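The partitioning in option B is usually expressed through the S3 key layout. A minimal sketch of a Hive-style key builder follows; the `sales` prefix, partition column names, and file name are assumptions chosen for illustration.

```python
from datetime import date


def partitioned_key(prefix: str, sale_date: date, region: str, filename: str) -> str:
    """Build a Hive-style S3 key partitioned by date and then region."""
    return f"{prefix}/date={sale_date.isoformat()}/region={region}/{filename}"


key = partitioned_key("sales", date(2024, 1, 15), "us-east-1", "part-0000.parquet")
print(key)  # sales/date=2024-01-15/region=us-east-1/part-0000.parquet
```

A query that filters on one date and one region then only needs the objects under that single prefix.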

A company has launched an Amazon RDS for MySQL DB instance. Most of the connections to the database come from serverless applications. Application traffic to the database changes significantly at random intervals. At times of high demand, users report that their applications experience database connection rejection errors.

Which solution will resolve this issue with the LEAST operational overhead?

- A . Create a proxy in RDS Proxy. Configure the users’ applications to use the DB instance through RDS Proxy.

- B . Deploy Amazon ElastiCache for Memcached between the users’ application and the DB instance.

- C . Migrate the DB instance to a different instance class that has higher I/O capacity. Configure the users’ applications to use the new DB instance.

- D . Configure Multi-AZ for the DB instance. Configure the users’ application to switch between the DB instances.

A

Explanation:

Many applications, including those built on modern serverless architectures, can have a large number of open connections to the database server and may open and close database connections at a high rate, exhausting database memory and compute resources. Amazon RDS Proxy allows applications to pool and share connections established with the database, improving database efficiency and application scalability. (https://aws.amazon.com/pt/rds/proxy/)

A company is planning to migrate an on-premises online transaction processing (OLTP) database that uses MySQL to an AWS managed database management system. Several reporting and analytics applications use the on-premises database heavily on weekends and at the end of each month. The cloud-based solution must be able to handle read-heavy surges during weekends and at the end of each month.

Which solution will meet these requirements?

- A . Migrate the database to an Amazon Aurora MySQL cluster. Configure Aurora Auto Scaling to use replicas to handle surges.

- B . Migrate the database to an Amazon EC2 instance that runs MySQL. Use an EC2 instance type that has ephemeral storage. Attach Amazon EBS Provisioned IOPS SSD (io2) volumes to the instance.

- C . Migrate the database to an Amazon RDS for MySQL database. Configure the RDS for MySQL database for a Multi-AZ deployment, and set up auto scaling.

- D . Migrate the database to Amazon Redshift. Use Amazon Redshift as the database for both OLTP and analytics applications.

A

Explanation: