Practice Free Professional Data Engineer Exam Online Questions

If you want to create a machine learning model that predicts the price of a particular stock based on its recent price history, what type of estimator should you use?

- A . Unsupervised learning

- B . Regressor

- C . Classifier

- D . Clustering estimator

B

Explanation:

Regression is the supervised learning task for modeling and predicting continuous, numeric variables. Examples include predicting real-estate prices, stock price movements, or student test scores.

Classification is the supervised learning task for modeling and predicting categorical variables. Examples include predicting employee churn, email spam, financial fraud, or student letter grades.

Clustering is an unsupervised learning task for finding natural groupings of observations (i.e. clusters) based on the inherent structure within your dataset. Examples include customer segmentation, grouping similar items in e-commerce, and social network analysis.

Reference: https://elitedatascience.com/machine-learning-algorithms
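To make the regression/classification distinction concrete, here is a minimal pure-Python sketch. The function names are hypothetical. It fits an ordinary least-squares trend line to recent prices and extrapolates one step ahead. Real stock forecasting would use far richer features; the point is only that a regressor returns a continuous number rather than a class label.

```python
from statistics import mean


def fit_trend(prices: list[float]) -> tuple[float, float]:
    """Ordinary least-squares line through (day_index, price) pairs.

    Needs at least two prices so the day indices have non-zero spread.
    """
    xs = list(range(len(prices)))
    x_bar, y_bar = mean(xs), mean(prices)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, prices))
    den = sum((x - x_bar) ** 2 for x in xs)
    slope = num / den
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict_next_price(prices: list[float]) -> float:
    """Regression output: a continuous value for the next day's price."""
    slope, intercept = fit_trend(prices)
    # The next day has index len(prices) on the same axis used for fitting.
    return intercept + slope * len(prices)
```

For example, `predict_next_price([10.0, 11.0, 12.0, 13.0])` returns `14.0`. A classifier, by contrast, would answer a categorical question such as "up or down?".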

You work for a large real estate firm and are preparing 6 TB of home sales data to be used for machine learning. You will use SQL to transform the data and use BigQuery ML to create a machine learning model. You plan to use the model for predictions against a raw dataset that has not been transformed.

How should you set up your workflow in order to prevent skew at prediction time?

- A . When creating your model, use BigQuery’s TRANSFORM clause to define preprocessing steps. At prediction time, use BigQuery’s ML.EVALUATE clause without specifying any transformations on the raw input data.

- B . When creating your model, use BigQuery’s TRANSFORM clause to define preprocessing steps. Before requesting predictions, use a saved query to transform your raw input data, and then use ML.EVALUATE.

- C . Use a BigQuery view to define your preprocessing logic. When creating your model, use the view as your model training data. At prediction time, use BigQuery’s ML.EVALUATE clause without specifying any transformations on the raw input data.

- D . Preprocess all data using Dataflow. At prediction time, use BigQuery’s ML.EVALUATE clause without specifying any further transformations on the input data.

A

Explanation:

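Using the TRANSFORM clause, you can specify all preprocessing during model creation. The preprocessing is automatically applied during the prediction and evaluation phases of machine learning, so raw input data can be passed to ML.EVALUATE unchanged and the same transformations are used at training and prediction time, which prevents skew.

Reference: https://cloud.google.com/bigquery-ml/docs/bigqueryml-transform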
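Why bundling preprocessing with the model prevents skew can be illustrated with a toy pure-Python analogue. This is not the BigQuery ML API. The class name `ModelWithTransform` and the square-footage feature are hypothetical. The standardization statistics are learned once at training time, stored with the model, and reapplied automatically when raw rows are scored, much as the TRANSFORM clause is reused at evaluation and prediction time.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class ModelWithTransform:
    """Toy analogue of a model created with a TRANSFORM clause (hypothetical).

    Preprocessing parameters live inside the model, so callers never
    re-implement the transformation when they score raw data.
    """

    mu: float = 0.0
    sigma: float = 1.0
    slope: float = 0.0
    intercept: float = 0.0

    def _transform(self, sqft: float) -> float:
        # Standardize a raw feature with the statistics captured during fit().
        return (sqft - self.mu) / self.sigma

    def fit(self, raw_sqft: list[float], prices: list[float]) -> "ModelWithTransform":
        # Learn the preprocessing parameters from the raw training data.
        self.mu = mean(raw_sqft)
        self.sigma = pstdev(raw_sqft) or 1.0
        xs = [self._transform(x) for x in raw_sqft]
        # Ordinary least squares on the transformed feature
        # (needs at least two distinct feature values).
        x_bar, y_bar = mean(xs), mean(prices)
        num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, prices))
        den = sum((x - x_bar) ** 2 for x in xs)
        self.slope = num / den
        self.intercept = y_bar - self.slope * x_bar
        return self

    def predict(self, raw_sqft: list[float]) -> list[float]:
        # Callers pass untransformed rows; the stored transform is applied here.
        return [self.intercept + self.slope * self._transform(x) for x in raw_sqft]
```

Training on `[1000, 2000, 3000]` with prices `[100, 200, 300]` and then calling `predict([4000])` yields approximately `400`. Passing raw rows to a model that expected pre-standardized inputs, without repeating the preprocessing, would reproduce the training/serving skew that option A avoids.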

You want to store your team’s shared tables in a single dataset to make data easily accessible to various analysts. You want to make this data readable but unmodifiable by analysts. At the same time, you want to provide the analysts with individual workspaces in the same project, where they can create and store tables for their own use, without the tables being accessible by other analysts.

What should you do?

- A . Give analysts the BigQuery Data Viewer role at the project level. Create one other dataset, and give the analysts the BigQuery Data Editor role on that dataset.

- B . Give analysts the BigQuery Data Viewer role at the project level. Create a dataset for each analyst, and give each analyst the BigQuery Data Editor role at the project level.

- C . Give analysts the BigQuery Data Viewer role on the shared dataset. Create a dataset for each analyst, and give each analyst the BigQuery Data Editor role at the dataset level for their assigned dataset.

- D . Give analysts the BigQuery Data Viewer role on the shared dataset. Create one other dataset, and give the analysts the BigQuery Data Editor role on that dataset.

C

Explanation:

The BigQuery Data Viewer role allows users to read data and metadata from tables and views, but not to modify or delete them. By giving analysts this role on the shared dataset, you can ensure that they can access the data for analysis, but not change it. The BigQuery Data Editor role allows users to create, update, and delete tables and views, as well as read and write data. By giving analysts this role at the dataset level for their assigned dataset, you can provide them with individual workspaces where they can store their own tables and views, without affecting the shared dataset or other analysts’ datasets. This way, you can achieve both data protection and data isolation for your team.

Reference: BigQuery IAM roles and permissions

Basic roles and permissions
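The reasoning for option C can be checked with a small simulation. This is a simplified model, not the IAM API. The two role IDs are real predefined BigQuery roles, but reducing them to "read" and "write" actions is a simplification, and the dataset and analyst names are hypothetical. Grants made at the project level are treated as inherited by every dataset.

```python
# Simplified model: each predefined role maps to the actions it allows.
ROLE_ACTIONS = {
    "roles/bigquery.dataViewer": {"read"},
    "roles/bigquery.dataEditor": {"read", "write"},
}

# Dataset-level bindings for option C (dataset and analyst names are hypothetical).
DATASET_BINDINGS = {
    "team_shared": {"roles/bigquery.dataViewer": {"alice", "bob"}},
    "ws_alice": {"roles/bigquery.dataEditor": {"alice"}},
    "ws_bob": {"roles/bigquery.dataEditor": {"bob"}},
}


def allowed(member: str, dataset: str, action: str, project_bindings=None) -> bool:
    """True if a role bound on the dataset, or inherited from the project,
    grants `action` to `member`."""
    scopes = [DATASET_BINDINGS.get(dataset, {}), project_bindings or {}]
    return any(
        member in members and action in ROLE_ACTIONS[role]
        for scope in scopes
        for role, members in scope.items()
    )
```

With dataset-level grants only, `alice` can read `team_shared` but not write to it, can write to `ws_alice`, and cannot read `ws_bob`. If `alice` is given Data Editor at the project level instead, as in option B, `allowed("alice", "team_shared", "write", {"roles/bigquery.dataEditor": {"alice"}})` becomes true. The project-level grant is inherited by the shared dataset as well.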

You want to use Google Stackdriver Logging to monitor Google BigQuery usage. You need an instant notification to be sent to your monitoring tool when new data is appended to a certain table using an insert job, but you do not want to receive notifications for other tables.

What should you do?

- A . Make a call to the Stackdriver API to list all logs, and apply an advanced filter.

- B . In the Stackdriver logging admin interface, enable a log sink export to BigQuery.

- C . In the Stackdriver logging admin interface, enable a log sink export to Google Cloud Pub/Sub, and subscribe to the topic from your monitoring tool.

- D . Using the Stackdriver API, create a project sink with advanced log filter to export to Pub/Sub, and subscribe to the topic from your monitoring tool.

You are building an application to share financial market data with consumers, who will receive data feeds. Data is collected from the markets in real time.

Consumers will receive the data in the following ways:

- Real-time event stream

- ANSI SQL access to real-time stream and historical data

- Batch historical exports

Which solution should you use?

- A . Cloud Dataflow, Cloud SQL, Cloud Spanner

- B . Cloud Pub/Sub, Cloud Storage, BigQuery

- C . Cloud Dataproc, Cloud Dataflow, BigQuery

- D . Cloud Pub/Sub, Cloud Dataproc, Cloud SQL

You want to process payment transactions in a point-of-sale application that will run on Google Cloud Platform. Your user base could grow exponentially, but you do not want to manage infrastructure scaling.

Which Google database service should you use?

- A . Cloud SQL

- B . BigQuery

- C . Cloud Bigtable

- D . Cloud Datastore

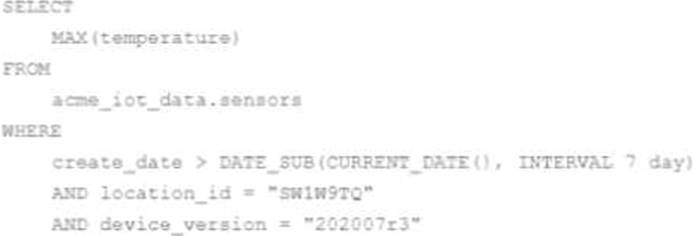

You are collecting loT sensor data from millions of devices across the world and storing the data in BigQuery.

Your access pattern is based on recent data tittered by location_id and device_version with the following query:

You want to optimize your queries for cost and performance.

How should you structure your data?

- A . Partition table data by create_date, location_id and device_version

- B . Partition table data by create_date; cluster table data by location_id and device_version

- C . Cluster table data by create_date, location_id and device_version

- D . Cluster table data by create_date, partition by location and device_version

Which of the following is not possible using primitive roles?

- A . Give a user viewer access to BigQuery and owner access to Google Compute Engine instances.

- B . Give UserA owner access and UserB editor access for all datasets in a project.

- C . Give a user access to view all datasets in a project, but not run queries on them.

- D . Give GroupA owner access and GroupB editor access for all datasets in a project.

C

Explanation:

Primitive roles can be used to give owner, editor, or viewer access to a user or group, but they can’t be used to separate data access permissions from job-running permissions.

Reference: https://cloud.google.com/bigquery/docs/access-control#primitive_iam_roles
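The explanation's point can be expressed as a set check. This sketch treats each primitive role as a bundle of abstract capabilities. The capability names are illustrative, not real IAM permission IDs. Following the explanation, it assumes that every primitive role able to view data can also run jobs. It then searches all role combinations for one that grants viewing without querying.

```python
from itertools import combinations

# Simplified model: primitive roles as bundles of abstract capabilities.
# Capability names are illustrative, not real IAM permission IDs.
PRIMITIVE_ROLES = {
    "viewer": {"view_data", "run_jobs"},
    "editor": {"view_data", "modify_data", "run_jobs"},
    "owner": {"view_data", "modify_data", "run_jobs", "manage_access"},
}


def view_only_combinations() -> list[tuple[str, ...]]:
    """Return every combination of primitive roles that would let a user view
    data without also being able to run query jobs."""
    names = list(PRIMITIVE_ROLES)
    found = []
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            # Union of all capabilities granted by this combination of roles.
            caps = set().union(*(PRIMITIVE_ROLES[name] for name in combo))
            if "view_data" in caps and "run_jobs" not in caps:
                found.append(combo)
    return found
```

Under this model, `view_only_combinations()` returns an empty list, which mirrors answer C. Predefined roles split these concerns: BigQuery Data Viewer grants data access, while BigQuery Job User separately grants the ability to run jobs.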

You have a BigQuery table that ingests data directly from a Pub/Sub subscription. The ingested data is encrypted with a Google-managed encryption key. You need to meet a new organization policy that requires you to use keys from a centralized Cloud Key Management Service (Cloud KMS) project to encrypt data at rest.

What should you do?

- A . Create a new BigQuery table by using customer-managed encryption keys (CMEK), and migrate the data from the old BigQuery table.

- B . Create a new BigQuery table and Pub/Sub topic by using customer-managed encryption keys (CMEK), and migrate the data from the old BigQuery table.

- C . Create a new Pub/Sub topic with CMEK and use the existing BigQuery table by using Google-managed encryption key.

- D . Use Cloud KMS encryption key with Dataflow to ingest the existing Pub/Sub subscription to the existing BigQuery table.

A

Explanation:

To use CMEK for BigQuery, you need to create a key ring and a key in Cloud KMS, and then specify the key resource name when creating or updating a BigQuery table. You cannot change the encryption type of an existing table, so you need to create a new table with CMEK and copy the data from the old table, which uses a Google-managed encryption key.

Reference: Customer-managed Cloud KMS keys | BigQuery | Google Cloud

Creating and managing encryption keys | Cloud KMS Documentation | Google Cloud
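A Cloud KMS key resource name has the form `projects/PROJECT/locations/LOCATION/keyRings/KEY_RING/cryptoKeys/KEY`. The following is a minimal sketch; the helper names and project IDs are hypothetical. It builds such a name and checks that the key lives in the centralized KMS project the new policy requires, before the name is used to create the CMEK-protected table.

```python
import re

# Resource-name layout of a Cloud KMS crypto key.
KMS_KEY_PATTERN = re.compile(
    r"^projects/(?P<project>[^/]+)/locations/(?P<location>[^/]+)"
    r"/keyRings/(?P<key_ring>[^/]+)/cryptoKeys/(?P<key>[^/]+)$"
)


def kms_key_name(project: str, location: str, key_ring: str, key: str) -> str:
    """Build a Cloud KMS key resource name from caller-supplied parts."""
    return f"projects/{project}/locations/{location}/keyRings/{key_ring}/cryptoKeys/{key}"


def complies_with_policy(key_name: str, central_kms_project: str) -> bool:
    """True only for a well-formed key name in the centralized KMS project."""
    match = KMS_KEY_PATTERN.match(key_name)
    return match is not None and match.group("project") == central_kms_project
```

With the google-cloud-bigquery Python client, the resulting string would typically be passed as `kms_key_name` to `bigquery.EncryptionConfiguration` when defining the new table. Treat that wiring as an assumption to confirm against the client documentation.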