Free Online Practice Questions for the Professional Data Engineer Exam

You have spent a few days loading data from comma-separated values (CSV) files into the Google BigQuery table CLICK_STREAM. The column DT stores the epoch time of click events. For convenience, you chose a simple schema where every field is treated as the STRING type. Now you want to compute the web session durations of users who visit your site, and you want to change the data type of DT to TIMESTAMP. You want to minimize the migration effort without making future queries computationally expensive.

What should you do?

- A . Delete the table CLICK_STREAM, and then re-create it such that the column DT is of the TIMESTAMP type. Reload the data.

- B . Add a column TS of the TIMESTAMP type to the table CLICK_STREAM, and populate it with the numeric values from the column DT for each row. Reference the column TS instead of the column DT from now on.

- C . Create a view CLICK_STREAM_V, where strings from the column DT are cast into TIMESTAMP values. Reference the view CLICK_STREAM_V instead of the table CLICK_STREAM from now on.

- D . Add two columns to the table CLICK_STREAM: TS of the TIMESTAMP type and IS_NEW of the BOOLEAN type. Reload all data in append mode. For each appended row, set the value of IS_NEW to true. For future queries, reference the column TS instead of the column DT, with a WHERE clause ensuring that IS_NEW is true.

- E . Construct a query to return every row of the table CLICK_STREAM, using a built-in function to cast strings from the column DT into TIMESTAMP values. Run the query into a destination table NEW_CLICK_STREAM, in which the column TS is of the TIMESTAMP type. Reference the table NEW_CLICK_STREAM instead of the table CLICK_STREAM from now on. In the future, load new data into the table NEW_CLICK_STREAM.
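Option E pays the STRING-to-TIMESTAMP conversion cost once, when the query writes NEW_CLICK_STREAM, whereas a view (option C) re-evaluates the cast on every query. The Python sketch below mimics that one-time conversion locally with the standard library. It assumes DT holds whole seconds since the Unix epoch; the sample rows and the helpers `to_timestamp`, `materialize`, and `session_seconds` are hypothetical, and the "session" is simplified to the span between a user's first and last click. In BigQuery itself, a plain CAST of an epoch-seconds string to TIMESTAMP does not parse it as epoch time; the usual pattern is `TIMESTAMP_SECONDS(CAST(DT AS INT64))`.

```python
from datetime import datetime, timezone

# Hypothetical sample rows: DT is a STRING of epoch seconds (an assumption),
# mirroring the all-STRING schema of CLICK_STREAM.
click_stream = [
    {"user": "u1", "DT": "1700000000"},
    {"user": "u1", "DT": "1700000600"},
    {"user": "u2", "DT": "1700000300"},
]


def to_timestamp(dt_string: str) -> datetime:
    """Parse an epoch-seconds string into a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(int(dt_string), tz=timezone.utc)


def materialize(rows):
    """Convert once, like writing NEW_CLICK_STREAM with a typed TS column."""
    return [{"user": r["user"], "TS": to_timestamp(r["DT"])} for r in rows]


def session_seconds(rows):
    """Simplified session duration: per-user span from first to last click."""
    spans = {}
    for r in rows:
        first, last = spans.get(r["user"], (r["TS"], r["TS"]))
        spans[r["user"]] = (min(first, r["TS"]), max(last, r["TS"]))
    return {user: (last - first).total_seconds() for user, (first, last) in spans.items()}


new_click_stream = materialize(click_stream)
print(new_click_stream[0]["TS"].isoformat())  # 2023-11-14T22:13:20+00:00
print(session_seconds(new_click_stream))      # {'u1': 600.0, 'u2': 0.0}
```

Later duration queries then work on already-typed values instead of re-parsing strings each time.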

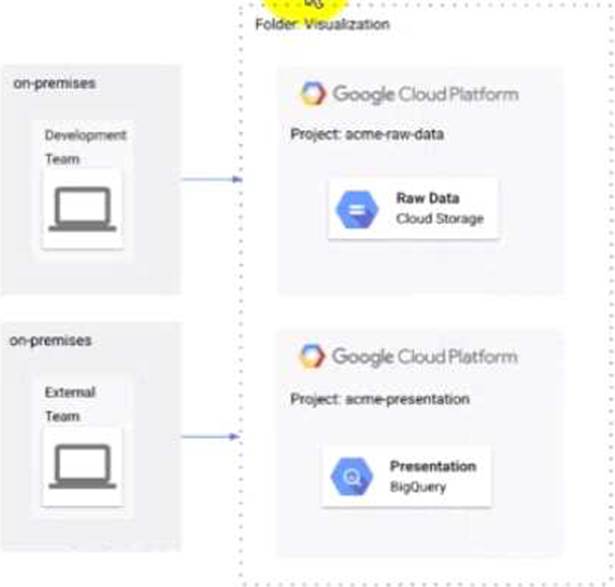

The Development and External teams have the project viewer Identity and Access Management (IAM) role in a folder named Visualization. You want the Development Team to be able to read data from both Cloud Storage and BigQuery, but the External Team should only be able to read data from BigQuery.

What should you do?

- A . Remove Cloud Storage IAM permissions from the External Team on the acme-raw-data project.

- B . Create Virtual Private Cloud (VPC) firewall rules on the acme-raw-data project that deny all ingress traffic from the External Team CIDR range.

- C . Create a VPC Service Controls perimeter containing both projects and BigQuery as a restricted API. Add the External Team users to the perimeter's Access Level.

- D . Create a VPC Service Controls perimeter containing both projects and Cloud Storage as a restricted API. Add the Development Team users to the perimeter's Access Level.

Assign the Identity and Access Management bigquerydatapolicy.maskedReader role for the BigQuery tables to the analysts

Explanation:

Cloud DLP is a service that helps you discover, classify, and protect sensitive data. It supports several de-identification techniques, such as masking, redaction, tokenization, and encryption. Format-preserving encryption (FPE) with FFX encrypts sensitive data while preserving its original format and length. Because the encryption is deterministic for a given key and context (tweak), identical input values produce identical ciphertexts, so the encrypted data can still be joined on the same field without revealing the actual values. FPE with FFX also supports partial encryption, which means you can encrypt only a portion of the data, such as the domain name of an email address. By using Cloud DLP to de-identify the email field with FPE with FFX, you ensure that the analysts can join the booking and user profile data on the email field without accessing the PII.

You can build a pipeline that de-identifies the email field by using recordTransformations in Cloud DLP, which lets you specify the fields and the de-identification transformations to apply to them. You can then load the de-identified data into a BigQuery table for analysis.

Reference: De-identify sensitive data | Cloud Data Loss Prevention Documentation; Format-preserving encryption with FFX | Cloud Data Loss Prevention Documentation; De-identify and re-identify data with the Cloud DLP API; De-identify data in a pipeline
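Joining on a protected field works only if the transformation is deterministic: with the same key, the same email must always map to the same protected value. The Python sketch below illustrates just that property with keyed HMAC-SHA256 pseudonymization from the standard library. This is a swapped-in technique, not FPE with FFX: HMAC is one-way and does not preserve the input's format or length, and it is not what Cloud DLP's recordTransformations produce. The key, the sample rows, the case normalization, and the `pseudonymize` helper are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret key; a real deployment would keep keys in a key manager.
KEY = b"example-key-do-not-use"


def pseudonymize(email: str) -> str:
    """Deterministically map an email to a keyed HMAC-SHA256 hex digest.

    Lowercasing first is an assumption so that case variants still join.
    """
    return hmac.new(KEY, email.lower().encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical booking and user-profile rows keyed by email.
bookings = [("alice@example.com", "booking-1"), ("bob@example.com", "booking-2")]
profiles = [("alice@example.com", "gold"), ("carol@example.com", "silver")]

# De-identify the join key in both datasets with the same key.
bookings_deid = [(pseudonymize(e), b) for e, b in bookings]
profiles_deid = {pseudonymize(e): tier for e, tier in profiles}

# The join still matches on the protected field; raw emails never appear.
joined = [(b, profiles_deid[k]) for k, b in bookings_deid if k in profiles_deid]
print(joined)  # [('booking-1', 'gold')]
```

If the two datasets were protected with different keys, no rows would match, which is why the same key must be used for both.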

You create an important report for your large team in Google Data Studio 360. The report uses Google BigQuery as its data source. You notice that visualizations are not showing data that is less than 1 hour old.

What should you do?

- A . Disable caching by editing the report settings.

- B . Disable caching in BigQuery by editing table details.

- C . Refresh your browser tab showing the visualizations.

- D . Clear your browser history for the past hour, then reload the tab showing the visualizations.

A

Explanation:

Reference: https://support.google.com/datastudio/answer/7020039?hl=en
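The symptom in this question, recent data not appearing for up to an hour, is what a time-based result cache produces. The toy Python model below uses a hypothetical `TtlCache` class, a hypothetical `run_query` stand-in, and a simulated clock in seconds to show why turning caching off makes new rows visible immediately. It illustrates the general mechanism only and does not model how Data Studio actually stores or expires its cache.

```python
class TtlCache:
    """Toy result cache that serves a stored value until it is ttl_seconds old."""

    def __init__(self, ttl_seconds, enabled=True):
        self.ttl_seconds = ttl_seconds
        self.enabled = enabled
        self._value = None
        self._fetched_at = None

    def get(self, now, fetch):
        """Return the cached value, calling fetch() if caching is off or the entry expired."""
        expired = self._fetched_at is None or now - self._fetched_at >= self.ttl_seconds
        if not self.enabled or expired:
            self._value = fetch()
            self._fetched_at = now
        return self._value


# Hypothetical source table whose row count grows over time.
table = {"rows": 100}


def run_query():
    """Stand-in for the BigQuery query a report issues."""
    return table["rows"]


cached = TtlCache(ttl_seconds=3600)
first = cached.get(now=0, fetch=run_query)       # queried: 100
table["rows"] = 150                              # new rows arrive before the next read
stale = cached.get(now=1800, fetch=run_query)    # served from cache: still 100

uncached = TtlCache(ttl_seconds=3600, enabled=False)
fresh = uncached.get(now=1800, fetch=run_query)  # always re-queried: 150
print(first, stale, fresh)  # 100 100 150
```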

You operate a logistics company, and you want to improve event delivery reliability for vehicle-based sensors. You operate small data centers around the world to capture these events, but leased lines that provide connectivity from your event collection infrastructure to your event processing infrastructure are unreliable, with unpredictable latency. You want to address this issue in the most cost-effective way.

What should you do?

- A . Deploy small Kafka clusters in your data centers to buffer events.

- B . Have the data acquisition devices publish data to Cloud Pub/Sub.

- C . Establish a Cloud Interconnect between all remote data centers and Google.

- D . Write a Cloud Dataflow pipeline that aggregates all data in session windows.

An online retailer has built their current application on Google App Engine. A new initiative at the company mandates that they extend their application to allow their customers to transact directly via the application.

They need to manage their shopping transactions and analyze combined data from multiple datasets using a business intelligence (BI) tool. They want to use only a single database for this purpose.

Which Google Cloud database should they choose?

- A . BigQuery

- B . Cloud SQL

- C . Cloud Bigtable

- D . Cloud Datastore

B

Explanation:

Reference: https://cloud.google.com/solutions/business-intelligence/

Which Java SDK class can you use to run your Dataflow programs locally?

- A . LocalRunner

- B . DirectPipelineRunner

- C . MachineRunner

- D . LocalPipelineRunner

B

Explanation:

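DirectPipelineRunner executes the operations in the pipeline directly on the local machine, without any optimization. It is useful for small local runs and tests. In the Apache Beam SDKs that succeeded the Dataflow SDK 1.x, the equivalent local runner is DirectRunner.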

Reference: https://cloud.google.com/dataflow/java-sdk/JavaDoc/com/google/cloud/dataflow/sdk/runners/DirectPipelineRunner

You are testing a Dataflow pipeline to ingest and transform text files. The files are gzip-compressed, errors are written to a dead-letter queue, and you are using SideInputs to join data.

You notice that the pipeline is taking longer to complete than expected. What should you do to expedite the Dataflow job?

- A . Switch to compressed Avro files

- B . Reduce the batch size

- C . Retry records that throw an error

- D . Use CoGroupByKey instead of the SideInput

You are a retailer that wants to integrate your online sales capabilities with different in-home assistants, such as Google Home. You need to interpret customer voice commands and issue an order to the backend systems.

Which solutions should you choose?

- A . Cloud Speech-to-Text API

- B . Cloud Natural Language API

- C . Dialogflow Enterprise Edition

- D . Cloud AutoML Natural Language
