Practice Free Professional Data Engineer Exam Online Questions

You have uploaded 5 years of log data to Cloud Storage. A user reported that some data points in the log data are outside of their expected ranges, which indicates errors. You need to address this issue and be able to run the process again in the future while keeping the original data for compliance reasons.

What should you do?

- A . Import the data from Cloud Storage into BigQuery. Create a new BigQuery table, and skip the rows with errors.

- B . Create a Compute Engine instance and create a new copy of the data in Cloud Storage. Skip the rows with errors.

- C . Create a Cloud Dataflow workflow that reads the data from Cloud Storage, checks for values outside the expected range, sets the value to an appropriate default, and writes the updated records to a new dataset in Cloud Storage.

- D . Create a Cloud Dataflow workflow that reads the data from Cloud Storage, checks for values outside the expected range, sets the value to an appropriate default, and writes the updated records to the same dataset in Cloud Storage.
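The per-record check that options C and D describe can be sketched in plain Python. This is only an illustration of the range-check-and-default step, not a Dataflow pipeline; the field name `value`, the bounds, and the default are hypothetical.

```python
# Hypothetical bounds and default; real values depend on the log schema.
EXPECTED_MIN = 0.0
EXPECTED_MAX = 100.0
DEFAULT_VALUE = 0.0


def clean_record(record):
    """Return a copy of the record with an out-of-range value replaced."""
    cleaned = dict(record)  # copy, so the original record is left untouched
    value = cleaned["value"]
    if not (EXPECTED_MIN <= value <= EXPECTED_MAX):
        cleaned["value"] = DEFAULT_VALUE
    return cleaned


# Hypothetical sample records standing in for log lines read from storage.
original = [
    {"id": 1, "value": 42.0},
    {"id": 2, "value": -7.5},
    {"id": 3, "value": 250.0},
]
cleaned = [clean_record(r) for r in original]
print(cleaned)
```

Because `clean_record` returns a copy, the input records are unchanged after the run, which mirrors the requirement to keep the original data while producing a corrected version.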

What are all of the BigQuery operations that Google charges for?

- A . Storage, queries, and streaming inserts

- B . Storage, queries, and loading data from a file

- C . Storage, queries, and exporting data

- D . Queries and streaming inserts

A

Explanation:

Google charges for storage, queries, and streaming inserts. Loading data from a file and exporting data are free operations.

Reference: https://cloud.google.com/bigquery/pricing

You need to look at BigQuery data from a specific table multiple times a day. The underlying table you are querying is several petabytes in size, but you want to filter your data and provide simple aggregations to downstream users. You want to run queries faster and get up-to-date insights quicker.

What should you do?

- A . Run a scheduled query to pull the necessary data at specific intervals daily.

- B . Create a materialized view based off of the query being run.

- C . Use a cached query to accelerate time to results.

- D . Limit the query columns being pulled in the final result.

B

Explanation:

Materialized views are precomputed views that periodically cache the results of a query for increased performance and efficiency. BigQuery leverages precomputed results from materialized views and whenever possible reads only changes from the base tables to compute up-to-date results. Materialized views can significantly improve the performance of workloads that have the characteristic of common and repeated queries. Materialized views can also optimize queries with high computation cost and small dataset results, such as filtering and aggregating large tables. Materialized views are refreshed automatically when the base tables change, so they always return fresh data. Materialized views can also be used by the BigQuery optimizer to process queries to the base tables, if any part of the query can be resolved by querying the materialized view.

Reference:

- Introduction to materialized views

- Create materialized views

- BigQuery Materialized View Simplified: Steps to Create and 3 Best Practices

- Materialized view in Bigquery
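The idea in the explanation above, keeping a precomputed aggregate and reading only the changes to bring it up to date, can be illustrated with a toy Python class. This is a conceptual sketch, not how BigQuery implements materialized views; the class name `SumByKeyView`, the sample rows, and the assumption of an append-only base table are all hypothetical.

```python
from collections import defaultdict


class SumByKeyView:
    """Toy stand-in for a materialized view of SUM(amount) GROUP BY key."""

    def __init__(self):
        self.totals = defaultdict(int)  # the precomputed aggregate
        self.rows_seen = 0              # rows of the base table already folded in

    def refresh(self, base_table):
        """Fold in only the rows appended since the last refresh."""
        new_rows = base_table[self.rows_seen:]
        for key, amount in new_rows:
            self.totals[key] += amount
        self.rows_seen = len(base_table)
        return len(new_rows)  # number of rows actually read


base_table = [("north", 10), ("south", 5), ("north", 7)]
view = SumByKeyView()
first_read = view.refresh(base_table)   # reads all three rows

base_table.append(("south", 3))
second_read = view.refresh(base_table)  # reads only the new row

print(dict(view.totals), first_read, second_read)
```

The second refresh touches one row rather than rescanning the table, which is the property that makes repeated filter-and-aggregate queries over a very large table cheaper.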

You are building a model to make clothing recommendations. You know a user’s fashion preference is likely to change over time, so you build a data pipeline to stream new data back to the model as it becomes available.

How should you use this data to train the model?

- A . Continuously retrain the model on just the new data.

- B . Continuously retrain the model on a combination of existing data and the new data.

- C . Train on the existing data while using the new data as your test set.

- D . Train on the new data while using the existing data as your test set.

C

Explanation:

https://cloud.google.com/automl-tables/docs/prepare

You designed a database for patient records as a pilot project to cover a few hundred patients in three clinics. Your design used a single database table to represent all patients and their visits, and you used self-joins to generate reports. The server resource utilization was at 50%. Since then, the scope of the project has expanded. The database must now store 100 times more patient records. You can no longer run the reports, because they either take too long or they encounter errors with insufficient compute resources.

How should you adjust the database design?

- A . Add capacity (memory and disk space) to the database server by the order of 200.

- B . Shard the tables into smaller ones based on date ranges, and only generate reports with prespecified date ranges.

- C . Normalize the master patient-record table into the patient table and the visits table, and create other necessary tables to avoid self-join.

- D . Partition the table into smaller tables, with one for each clinic. Run queries against the smaller table pairs, and use unions for consolidated reports.

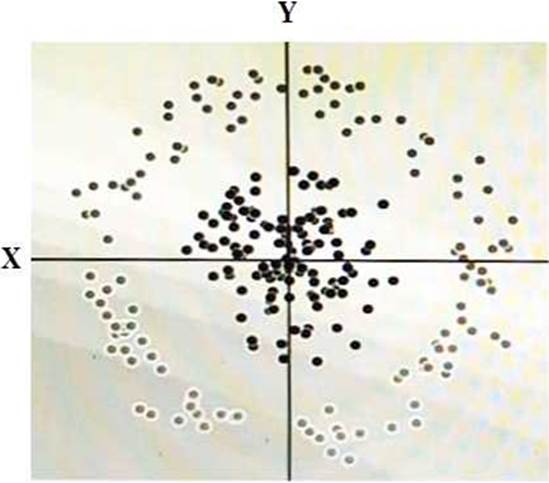

You have some data, which is shown in the graphic below. The two dimensions are X and Y, and the shade of each dot represents what class it is. You want to classify this data accurately using a linear algorithm.

To do this you need to add a synthetic feature.

What should the value of that feature be?

- A . X^2+Y^2

- B . X^2

- C . Y^2

- D . cos(X)
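To see how a synthetic feature can make data linearly separable, here is a small Python sketch. It assumes the two classes form concentric rings around the origin; that layout, the radii, and the threshold are assumptions made for illustration, since the graphic itself is not reproduced in the text.

```python
import math


def ring(radius, n):
    """Return n points evenly spaced on a circle of the given radius."""
    return [
        (radius * math.cos(2 * math.pi * i / n),
         radius * math.sin(2 * math.pi * i / n))
        for i in range(n)
    ]


inner = ring(1.0, 8)  # hypothetical class 0
outer = ring(3.0, 8)  # hypothetical class 1


def synthetic(point):
    """Squared distance from the origin: X^2 + Y^2."""
    x, y = point
    return x * x + y * y


THRESHOLD = 5.0  # hypothetical cut between 1^2 and 3^2

inner_labels = [int(synthetic(p) > THRESHOLD) for p in inner]
outer_labels = [int(synthetic(p) > THRESHOLD) for p in outer]
print(inner_labels, outer_labels)

# On X alone the classes overlap, so no single threshold on X separates them.
x_overlap = min(x for x, _ in outer) < max(x for x, _ in inner)
print(x_overlap)
```

Under this assumption, a single threshold on the new feature separates the rings, while the raw X coordinate does not.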

Which of the following is not true about Dataflow pipelines?

- A . Pipelines are a set of operations

- B . Pipelines represent a data processing job

- C . Pipelines represent a directed graph of steps

- D . Pipelines can share data between instances

D

Explanation:

The data and transforms in a pipeline are unique to, and owned by, that pipeline. While your program can create multiple pipelines, pipelines cannot share data or transforms.

Reference: https://cloud.google.com/dataflow/model/pipelines

You are designing a data warehouse in BigQuery to analyze sales data for a telecommunication service provider. You need to create a data model for customers, products, and subscriptions. All customers, products, and subscriptions can be updated monthly, but you must maintain a historical record of all data. You plan to use the visualization layer for current and historical reporting. You need to ensure that the data model is simple, easy to use, and cost-effective.

What should you do?

- A . Create a normalized model with tables for each entity. Use snapshots before updates to track historical data.

- B . Create a normalized model with tables for each entity. Keep all input files in a Cloud Storage bucket to track historical data.

- C . Create a denormalized model with nested and repeated fields. Update the table and use snapshots to track historical data.

- D . Create a denormalized, append-only model with nested and repeated fields. Use the ingestion timestamp to track historical data.

D

Explanation:

- A denormalized, append-only model simplifies query complexity by eliminating the need for joins.

- Adding data with an ingestion timestamp allows for easy retrieval of both current and historical states.

- Instead of updating records, new records are appended, which maintains historical information without the need to create separate snapshots.
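The append-only pattern described above can be sketched in Python. This is a minimal illustration, not BigQuery code; the row layout, the customer IDs, and the function name `state_as_of` are hypothetical.

```python
from datetime import date

# Append-only rows: (customer_id, plan, ingestion_date). Hypothetical sample data.
rows = [
    ("c1", "basic", date(2024, 1, 1)),
    ("c2", "basic", date(2024, 1, 1)),
    ("c1", "premium", date(2024, 2, 1)),  # c1 changes plan; the old row is kept
]


def state_as_of(rows, as_of):
    """Latest row per customer among rows ingested on or before as_of."""
    latest = {}
    for customer_id, plan, ingested in sorted(rows, key=lambda r: r[2]):
        if ingested <= as_of:
            latest[customer_id] = plan  # later ingestions overwrite earlier ones
    return latest


january = state_as_of(rows, date(2024, 1, 31))   # historical view
current = state_as_of(rows, date(2024, 12, 31))  # current view
print(january, current)
```

Both the historical and the current view come from the same table by filtering on the ingestion timestamp, so no update statements or separate snapshots are involved.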

You are building a data pipeline on Google Cloud. You need to prepare data using a casual method for a machine-learning process. You want to support a logistic regression model. You also need to monitor and adjust for null values, which must remain real-valued and cannot be removed.

What should you do?

- A . Use Cloud Dataprep to find null values in sample source data. Convert all nulls to ‘none’ using a Cloud Dataproc job.

- B . Use Cloud Dataprep to find null values in sample source data. Convert all nulls to 0 using a Cloud Dataprep job.

- C . Use Cloud Dataflow to find null values in sample source data. Convert all nulls to ‘none’ using a Cloud Dataprep job.

- D . Use Cloud Dataflow to find null values in sample source data. Convert all nulls to 0 using a custom script.