Practice Free Professional Cloud Developer Exam Online Questions

You have an application deployed in production. When a new version is deployed, you want to ensure that all production traffic is routed to the new version of your application. You also want to keep the previous version deployed so that you can revert to it if there is an issue with the new version.

Which deployment strategy should you use?

- A . Blue/green deployment

- B . Canary deployment

- C . Rolling deployment

- D . Recreate deployment

You work for a financial services company that has a container-first approach. Your team develops microservices applications. You have a Cloud Build pipeline that creates a container image, runs regression tests, and publishes the image to Artifact Registry. You need to ensure that only containers that have passed the regression tests are deployed to Google Kubernetes Engine (GKE) clusters. You have already enabled Binary Authorization on the GKE clusters.

What should you do next?

- A . Deploy the Voucher Server and Voucher Client components. After a container image has passed the regression tests, run Voucher Client as a step in the Cloud Build pipeline.

- B . Set the Pod Security Standard level to Restricted for the relevant namespaces. Digitally sign the container images that have passed the regression tests as a step in the Cloud Build pipeline.

- C . Create an attestor and a policy. Create an attestation for the container images that have passed the regression tests as a step in the Cloud Build pipeline.

- D . Create an attestor and a policy. Run a vulnerability scan to create an attestation for the container image as a step in the Cloud Build pipeline.

Your existing application keeps user state information in a single MySQL database. This state information is very user-specific and depends heavily on how long a user has been using the application. The MySQL database makes it difficult to maintain and enhance the schema for various users.

Which storage option should you choose?

- A . Cloud SQL

- B . Cloud Storage

- C . Cloud Spanner

- D . Cloud Datastore/Firestore

A

Explanation:

Reference: https://cloud.google.com/solutions/migrating-mysql-to-cloudsql-concept

You have a mixture of packaged and internally developed applications hosted on a Compute Engine instance that is running Linux. These applications write log records as text in local files. You want the logs to be written to Cloud Logging.

What should you do?

- A . Pipe the content of the files to the Linux Syslog daemon.

- B . Install a Google version of fluentd on the Compute Engine instance.

- C . Install a Google version of collectd on the Compute Engine instance.

- D . Using cron, schedule a job to copy the log files to Cloud Storage once a day.

B

Explanation:

Reference: https://cloud.google.com/logging/docs/agent/logging/configuration

For this question, refer to the HipLocal case study.

HipLocal wants to reduce the latency of their services for users in global locations. They have created read replicas of their database in the locations where their users reside and configured their services to send read traffic to those replicas.

How should they further reduce latency for all database interactions with the least amount of effort?

- A . Migrate the database to Bigtable and use it to serve all global user traffic.

- B . Migrate the database to Cloud Spanner and use it to serve all global user traffic.

- C . Migrate the database to Firestore in Datastore mode and use it to serve all global user traffic.

- D . Migrate the services to Google Kubernetes Engine and use a load balancer service to better scale the application.

HipLocal has connected their Hadoop infrastructure to GCP using Cloud Interconnect in order to query data stored on persistent disks.

Which IP strategy should they use?

- A . Create manual subnets.

- B . Create an auto mode subnet.

- C . Create multiple peered VPCs.

- D . Provision a single instance for NAT.

Your application is composed of a set of loosely coupled services orchestrated by code executed on Compute Engine. You want your application to easily bring up new Compute Engine instances that find and use a specific version of a service.

How should this be configured?

- A . Define your service endpoint information as metadata that is retrieved at runtime and used to connect to the desired service.

- B . Define your service endpoint information as label data that is retrieved at runtime and used to connect to the desired service.

- C . Define your service endpoint information to be retrieved from an environment variable at runtime and used to connect to the desired service.

- D . Define your service to use a fixed hostname and port to connect to the desired service. Replace the service at the endpoint with your new version.

A

Explanation:

Reference: https://cloud.google.com/service-infrastructure/docs/service-metadata/reference/rest#service-endpoint
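To illustrate answer A, here is a minimal sketch of how code on a Compute Engine instance might read a service endpoint from custom instance metadata at runtime. It assumes the endpoint was stored under a hypothetical key named `orders-service-endpoint`; the key name, the example address, and the `get_service_endpoint` helper are illustrative only. The metadata server URL and the required `Metadata-Flavor: Google` header are the standard Compute Engine metadata conventions.

```python
import urllib.request

# Custom instance metadata is served under this path by the Compute Engine
# metadata server (project-wide keys live under .../project/attributes/).
METADATA_BASE = (
    "http://metadata.google.internal/computeMetadata/v1/instance/attributes/"
)


def _http_fetch(req):
    """Perform the real HTTP call; this only works from inside a Compute Engine VM."""
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read().decode("utf-8")


def get_service_endpoint(key, fetch=_http_fetch):
    """Look up the endpoint of a service version stored in instance metadata.

    `key` is a hypothetical custom metadata key such as
    "orders-service-endpoint"; `fetch` can be replaced with a fake for testing.
    """
    req = urllib.request.Request(
        METADATA_BASE + key,
        # The metadata server rejects requests that lack this header.
        headers={"Metadata-Flavor": "Google"},
    )
    return fetch(req).strip()


if __name__ == "__main__":
    endpoint = get_service_endpoint("orders-service-endpoint")
    print(f"Connecting to {endpoint}")
```

Because the endpoint lives in metadata rather than in code, a new instance can be pointed at a specific service version when it is created, for example with `gcloud compute instances create my-vm --metadata=orders-service-endpoint=http://10.128.0.5:8080` (instance name and address hypothetical), without rebuilding the application.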

You recently migrated a monolithic application to Google Cloud by breaking it down into microservices. One of the microservices is deployed using Cloud Functions. As you modernize the application, you make a change to the API of the service that is backward-incompatible. You need to support both existing callers who use the original API and new callers who use the new API.

What should you do?

- A . Leave the original Cloud Function as-is and deploy a second Cloud Function with the new API. Use a load balancer to distribute calls between the versions.

- B . Leave the original Cloud Function as-is and deploy a second Cloud Function that includes only the changed API. Calls are automatically routed to the correct function.

- C . Leave the original Cloud Function as-is and deploy a second Cloud Function with the new API. Use Cloud Endpoints to provide an API gateway that exposes a versioned API.

- D . Re-deploy the Cloud Function after making code changes to support the new API. Requests for both versions of the API are fulfilled based on a version identifier included in the call.

D

Explanation:

Reference: https://cloud.google.com/endpoints/docs/openapi/versioning-an-api
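Answer D relies on a single function serving both API versions based on a version identifier in the call. A minimal sketch of that dispatch pattern follows, assuming the identifier arrives in a hypothetical `X-API-Version` header and that callers who omit it are existing v1 callers. The handler names and the user-name payload shape are invented for illustration; `entry` follows the usual Python HTTP Cloud Function shape of receiving a Flask request and returning a `(body, status, headers)` tuple.

```python
import json


def _get_user_v1(payload):
    # Original (v1) response shape: a single flat "name" string.
    return {"name": f"{payload['first']} {payload['last']}"}


def _get_user_v2(payload):
    # New, backward-incompatible (v2) shape: structured name fields.
    return {"name": {"first": payload["first"], "last": payload["last"]}}


HANDLERS = {"v1": _get_user_v1, "v2": _get_user_v2}
DEFAULT_VERSION = "v1"  # existing callers send no version identifier


def dispatch(version, payload):
    """Route a call to the handler for the requested API version."""
    handler = HANDLERS.get(version or DEFAULT_VERSION)
    if handler is None:
        return 400, {"error": f"unsupported API version: {version}"}
    return 200, handler(payload)


def entry(request):
    """HTTP entry point; `request` is the Flask request Cloud Functions passes in."""
    status, body = dispatch(
        request.headers.get("X-API-Version"),  # hypothetical header name
        request.get_json(silent=True) or {},
    )
    return json.dumps(body), status, {"Content-Type": "application/json"}
```

Keeping the default version at v1 is what lets existing callers continue unchanged, while new callers opt into v2 explicitly.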

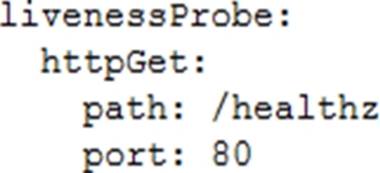

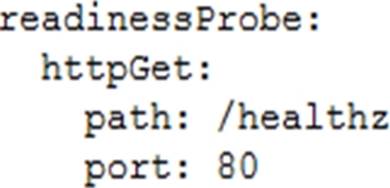

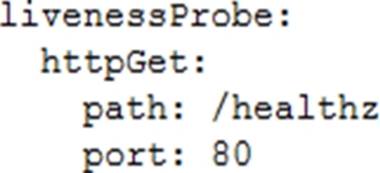

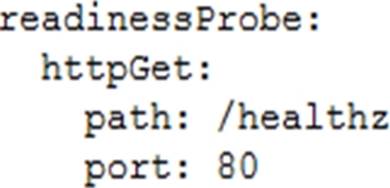

You are planning to deploy your application in a Google Kubernetes Engine (GKE) cluster. The application exposes an HTTP-based health check at /healthz. You want to use this health check endpoint to determine whether traffic should be routed to the pod by the load balancer.

Which code snippet should you include in your Pod configuration?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

B

Explanation:

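Use a readinessProbe, not a livenessProbe: Kubernetes uses the readiness probe to decide whether a Pod should receive traffic, whereas a failing liveness probe only causes the container to be restarted. For the GKE Ingress controller to use your readiness probes as health checks, the Pods for an Ingress must exist at the time the Ingress is created. If your replicas are scaled to 0, the default health check applies.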
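Because the answer options are only available as images, here is a sketch of what a Pod configuration with an HTTP readiness probe on `/healthz` can look like. It builds the manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f pod.json` accepts just like YAML. The Pod name, labels, image path, container port 8080, and the probe timing values are assumptions chosen for illustration.

```python
import json


def pod_manifest(image, port=8080):
    """Build a Pod manifest whose readiness probe hits the app's /healthz endpoint.

    The name "web", the labels, the default port, and the timing values are
    hypothetical; adjust them to match the real application.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "web", "labels": {"app": "web"}},
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "image": image,
                    "ports": [{"containerPort": port}],
                    # A readiness probe gates traffic: while it fails, the Pod is
                    # removed from Service endpoints and receives no requests.
                    "readinessProbe": {
                        "httpGet": {"path": "/healthz", "port": port},
                        "initialDelaySeconds": 5,
                        "periodSeconds": 10,
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical Artifact Registry image path.
    print(json.dumps(pod_manifest("us-docker.pkg.dev/my-project/my-repo/web:1.0"), indent=2))
```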

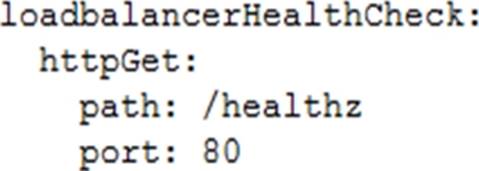

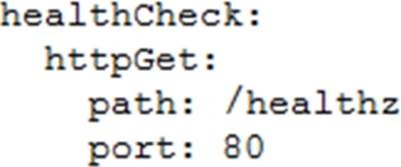

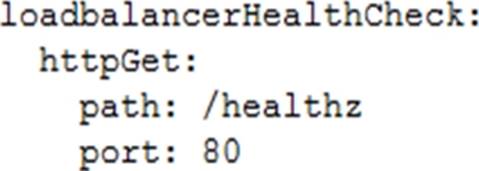

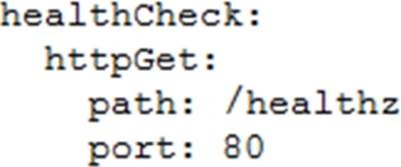

You are planning to deploy your application in a Google Kubernetes Engine (GKE) cluster. The application exposes an HTTP-based health check at /healthz. You want to use this health check endpoint to determine whether traffic should be routed to the pod by the load balancer.

Which code snippet should you include in your Pod configuration?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

B

Explanation:

For the GKE ingress controller to use your readiness Probes as health checks, the Pods for an Ingress must exist at the time of Ingress creation. If your replicas are scaled to 0, the default health check will apply.