Practice Free MLS-C01 Exam Online Questions

A Data Scientist is developing a machine learning model to classify whether a financial transaction is fraudulent. The labeled data available for training consists of 100,000 non-fraudulent observations and 1,000 fraudulent observations.

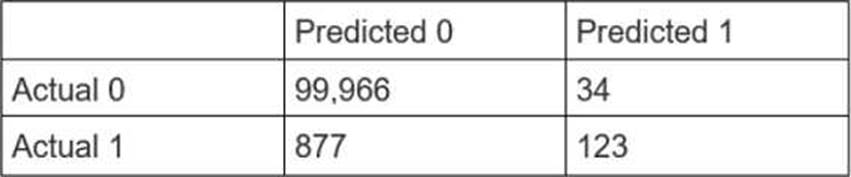

The Data Scientist applies the XGBoost algorithm to the data, resulting in the following confusion matrix when the trained model is applied to a previously unseen validation dataset.

The accuracy of the model is 99.1%, but the Data Scientist has been asked to reduce the number of false negatives.

Which combination of steps should the Data Scientist take to reduce the number of false negative predictions by the model? (Select TWO.)

- A . Change the XGBoost eval_metric parameter to optimize based on rmse instead of error.

- B . Increase the XGBoost scale_pos_weight parameter to adjust the balance of positive and negative weights.

- C . Increase the XGBoost max_depth parameter because the model is currently underfitting the data.

- D . Change the XGBoost eval_metric parameter to optimize based on AUC instead of error.

- E . Decrease the XGBoost max_depth parameter because the model is currently overfitting the data.

B, D

Explanation:

The XGBoost algorithm is a popular machine learning technique for classification problems. It is based on the idea of boosting, which is to combine many weak learners (decision trees) into a strong learner (ensemble model).

The XGBoost algorithm can handle imbalanced data by using the scale_pos_weight parameter, which controls the balance of positive and negative weights in the objective function. A typical value to consider is the ratio of negative cases to positive cases in the data. By increasing this parameter, the algorithm will pay more attention to the minority class (positive) and reduce the number of false negatives.
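As a quick worked example of the ratio described above, the following sketch computes a starting value for scale_pos_weight from the class counts in the question. The helper name `suggested_scale_pos_weight` and the other hyperparameter values are illustrative assumptions, not part of the original question; the result is a starting point for tuning rather than a guaranteed optimum.

```python
# Class counts taken from the question text.
N_NEGATIVE = 100_000  # non-fraudulent observations
N_POSITIVE = 1_000    # fraudulent observations


def suggested_scale_pos_weight(n_negative: int, n_positive: int) -> float:
    """Return the negative-to-positive ratio, a common starting value."""
    if n_positive <= 0:
        raise ValueError("need at least one positive example")
    return n_negative / n_positive


# Accuracy of a useless model that labels every transaction non-fraudulent.
baseline_accuracy = N_NEGATIVE / (N_NEGATIVE + N_POSITIVE)

# Hyperparameters as they might be passed to XGBoost (illustrative values).
params = {
    "objective": "binary:logistic",
    "eval_metric": "auc",
    "scale_pos_weight": suggested_scale_pos_weight(N_NEGATIVE, N_POSITIVE),
}

print(params["scale_pos_weight"])   # 100.0
print(round(baseline_accuracy, 4))  # 0.9901
```

The baseline figure shows why the reported 99.1% accuracy says little here: predicting "non-fraudulent" for every transaction already scores about 99.0%.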

XGBoost can also evaluate the model against different metrics. In this scenario the metric is error, which is the misclassification rate. This metric can be misleading for imbalanced data, because it does not account for the different costs of false positives and false negatives. A better metric to use is AUC, which is the area under the receiver operating characteristic (ROC) curve. The ROC curve plots the true positive rate against the false positive rate for different threshold values. The AUC measures how well the model can distinguish between the two classes, regardless of the threshold. The eval_metric parameter does not change the training objective itself; setting it to auc means that validation, early stopping, and hyperparameter tuning favor the model that best separates the two classes rather than the one with the lowest overall error rate, which helps reduce the number of false negatives.
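To make the threshold-independence of AUC concrete, here is a minimal pure-Python sketch that computes AUC as the fraction of (positive, negative) pairs that the scores rank correctly. The function name `roc_auc` and the toy labels and scores are assumptions for illustration; in practice a library implementation would normally be used.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A tie between a positive and a negative score counts as half a pair.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy example: one fraudulent case (0.35) is scored below a legitimate one (0.4).
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

No classification threshold appears anywhere in the calculation, which is why AUC stays the same whether the cut-off is later set at 0.5 or moved lower to catch more fraud.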

Therefore, the combination of steps that should be taken to reduce the number of false negatives is to increase the scale_pos_weight parameter and change the eval_metric parameter to AUC.

References:

XGBoost Parameters

XGBoost for Imbalanced Classification

A Machine Learning Specialist is working with multiple data sources containing billions of records that need to be joined.

What feature engineering and model development approach should the Specialist take with a dataset this large?

- A . Use an Amazon SageMaker notebook for both feature engineering and model development

- B . Use an Amazon SageMaker notebook for feature engineering and Amazon ML for model development

- C . Use Amazon EMR for feature engineering and Amazon SageMaker SDK for model development

- D . Use Amazon ML for both feature engineering and model development.

C

Explanation:

Amazon EMR is a service that can process large amounts of data efficiently and cost-effectively. It can run distributed frameworks such as Apache Spark, which can perform feature engineering on big data. Amazon SageMaker SDK is a Python library that can interact with Amazon SageMaker service to train and deploy machine learning models. It can also use Amazon EMR as a data source for training data.

References:

Amazon EMR

Amazon SageMaker SDK

A machine learning (ML) specialist needs to extract embedding vectors from a text series. The goal is to provide a ready-to-ingest feature space for a data scientist to develop downstream ML predictive models. The text consists of curated sentences in English. Many sentences use similar words but in different contexts. There are questions and answers among the sentences, and the embedding space must differentiate between them.

Which options can produce the required embedding vectors that capture word context and sequential QA information? (Choose two.)

- A . Amazon SageMaker seq2seq algorithm

- B . Amazon SageMaker BlazingText algorithm in Skip-gram mode

- C . Amazon SageMaker Object2Vec algorithm

- D . Amazon SageMaker BlazingText algorithm in continuous bag-of-words (CBOW) mode

- E . Combination of the Amazon SageMaker BlazingText algorithm in Batch Skip-gram mode with a custom recurrent neural network (RNN)

B, E

Explanation:

To capture word context and sequential QA information, the embedding vectors need to consider both the order and the meaning of the words in the text.

Option B, Amazon SageMaker BlazingText algorithm in Skip-gram mode, is a valid option because it can learn word embeddings that capture the semantic similarity and syntactic relations between words based on their co-occurrence in a window of words. Skip-gram mode can also handle rare words better than continuous bag-of-words (CBOW) mode [1].

Option E, combination of the Amazon SageMaker BlazingText algorithm in Batch Skip-gram mode with a custom recurrent neural network (RNN), is another valid option because it can leverage the advantages of Skip-gram mode and also use an RNN to model the sequential nature of the text. An RNN can capture the temporal and long-term dependencies between words, which are important for QA tasks [2].

Option A, Amazon SageMaker seq2seq algorithm, is not a valid option because it is designed for sequence-to-sequence tasks such as machine translation, summarization, or chatbots. It does not produce embedding vectors for text series, but rather generates an output sequence given an input sequence [3].

Option C, Amazon SageMaker Object2Vec algorithm, is not a valid option because it is designed for learning embeddings for pairs of objects, such as sentence-sentence, label-sequence, or user-item pairs. It does not produce embedding vectors for text series, but rather learns a similarity function between pairs of objects [4].

Option D, Amazon SageMaker BlazingText algorithm in continuous bag-of-words (CBOW) mode, is not a valid option because it does not capture word context as well as Skip-gram mode. CBOW mode predicts a word given its surrounding words, while Skip-gram mode predicts the surrounding words given a word. CBOW mode is faster and more suitable for frequent words, but Skip-gram mode can learn more meaningful embeddings for rare words [1].
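The difference between the two Word2vec training modes described above can be sketched by generating the training examples each mode would see. This is an illustration of the general Word2vec idea under assumed helper names (`context_window`, `skipgram_pairs`, `cbow_pairs`); it is not BlazingText's internal implementation.

```python
from typing import List, Tuple


def context_window(tokens: List[str], i: int, window: int) -> List[str]:
    """Return the tokens within `window` positions of index i, excluding i."""
    lo = max(0, i - window)
    hi = min(len(tokens), i + window + 1)
    return tokens[lo:i] + tokens[i + 1:hi]


def skipgram_pairs(tokens: List[str], window: int = 1) -> List[Tuple[str, str]]:
    """Skip-gram: each (center, context) pair predicts one context word."""
    return [
        (tokens[i], ctx)
        for i in range(len(tokens))
        for ctx in context_window(tokens, i, window)
    ]


def cbow_pairs(tokens: List[str], window: int = 1) -> List[Tuple[Tuple[str, ...], str]]:
    """CBOW: the whole context is pooled to predict the center word."""
    return [
        (tuple(context_window(tokens, i, window)), tokens[i])
        for i in range(len(tokens))
    ]


sentence = ["the", "bank", "approved"]
print(skipgram_pairs(sentence))
print(cbow_pairs(sentence))
```

Skip-gram produces one training example per (center, context) pair, so a rare word still contributes several updates, whereas CBOW averages the context into a single example per position.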

References:

1: Amazon SageMaker BlazingText

2: Recurrent Neural Networks (RNNs)

3: Amazon SageMaker Seq2Seq

4: Amazon SageMaker Object2Vec

A manufacturing company has structured and unstructured data stored in an Amazon S3 bucket. A Machine Learning Specialist wants to use SQL to run queries on this data.

Which solution requires the LEAST effort to be able to query this data?

- A . Use AWS Data Pipeline to transform the data and Amazon RDS to run queries.

- B . Use AWS Glue to catalogue the data and Amazon Athena to run queries.

- C . Use AWS Batch to run ETL on the data and Amazon Aurora to run the queries.

- D . Use AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries.

B

Explanation:

Using AWS Glue to catalogue the data and Amazon Athena to run queries is the solution that requires the least effort to be able to query the data stored in an Amazon S3 bucket using SQL.

AWS Glue is a service that provides a serverless data integration platform for data preparation and transformation. AWS Glue can automatically discover, crawl, and catalogue the data stored in various sources, such as Amazon S3, Amazon RDS, and Amazon Redshift. AWS Glue can also use AWS KMS to encrypt the data at rest on the Glue Data Catalog and Glue ETL jobs. AWS Glue can handle both structured and unstructured data, and supports various data formats, such as CSV, JSON, and Parquet. AWS Glue can also use built-in or custom classifiers to identify and parse the data schema and format.

Amazon Athena is a service that provides an interactive query engine that can run SQL queries directly on data stored in Amazon S3. Amazon Athena can integrate with AWS Glue to use the Glue Data Catalog as a central metadata repository for the data sources and tables. Amazon Athena can also use AWS KMS to encrypt the data at rest on Amazon S3 and the query results. Amazon Athena can query both structured and unstructured data, and supports various data formats, such as CSV, JSON, and Parquet. Amazon Athena can also use partitions and compression to optimize the query performance and reduce the query cost.
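As a rough sketch of how little code the Glue plus Athena route needs once a crawler has catalogued the bucket, the helper below builds the arguments for Athena's StartQueryExecution call. The helper name `build_athena_request`, the database and table names, and the S3 output location are all hypothetical placeholders; the actual submission is shown only as a comment because it needs AWS credentials.

```python
def build_athena_request(sql: str, database: str, output_s3_uri: str) -> dict:
    """Build keyword arguments for Athena's start_query_execution call."""
    return {
        "QueryString": sql,
        # The database is the Glue Data Catalog database created by the crawler.
        "QueryExecutionContext": {"Database": database},
        # Athena writes query results to this S3 location.
        "ResultConfiguration": {"OutputLocation": output_s3_uri},
    }


request = build_athena_request(
    sql="SELECT device_id, COUNT(*) AS n FROM sensor_readings GROUP BY device_id",
    database="manufacturing_catalog",                  # hypothetical name
    output_s3_uri="s3://example-athena-results/queries/",  # hypothetical bucket
)
print(request["QueryExecutionContext"])

# With AWS credentials configured, the request could be submitted like this:
#   import boto3
#   athena = boto3.client("athena")
#   query_id = athena.start_query_execution(**request)["QueryExecutionId"]
```

No data is copied out of Amazon S3 at any point, which is the main reason this option takes less effort than loading the data into a relational database first.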

The other options are not valid or require more effort to query the data stored in an Amazon S3 bucket using SQL.

Using AWS Data Pipeline to transform the data and Amazon RDS to run queries is not a good option, as it involves moving the data from Amazon S3 to Amazon RDS, which can incur additional time and cost. AWS Data Pipeline is a service that can orchestrate and automate data movement and transformation across various AWS services and on-premises data sources. AWS Data Pipeline can be integrated with Amazon EMR to run ETL jobs on the data stored in Amazon S3. Amazon RDS is a service that provides a managed relational database service that can run various database engines, such as MySQL, PostgreSQL, and Oracle. Amazon RDS can use AWS KMS to encrypt the data at rest and in transit. Amazon RDS can run SQL queries on the data stored in the database tables.

Using AWS Batch to run ETL on the data and Amazon Aurora to run the queries is not a good option, as it also involves moving the data from Amazon S3 to Amazon Aurora, which can incur additional time and cost. AWS Batch is a service that can run batch computing workloads on AWS. AWS Batch can be integrated with AWS Lambda to trigger ETL jobs on the data stored in Amazon S3. Amazon Aurora is a service that provides a compatible and scalable relational database engine that can run MySQL or PostgreSQL. Amazon Aurora can use AWS KMS to encrypt the data at rest and in transit. Amazon Aurora can run SQL queries on the data stored in the database tables.

Using AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries is not a good option, as it is not suitable for querying data stored in Amazon S3 using SQL. AWS Lambda is a service that can run serverless functions on AWS. AWS Lambda can be integrated with Amazon S3 to trigger data transformation functions on the data stored in Amazon S3. Amazon Kinesis Data Analytics is a service that can analyze streaming data using SQL or Apache Flink. Amazon Kinesis Data Analytics can be integrated with Amazon Kinesis Data Streams or Amazon Kinesis Data Firehose to ingest streaming data sources, such as web logs, social media, and IoT devices. Amazon Kinesis Data Analytics is not designed for querying data stored in Amazon S3 using SQL.

A company uses sensors on devices such as motor engines and factory machines to measure parameters such as temperature and pressure. The company wants to use the sensor data to predict equipment malfunctions and reduce service outages.

The machine learning (ML) specialist needs to gather the sensor data to train a model to predict device malfunctions. The ML specialist must ensure that the data does not contain outliers before training the model.

How can the ML specialist meet these requirements with the LEAST operational overhead?

- A . Load the data into an Amazon SageMaker Studio notebook. Calculate the first and third quartile. Use a SageMaker Data Wrangler data flow to remove only values that are outside of those quartiles.

- B . Use an Amazon SageMaker Data Wrangler bias report to find outliers in the dataset. Use a Data Wrangler data flow to remove outliers based on the bias report.

- C . Use an Amazon SageMaker Data Wrangler anomaly detection visualization to find outliers in the dataset. Add a transformation to a Data Wrangler data flow to remove outliers.

- D . Use Amazon Lookout for Equipment to find and remove outliers from the dataset.

C

Explanation:

Amazon SageMaker Data Wrangler is a tool that helps data scientists and ML developers to prepare data for ML. One of the features of Data Wrangler is the anomaly detection visualization, which uses an unsupervised ML algorithm to identify outliers in the dataset based on statistical properties. The ML specialist can use this feature to quickly explore the sensor data and find any anomalous values that may affect the model performance. The ML specialist can then add a transformation to a Data Wrangler data flow to remove the outliers from the dataset. The data flow can be exported as a script or a pipeline to automate the data preparation process. This option requires the least operational overhead compared to the other options.
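For intuition about what an outlier-removal step does, here is a minimal standalone sketch of the common interquartile-range (IQR) rule. It is not Data Wrangler's implementation; the function name `remove_iqr_outliers`, the multiplier of 1.5, and the sample readings are assumptions chosen for illustration.

```python
import statistics
from typing import List


def remove_iqr_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Keep values within [Q1 - k*IQR, Q3 + k*IQR]; drop everything else."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lower <= v <= upper]


# Hypothetical temperature readings with one faulty spike.
readings = [10, 11, 12, 11, 10, 12, 11, 100]
cleaned = remove_iqr_outliers(readings)
print(cleaned)  # [10, 11, 12, 11, 10, 12, 11]
```

Note that the fences sit well outside the quartiles. Cutting at the first and third quartiles themselves, as option A suggests, would discard roughly half of the valid readings.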

References:

Amazon SageMaker Data Wrangler – Amazon Web Services (AWS)

Anomaly Detection Visualization – Amazon SageMaker

Transform Data – Amazon SageMaker

A data scientist is training a large PyTorch model by using Amazon SageMaker. It takes 10 hours on average to train the model on GPU instances. The data scientist suspects that training is not converging and that resource utilization is not optimal.

What should the data scientist do to identify and address training issues with the LEAST development effort?

- A . Use CPU utilization metrics that are captured in Amazon CloudWatch. Configure a CloudWatch alarm to stop the training job early if low CPU utilization occurs.

- B . Use high-resolution custom metrics that are captured in Amazon CloudWatch. Configure an AWS Lambda function to analyze the metrics and to stop the training job early if issues are detected.

- C . Use the SageMaker Debugger vanishing_gradient and LowGPUUtilization built-in rules to detect issues and to launch the StopTrainingJob action if issues are detected.

- D . Use the SageMaker Debugger confusion and feature_importance_overweight built-in rules to detect issues and to launch the StopTrainingJob action if issues are detected.

C

Explanation:

Option C is the best way to identify and address training issues with the least development effort. It involves the following steps:

Use the SageMaker Debugger vanishing_gradient and LowGPUUtilization built-in rules to detect issues. SageMaker Debugger is a feature of Amazon SageMaker that allows data scientists to monitor, analyze, and debug machine learning models during training. SageMaker Debugger provides a set of built-in rules that can automatically detect common issues and anomalies in model training, such as vanishing or exploding gradients, overfitting, underfitting, and low GPU utilization [1]. The data scientist can use the vanishing_gradient rule to check whether the gradients are becoming too small and preventing the training from converging. The data scientist can also use the LowGPUUtilization rule to check whether the GPU resources are underutilized and making the training inefficient [2].

Launch the StopTrainingJob action if issues are detected. SageMaker Debugger can also take actions based on the status of the rules. One of the actions is StopTrainingJob, which can terminate the training job if a rule is in an error state. This can help the data scientist to save time and money by stopping the training early if issues are detected [3].
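Conceptually, a vanishing-gradient check of the kind described above watches the magnitude of the gradients and flags the step at which it collapses. The sketch below is a toy stand-in for that idea, not the SageMaker Debugger API; the function name `first_vanishing_step`, the threshold of 1e-7, and the gradient history are assumptions.

```python
from typing import Iterable, Optional


def first_vanishing_step(
    gradient_means: Iterable[float], threshold: float = 1e-7
) -> Optional[int]:
    """Return the first step whose mean absolute gradient is below threshold.

    Returns None when no step falls below the threshold.
    """
    for step, mean_abs_grad in enumerate(gradient_means):
        if abs(mean_abs_grad) < threshold:
            return step
    return None


# Hypothetical mean absolute gradient recorded at successive checkpoints.
history = [3e-2, 4e-4, 9e-6, 2e-8, 1e-9]
stop_at = first_vanishing_step(history)
print(stop_at)  # 3
```

With a built-in rule, this kind of check and the resulting stop action are configured rather than hand-written, which is where the saving in development effort comes from.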

The other options are not suitable because:

Option A: Using CPU utilization metrics that are captured in Amazon CloudWatch and configuring a CloudWatch alarm to stop the training job early if low CPU utilization occurs will not identify and address training issues effectively. CPU utilization is not a good indicator of model training performance, especially for GPU instances. Moreover, CloudWatch alarms can only trigger actions based on simple thresholds, not complex rules or conditions [4].

Option B: Using high-resolution custom metrics that are captured in Amazon CloudWatch and configuring an AWS Lambda function to analyze the metrics and to stop the training job early if issues are detected will incur more development effort than using SageMaker Debugger. The data scientist will have to write the code for capturing, sending, and analyzing the custom metrics, as well as for invoking the Lambda function and stopping the training job. Moreover, this solution may not be able to detect all the issues that SageMaker Debugger can [5].

Option D: Using the SageMaker Debugger confusion and feature_importance_overweight built-in rules and launching the StopTrainingJob action if issues are detected will not identify and address training issues effectively. The confusion rule evaluates the confusion matrix of a classification model, which says nothing about whether training is converging or whether the GPUs are being used efficiently. The feature_importance_overweight rule checks whether some features have too much weight in the model, which is likewise unrelated to the convergence and resource utilization issues [2].

References:

1: Amazon SageMaker Debugger

2: Built-in Rules for Amazon SageMaker Debugger

3: Actions for Amazon SageMaker Debugger

4: Amazon CloudWatch Alarms

5: Amazon CloudWatch Custom Metrics

A company is converting a large number of unstructured paper receipts into images. The company wants to create a model based on natural language processing (NLP) to find relevant entities such as date, location, and notes, as well as some custom entities such as receipt numbers.

The company is using optical character recognition (OCR) to extract text for data labeling. However, documents are in different structures and formats, and the company is facing challenges with setting up the manual workflows for each document type. Additionally, the company trained a named entity recognition (NER) model for custom entity detection using a small sample size. This model has a very low confidence score and will require retraining with a large dataset.

Which solution for text extraction and entity detection will require the LEAST amount of effort?

- A . Extract text from receipt images by using Amazon Textract. Use the Amazon SageMaker BlazingText algorithm to train on the text for entities and custom entities.

- B . Extract text from receipt images by using a deep learning OCR model from the AWS Marketplace. Use the NER deep learning model to extract entities.

- C . Extract text from receipt images by using Amazon Textract. Use Amazon Comprehend for entity detection, and use Amazon Comprehend custom entity recognition for custom entity detection.

- D . Extract text from receipt images by using a deep learning OCR model from the AWS Marketplace. Use Amazon Comprehend for entity detection, and use Amazon Comprehend custom entity recognition for custom entity detection.

C

Explanation:

The best solution for text extraction and entity detection with the least amount of effort is to use Amazon Textract and Amazon Comprehend.

These services are:

Amazon Textract for text extraction from receipt images. Amazon Textract is a machine learning service that can automatically extract text and data from scanned documents. It can handle different structures and formats of documents, such as PDF, TIFF, PNG, and JPEG, without any preprocessing steps. It can also extract key-value pairs and tables from documents [1].

Amazon Comprehend for entity detection and custom entity detection. Amazon Comprehend is a natural language processing service that can identify entities, such as dates, locations, and notes, from unstructured text. It can also detect custom entities, such as receipt numbers, by using a custom entity recognizer that can be trained with a small amount of labeled data [2].

The other options are not suitable because they require more effort for text extraction, entity detection, or custom entity detection. For example:

Option A uses the Amazon SageMaker BlazingText algorithm to train on the text for entities and custom entities. BlazingText provides Word2vec (unsupervised) and text classification (supervised) implementations, not named entity recognition. Using it here would require a large amount of labeled data, preprocessing the data into a specific format, tuning the hyperparameters, and building the entity extraction logic on top [3].

Option B uses a deep learning OCR model from the AWS Marketplace and a NER deep learning model for text extraction and entity detection. These models are pre-trained and may not be suitable for the specific use case of receipt processing. They also require users to deploy and manage the models on Amazon SageMaker or Amazon EC2 instances [4].

Option D uses a deep learning OCR model from the AWS Marketplace for text extraction. This model has the same drawbacks as option B. It also requires users to integrate the model output with Amazon Comprehend for entity detection and custom entity detection.
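The Textract plus Comprehend flow amounts to two API calls and a little glue code. The sketch below shows only the glue: it pulls text lines out of a Textract-style response and filters a Comprehend-style entity list by confidence. The helper names, the 0.8 cut-off, and the sample responses are hypothetical; the service calls themselves appear only as comments because they need AWS credentials.

```python
def lines_from_textract(response: dict) -> list:
    """Collect the text of every LINE block in a Textract response."""
    return [
        block["Text"]
        for block in response.get("Blocks", [])
        if block.get("BlockType") == "LINE"
    ]


def confident_entities(response: dict, min_score: float = 0.8) -> list:
    """Return (type, text) for entities at or above the confidence cut-off."""
    return [
        (entity["Type"], entity["Text"])
        for entity in response.get("Entities", [])
        if entity["Score"] >= min_score
    ]


# Hand-written sample responses in the shape the two services return.
textract_response = {
    "Blocks": [
        {"BlockType": "PAGE"},
        {"BlockType": "LINE", "Text": "Seattle Store 12"},
        {"BlockType": "LINE", "Text": "Date: 2023-04-01"},
    ]
}
comprehend_response = {
    "Entities": [
        {"Type": "LOCATION", "Text": "Seattle", "Score": 0.97},
        {"Type": "DATE", "Text": "2023-04-01", "Score": 0.99},
        {"Type": "OTHER", "Text": "12", "Score": 0.41},
    ]
}

text = "\n".join(lines_from_textract(textract_response))
print(confident_entities(comprehend_response))

# With AWS credentials configured, the responses could come from:
#   import boto3
#   textract_response = boto3.client("textract").detect_document_text(
#       Document={"S3Object": {"Bucket": "example-bucket", "Name": "receipt.png"}})
#   comprehend_response = boto3.client("comprehend").detect_entities(
#       Text=text, LanguageCode="en")
```

Neither step involves hosting or training an OCR model, which is what separates this option from the AWS Marketplace alternatives.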

Reference:

1: Amazon Textract – Extract text and data from documents

2: Amazon Comprehend – Natural Language Processing (NLP) and Machine Learning (ML)

3: BlazingText – Amazon SageMaker

4: AWS Marketplace: OCR

A Machine Learning Specialist is attempting to build a linear regression model.

Given the displayed residual plot only, what is the MOST likely problem with the model?

- A . Linear regression is inappropriate. The residuals do not have constant variance.

- B . Linear regression is inappropriate. The underlying data has outliers.

- C . Linear regression is appropriate. The residuals have a zero mean.

- D . Linear regression is appropriate. The residuals have constant variance.

A

Explanation:

A residual plot displays the fitted values (or the values of a predictor variable) of a regression model along the x-axis and the residuals along the y-axis. This plot is used to assess whether the residuals are scattered randomly around zero and whether they exhibit heteroscedasticity. Heteroscedasticity means that the variance of the residuals is not constant across the range of fitted values. This violates one of the assumptions of ordinary linear regression: the coefficient estimates remain unbiased, but the standard errors, confidence intervals, and hypothesis tests become unreliable. The displayed residual plot shows a clear pattern of heteroscedasticity, as the residuals spread out as the fitted values increase. This indicates that linear regression is inappropriate for this data as it stands, and a different model or a transformation of the data should be used.
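A rough numeric counterpart to eyeballing the residual plot is to compare the spread of the residuals at low and high fitted values. The sketch below is a simplified check in the spirit of a variance-ratio test, not a formal statistical test; the function name `spread_ratio` and the synthetic data are assumptions.

```python
import statistics
from typing import Sequence


def spread_ratio(fitted: Sequence[float], residuals: Sequence[float]) -> float:
    """Variance of residuals in the upper half of fitted values over the lower half.

    A ratio far from 1 suggests the residual variance is not constant.
    """
    pairs = sorted(zip(fitted, residuals))
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[-half:]]
    return statistics.pvariance(high) / statistics.pvariance(low)


fitted = list(range(1, 21))
# Residuals whose size grows with the fitted value (a "fan" shape).
fan = [(0.1 * f) * (1 if f % 2 else -1) for f in fitted]
# Residuals with the same size everywhere.
flat = [1.0 if f % 2 else -1.0 for f in fitted]

print(spread_ratio(fitted, fan) > 1)  # True
print(spread_ratio(fitted, flat))     # 1.0
```

A fan-shaped plot like the one in the question would produce a ratio well above 1, while residuals with constant variance give a ratio close to 1.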

References:

Regression – Amazon Machine Learning

How to Create a Residual Plot by Hand

How to Create a Residual Plot in Python

An ecommerce company sends a weekly email newsletter to all of its customers. Management has hired a team of writers to create additional targeted content. A data scientist needs to identify five customer segments based on age, income, and location. The customers’ current segmentation is unknown. The data scientist previously built an XGBoost model to predict the likelihood of a customer responding to an email based on age, income, and location.

Why does the XGBoost model NOT meet the current requirements, and how can this be fixed?

- A . The XGBoost model provides a true/false binary output. Apply principal component analysis (PCA) with five feature dimensions to predict a segment.

- B . The XGBoost model provides a true/false binary output. Increase the number of classes the XGBoost model predicts to five classes to predict a segment.

- C . The XGBoost model is a supervised machine learning algorithm. Train a k-Nearest-Neighbors (kNN) model with K = 5 on the same dataset to predict a segment.

- D . The XGBoost model is a supervised machine learning algorithm. Train a k-means model with K = 5 on the same dataset to predict a segment.

D

Explanation:

The XGBoost model is a supervised machine learning algorithm, which means it requires labeled data to learn from. The customers’ current segmentation is unknown, so there is no label to train the XGBoost model on. Moreover, the XGBoost model is designed for classification or regression tasks, not for clustering. Clustering is a type of unsupervised machine learning, which means it does not require labeled data. Clustering algorithms try to find natural groups or clusters in the data based on their similarity or distance. A common clustering algorithm is k-means, which partitions the data into K clusters, where each data point belongs to the cluster with the nearest mean. To meet the current requirements, the data scientist should train a k-means model with K = 5 on the same dataset to predict a segment for each customer. This way, the data scientist can identify five customer segments based on age, income, and location, without needing any labels.
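To show what "train a k-means model with K = 5" means mechanically, here is a small pure-Python sketch of Lloyd's algorithm. It is an illustration only, not the SageMaker k-means implementation: the function names, the deterministic farthest-first initialization, and the toy customer data (age, income, and a location index, all assumed to be pre-scaled to the range 0 to 1) are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, ...]


def farthest_first_init(points: List[Point], k: int) -> List[Point]:
    """Pick k starting centroids, each as far as possible from those chosen."""
    centroids = [points[0]]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(nxt)
    return centroids


def kmeans(points: List[Point], k: int, iterations: int = 20):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean update."""
    centroids = farthest_first_init(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids


# Hypothetical customers: (age, income, location index), pre-scaled to 0..1.
customers = [
    (0.10, 0.10, 0.10), (0.12, 0.10, 0.11),
    (0.90, 0.10, 0.10), (0.88, 0.12, 0.10),
    (0.10, 0.90, 0.10), (0.11, 0.88, 0.12),
    (0.10, 0.10, 0.90), (0.10, 0.12, 0.88),
    (0.90, 0.90, 0.90), (0.88, 0.90, 0.91),
]
labels, centroids = kmeans(customers, k=5)
print(labels)
```

No label column is needed at any point; each customer's segment is simply the index of the nearest centroid. Scaling the features first matters in practice, because raw income values would otherwise dominate the distance calculation.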

References:

What is XGBoost? – Amazon SageMaker

What is Clustering? – Amazon SageMaker

K-Means Algorithm – Amazon SageMaker

A retail company wants to build a recommendation system for the company’s website. The system needs to provide recommendations for existing users and needs to base those recommendations on each user’s past browsing history. The system also must filter out any items that the user previously purchased.

Which solution will meet these requirements with the LEAST development effort?

- A . Train a model by using a user-based collaborative filtering algorithm on Amazon SageMaker. Host the model on a SageMaker real-time endpoint. Configure an Amazon API Gateway API and an AWS Lambda function to handle real-time inference requests that the web application sends. Exclude the items that the user previously purchased from the results before sending the results back to the web application.

- B . Use an Amazon Personalize PERSONALIZED_RANKING recipe to train a model. Create a real-time filter to exclude items that the user previously purchased. Create and deploy a campaign on Amazon Personalize. Use the GetPersonalizedRanking API operation to get the real-time recommendations.

- C . Use an Amazon Personalize USER_PERSONALIZATION recipe to train a model. Create a real-time filter to exclude items that the user previously purchased. Create and deploy a campaign on Amazon Personalize. Use the GetRecommendations API operation to get the real-time recommendations.

- D . Train a neural collaborative filtering model on Amazon SageMaker by using GPU instances. Host the model on a SageMaker real-time endpoint. Configure an Amazon API Gateway API and an AWS Lambda function to handle real-time inference requests that the web application sends. Exclude the items that the user previously purchased from the results before sending the results back to the web application.

C

Explanation:

Amazon Personalize is a fully managed machine learning service that makes it easy for developers to create personalized user experiences at scale. It uses the same recommender system technology that Amazon uses to create its own personalized recommendations. Amazon Personalize provides several pre-built recipes that can be used to train models for different use cases. The USER_PERSONALIZATION recipe is designed to provide personalized recommendations for existing users based on their past interactions with items. The PERSONALIZED_RANKING recipe is designed to re-rank a list of items for a user based on their preferences. The USER_PERSONALIZATION recipe is more suitable for this use case because it can generate recommendations for each user without requiring a list of candidate items. To filter out the items that the user previously purchased, a real-time filter can be created and applied to the campaign. A real-time filter is a dynamic filter that uses the latest interaction data to exclude items from the recommendations. By using Amazon Personalize, the development effort is minimized because it handles the data processing, model training, and deployment automatically. The web application can use the GetRecommendations API operation to get the real-time recommendations from the campaign.
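On the application side, the GetRecommendations call described above needs only a campaign, a user ID, and the filter. The sketch below builds the request arguments and extracts item IDs from a response of the documented shape. The helper names, ARNs, user ID, and sample response are hypothetical placeholders; the live call is shown only as a comment because it needs AWS credentials and a deployed campaign.

```python
def build_recommendation_request(
    campaign_arn: str, user_id: str, filter_arn: str, num_results: int = 10
) -> dict:
    """Build keyword arguments for the personalize-runtime get_recommendations call."""
    return {
        "campaignArn": campaign_arn,
        "userId": user_id,
        "filterArn": filter_arn,  # filter that excludes previously purchased items
        "numResults": num_results,
    }


def item_ids(response: dict) -> list:
    """Extract the recommended item IDs, in ranked order."""
    return [item["itemId"] for item in response.get("itemList", [])]


request = build_recommendation_request(
    campaign_arn="arn:aws:personalize:us-east-1:111122223333:campaign/example",
    user_id="user-42",
    filter_arn="arn:aws:personalize:us-east-1:111122223333:filter/exclude-purchased",
    num_results=5,
)

# Hand-written sample in the shape of a GetRecommendations response.
sample_response = {"itemList": [{"itemId": "sku-1"}, {"itemId": "sku-7"}]}
print(item_ids(sample_response))  # ['sku-1', 'sku-7']

# With AWS credentials and a deployed campaign:
#   import boto3
#   runtime = boto3.client("personalize-runtime")
#   response = runtime.get_recommendations(**request)
```

Because the filter is applied by the service, the web application does not have to remove purchased items itself, unlike the custom SageMaker options.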

References:

Amazon Personalize

What is Amazon Personalize?

USER_PERSONALIZATION recipe

PERSONALIZED_RANKING recipe

Filtering recommendations

GetRecommendations API operation