Practice Free DP-420 Exam Online Questions

HOTSPOT

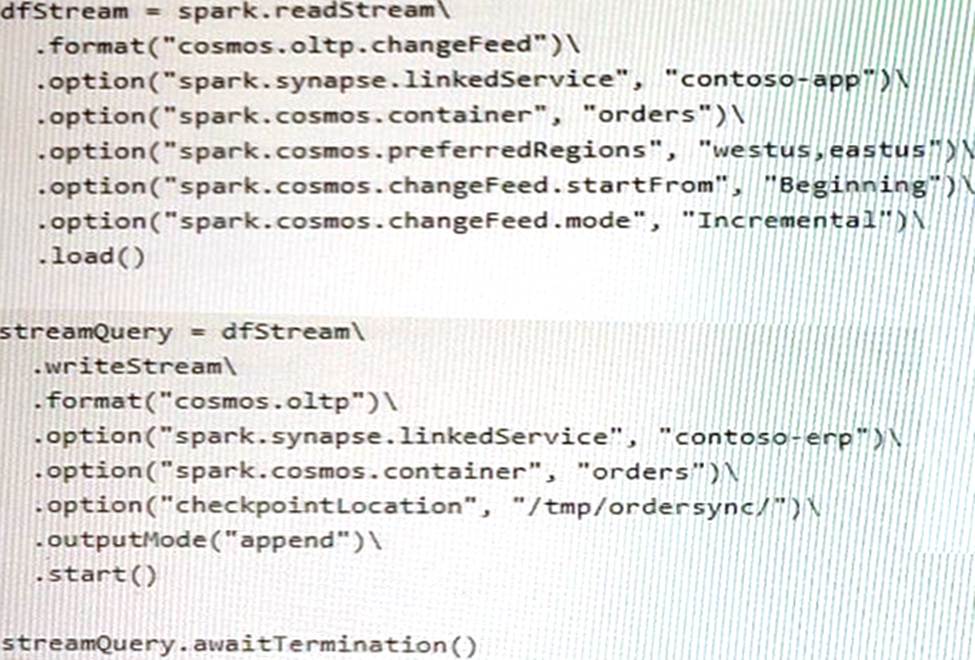

You have an Apache Spark pool in Azure Synapse Analytics that runs the following Python code in a notebook.

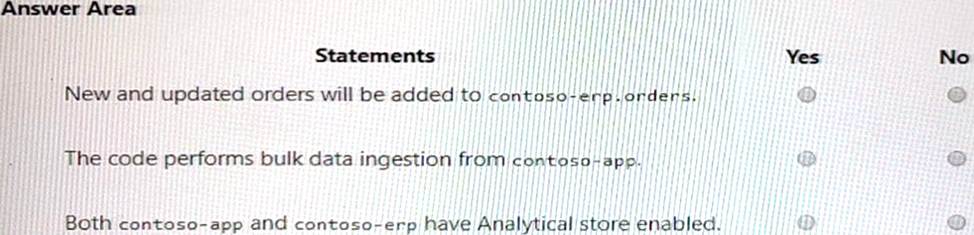

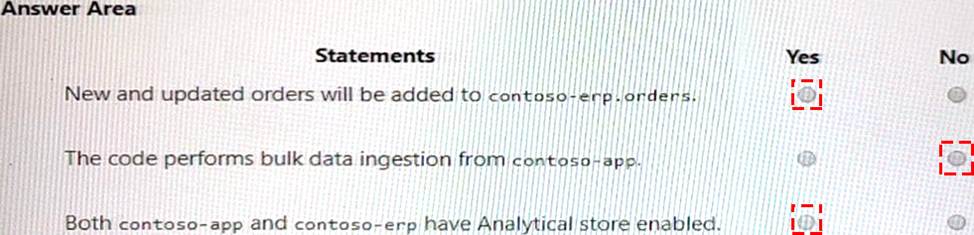

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

New and updated orders will be added to contoso-erp.orders: Yes

The code performs bulk data ingestion from contoso-app: No

Both contoso-app and contoso-erp have analytical store enabled: Yes

The code uses the spark.readStream method to read data from a container named orders in a database named contoso-app. The data is then filtered by a condition and written to another container named orders in a database named contoso-erp by using the DataFrame writeStream method. The output mode is set to “append”, which means that new and updated orders will be added to the destination container.

The code does not perform bulk data ingestion from contoso-app; it performs stream processing. Bulk data ingestion is the process of loading large amounts of data into a data store in batches. Stream processing is the process of continuously processing data as it arrives, in real time.

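Both contoso-app and contoso-erp have analytical store enabled, because both are accessed by the Spark pool. The analytical store is a column-oriented copy of the container data that Azure Synapse Link exposes to Spark and serverless SQL pools without consuming request units from the transactional workload. Note that only reads that use the cosmos.olap format query the analytical store; the cosmos.oltp format reads from and writes to the transactional store and does not by itself require analytical store to be enabled.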

You have an Azure subscription.

You plan to create an Azure Cosmos DB for NoSQL database named DB1 that will store author and book data for authors that have each published up to ten books.

Typical and frequent queries of the data will include:

• All books written by an individual author

• The synopsis of individual books

You need to recommend a data model for DB1.

The solution must meet the following requirements:

• Support transactional updates of the author and book data.

• Minimize read operation costs.

What should you recommend?

- A . Create a single container that stores author items and book items, and then items that represent the relationship between the authors and their books.

- B . Create three containers, one that stores author items, a second that stores book items, and a third that stores items that represent the relationship between the authors and their books.

- C . Create two containers, one that stores author items and another that stores book items. Embed a list of each author’s books in the corresponding author item.

- D . Create a single container that stores author items and book items. Embed a list of each author’s books in the corresponding author item.
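To make option D concrete, the following Python sketch builds hypothetical items for one author in a single container. It assumes a partition key path of /authorId and illustrative property names (id, type, books, title, synopsis) that are not part of the question. Because the author item and its book items share one partition key value, they sit in the same logical partition, which is the scope for transactional batches and stored procedures in Azure Cosmos DB.

```python
# Hypothetical item shapes for a single container partitioned on /authorId.
# All property names are illustrative assumptions, not taken from the question.

def make_author_item(author_id: str, name: str, books: list[dict]) -> dict:
    """Author item with an embedded summary list of the author's books."""
    return {
        "id": author_id,
        "authorId": author_id,  # partition key value
        "type": "author",
        "name": name,
        # Embedded summaries let "all books by an author" be served by one point read.
        "books": [{"id": b["id"], "title": b["title"]} for b in books],
    }

def make_book_item(author_id: str, book: dict) -> dict:
    """Book item holding the full synopsis; shares the author's partition key."""
    return {
        "id": book["id"],
        "authorId": author_id,  # same logical partition as the author item
        "type": "book",
        "title": book["title"],
        "synopsis": book["synopsis"],
    }

books = [
    {"id": "book-1", "title": "First Title", "synopsis": "Synopsis of the first book."},
    {"id": "book-2", "title": "Second Title", "synopsis": "Synopsis of the second book."},
]
author = make_author_item("author-1", "Jane Doe", books)
items = [author] + [make_book_item("author-1", b) for b in books]

# One partition key value for every item, so they can be written in one transactional batch.
assert len({item["authorId"] for item in items}) == 1
print(len(items), [b["title"] for b in author["books"]])
```

With this layout, a synopsis lookup is a point read of a single book item by id and partition key, which is the cheapest read operation in terms of request units.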

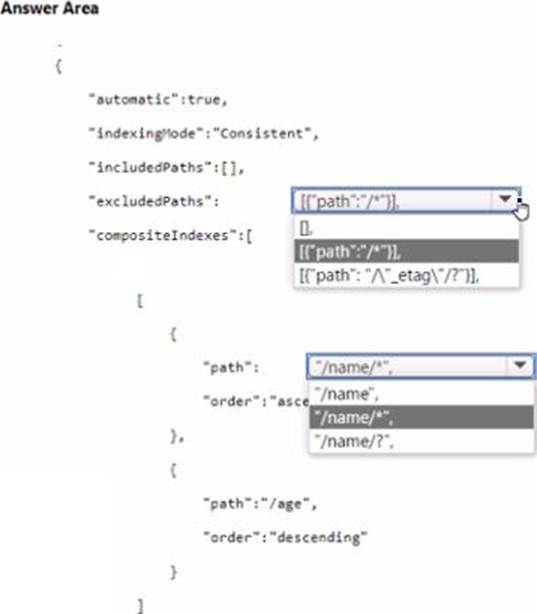

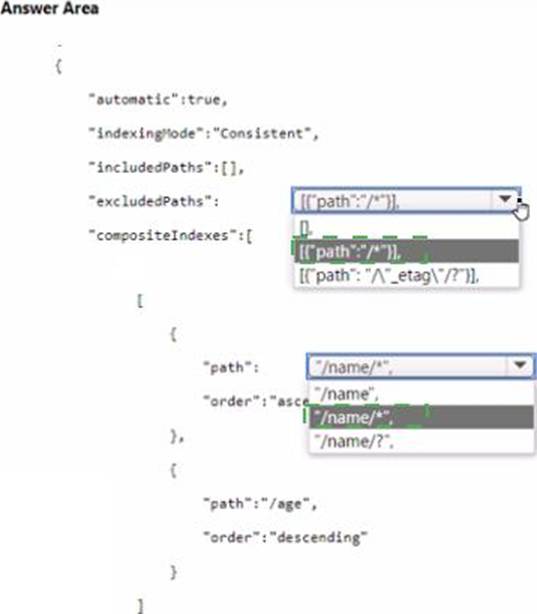

HOTSPOT

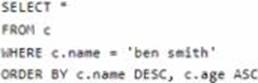

You have an Azure Cosmos DB for NoSQL account.

You plan to create a container named container1. The container1 container will store items that include two properties named name and age.

The most commonly executed queries will query container1 for a specific name.

The following is a sample of the query.

You need to define an opt-in indexing policy for container1.

The solution must meet the following requirements:

• Minimize the number of request units consumed by the queries.

• Ensure that the _etag property is excluded from indexing.

How should you define the indexing policy? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
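An opt-in indexing policy excludes the root path and includes only the paths that queries filter on. The following Python sketch builds such a policy as a dictionary, assuming the filtered property is named name (so the included path /name/? is an assumption tied to that name). The resulting JSON follows the shape Azure Cosmos DB uses for a container indexing policy; excluding /* also leaves system properties such as _etag unindexed.

```python
import json

def opt_in_policy(included_properties: list[str]) -> dict:
    """Build an opt-in indexing policy: exclude everything, include selected scalar paths."""
    return {
        "indexingMode": "consistent",
        "automatic": True,
        # "/<prop>/?" indexes only the scalar value at that path.
        "includedPaths": [{"path": f"/{prop}/?"} for prop in included_properties],
        # Excluding the root path opts every other property out, including _etag.
        "excludedPaths": [{"path": "/*"}],
    }

# Assumes the queried property is named "name", as in the scenario above.
policy = opt_in_policy(["name"])
print(json.dumps(policy, indent=2))
```

Indexing only /name/? keeps index maintenance for writes small while still letting an equality filter on name use the index.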

You need to identify which connectivity mode to use when implementing App2. The solution must support the planned changes and meet the business requirements.

Which connectivity mode should you identify?

- A . Direct mode over HTTPS

- B . Gateway mode (using HTTPS)

- C . Direct mode over TCP

C

Explanation:

Scenario: Develop an app named App2 that will run from the retail stores and query the data in account2. App2 must be limited to a single DNS endpoint when accessing account2.

By using Azure Private Link, you can connect to an Azure Cosmos account via a private endpoint. The private endpoint is a set of private IP addresses in a subnet within your virtual network.

When you’re using Private Link with an Azure Cosmos account through a direct mode connection, you can use only the TCP protocol. The HTTP protocol is not currently supported.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-configure-private-endpoints

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to provide a user named User1 with the ability to insert items into container1 by using role-based access control (RBAC). The solution must use the principle of least privilege.

Which roles should you assign to User1?

- A . CosmosDB Operator only

- B . DocumentDB Account Contributor and Cosmos DB Built-in Data Contributor

- C . DocumentDB Account Contributor only

- D . Cosmos DB Built-in Data Contributor only

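D

Explanation:

Cosmos DB Built-in Data Contributor: A data plane role that can read and write items, including inserting them, within its assigned scope. It grants no management plane permissions, so assigning it alone satisfies the principle of least privilege.

Incorrect Answers:

A: Cosmos DB Operator: Can provision Azure Cosmos accounts, databases, and containers. Cannot access any data or use Data Explorer.

B: DocumentDB Account Contributor can manage Azure Cosmos DB accounts (Azure Cosmos DB was formerly known as DocumentDB). Combined with the data role, it grants management permissions that User1 does not need.

C: DocumentDB Account Contributor: Can manage Azure Cosmos DB accounts, but it is a management plane role and does not grant data plane RBAC permissions to insert items.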

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/role-based-access-control
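Inserting items through RBAC is a data plane operation, and a data plane role assignment pairs a role definition with a scope. Cosmos DB Built-in Data Contributor uses the well-known definition ID 00000000-0000-0000-0000-000000000002, and scoping the assignment to one container narrows it further. The Python sketch below only assembles those values; the database name db1, the principal placeholder, and the dictionary keys are hypothetical, and the actual assignment would be created with a tool such as the Azure CLI (az cosmosdb sql role assignment create).

```python
# Sketch: assemble the values for a least-privilege data plane role assignment.
# "db1", the principal placeholder, and the dict keys below are hypothetical.

# Well-known definition ID of the Cosmos DB Built-in Data Contributor role.
BUILT_IN_DATA_CONTRIBUTOR = "00000000-0000-0000-0000-000000000002"
# Built-in Data Reader, shown for contrast: it can read but not insert items.
BUILT_IN_DATA_READER = "00000000-0000-0000-0000-000000000001"

def container_scope(database: str, container: str) -> str:
    """Account-relative scope that limits an assignment to one container."""
    return f"/dbs/{database}/colls/{container}"

def least_privilege_assignment(principal_id: str, database: str, container: str) -> dict:
    """Pair the writer role with the narrowest scope that still covers the container."""
    return {
        "principalId": principal_id,
        "roleDefinitionGuid": BUILT_IN_DATA_CONTRIBUTOR,
        "scope": container_scope(database, container),
    }

assignment = least_privilege_assignment("<user1-object-id>", "db1", "container1")
print(assignment["scope"])  # /dbs/db1/colls/container1
```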

You need to configure an Apache Kafka instance to ingest data from an Azure Cosmos DB Core (SQL) API account.

The data from a container named telemetry must be added to a Kafka topic named iot.

The solution must store the data in a compact binary format.

Which three configuration items should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector"

- B . "key.converter": "org.apache.kafka.connect.json.JsonConverter"

- C . "key.converter": "io.confluent.connect.avro.AvroConverter"

- D . "connect.cosmos.containers.topicmap": "iot#telemetry"

- E . "connect.cosmos.containers.topicmap": "iot"

- F . "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

A, C, D

Explanation:

C: Avro is a binary format, while JSON is text.

A: Kafka Connect for Azure Cosmos DB includes a source connector and a sink connector. The source connector reads data from Azure Cosmos DB containers by using the change feed and publishes it to Kafka topics, which is the direction this scenario requires.

D: The connect.cosmos.containers.topicmap setting maps Kafka topics to Azure Cosmos DB containers by using the topic#container format, so "iot#telemetry" publishes data from the telemetry container to the iot topic.

Extract:

"connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector",

"key.converter": "io.confluent.connect.avro.AvroConverter",

"connect.cosmos.containers.topicmap": "iot#telemetry"

Incorrect Answers:

B: JSON is plain text, not a compact binary format.

E: "iot" names only the topic and does not map it to the telemetry container.

F: A sink connector exports data from Kafka topics into Azure Cosmos DB, which is the opposite direction. (The sink connector class is com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector.)

Full example of a source connector configuration (values in angle brackets are placeholders):

{

"name": "cosmosdb-source-connector",

"config": {

"connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector",

"tasks.max": "1",

"key.converter": "io.confluent.connect.avro.AvroConverter",

"key.converter.schema.registry.url": "<schema-registry-url>",

"value.converter": "io.confluent.connect.avro.AvroConverter",

"value.converter.schema.registry.url": "<schema-registry-url>",

"connect.cosmos.connection.endpoint": "https://<cosmosinstance-name>.documents.azure.com:443/",

"connect.cosmos.master.key": "<cosmosdbprimarykey>",

"connect.cosmos.databasename": "<database-name>",

"connect.cosmos.containers.topicmap": "iot#telemetry"

}

}

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/kafka-connector-sink

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained/
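The topicmap value is a comma-separated list of topic#container pairs. The following Python sketch parses that format to show how "iot#telemetry" pairs the iot topic with the telemetry container and why a bare "iot" is incomplete; parse_topicmap is a hypothetical helper for illustration, not part of the connector.

```python
def parse_topicmap(value: str) -> dict[str, str]:
    """Hypothetical helper: parse 'topic#container[,topic#container...]' into {topic: container}."""
    mapping: dict[str, str] = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue
        topic, sep, container = pair.partition("#")
        if not sep or not topic or not container:
            # A bare topic such as "iot" names no container to read from.
            raise ValueError(f"expected topic#container, got {pair!r}")
        mapping[topic] = container
    return mapping

print(parse_topicmap("iot#telemetry"))  # {'iot': 'telemetry'}
```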

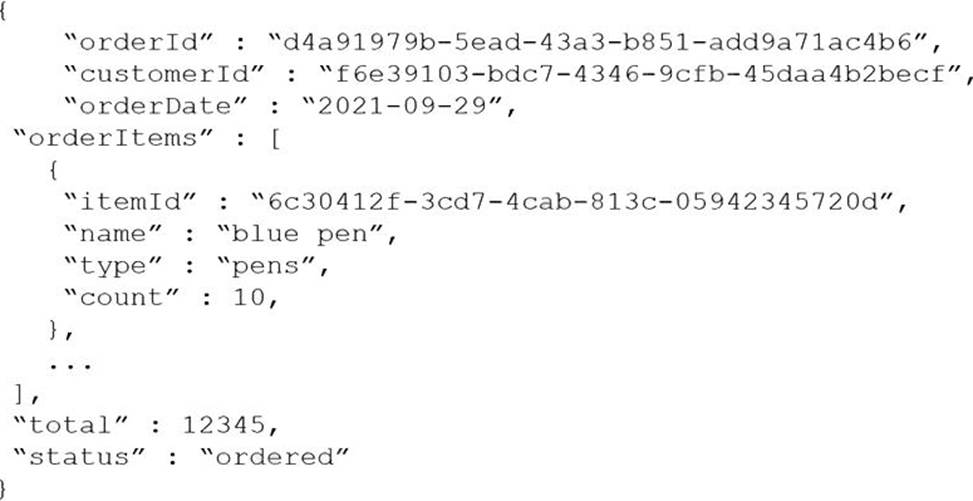

The following is a sample of a document in orders.

The orders container uses customer as the partition key.

You need to provide a report of the total items ordered per month by item type.

The solution must meet the following requirements:

• Ensure that the report can run as quickly as possible.

• Minimize the consumption of request units (RUs).

What should you do?

- A . Configure the report to query orders by using a SQL query.

- B . Configure the report to query a new aggregate container. Populate the aggregates by using the change feed.

- C . Configure the report to query orders by using a SQL query through a dedicated gateway.

- D . Configure the report to query a new aggregate container. Populate the aggregates by using SQL queries that run daily.

B

Explanation:

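You can maintain precomputed aggregates by processing the change feed (for example, with Azure Functions) and writing the results to a separate container that the report queries. This avoids running expensive cross-partition aggregate queries against orders, which is partitioned by customer rather than by month or item type.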

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/change-feed
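The change feed pattern can be sketched in plain Python: each batch of changed orders updates running totals keyed by month and item type, and the report reads those precomputed totals instead of scanning orders. The order shape (orderDate, and items with type and quantity) is a hypothetical stand-in for the sample document, a dictionary stands in for the aggregate container, and the sketch assumes each order arrives once; handling updated orders would require tracking previous quantities, because the change feed delivers the latest version of an item.

```python
from collections import defaultdict

# Running totals: (month, item type) -> quantity. A dict stands in for the
# aggregate container; the order shape below is a hypothetical assumption.
totals: defaultdict[tuple[str, str], int] = defaultdict(int)

def handle_changes(changed_orders: list[dict]) -> None:
    """Fold one change feed batch into the aggregates (assumes each order arrives once)."""
    for order in changed_orders:
        month = order["orderDate"][:7]  # "YYYY-MM" from an ISO 8601 date string
        for item in order["items"]:
            totals[(month, item["type"])] += item["quantity"]

# Two simulated change feed batches.
handle_changes([
    {"customer": "c1", "orderDate": "2024-01-15", "items": [{"type": "book", "quantity": 2}]},
    {"customer": "c2", "orderDate": "2024-01-20",
     "items": [{"type": "pen", "quantity": 5}, {"type": "book", "quantity": 1}]},
])
handle_changes([
    {"customer": "c1", "orderDate": "2024-02-03", "items": [{"type": "book", "quantity": 4}]},
])

for (month, item_type), qty in sorted(totals.items()):
    print(month, item_type, qty)
```

Compared with option D, the change feed keeps the aggregates current as orders arrive instead of waiting for a daily query that must scan every partition.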

Which Azure service is recommended to use with Cosmos DB to send notifications about product updates?

- A . Azure Logic Apps

- B . Azure Functions

- C . Azure Service Bus

- D . Azure Event Grid

You have an Azure Cosmos DB Core (SQL) API account.

You run the following query against a container in the account.

SELECT

IS_NUMBER("1234") AS A,

IS_NUMBER(1234) AS B,

IS_NUMBER({prop: 1234}) AS C

What is the output of the query?

- A . [{"A": false, "B": true, "C": false}]

- B . [{"A": true, "B": false, "C": true}]

- C . [{"A": true, "B": true, "C": false}]

- D . [{"A": true, "B": true, "C": true}]

A

Explanation:

IS_NUMBER returns a Boolean value indicating if the type of the specified expression is a number.

"1234" is a string, not a number, and {prop: 1234} is an object, so only B is true.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-is-number
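A rough Python analogue of the type check helps explain the result: JSON numbers decode to int or float, strings to str, and objects to dict. The is_number function below is a hypothetical illustration of IS_NUMBER semantics, not the query engine's implementation; it excludes bool because Python treats True and False as integers, while JSON does not.

```python
def is_number(value: object) -> bool:
    """Illustrative analogue of IS_NUMBER for JSON-decoded values."""
    # bool is a subclass of int in Python, but JSON true/false are not numbers.
    return isinstance(value, (int, float)) and not isinstance(value, bool)

result = [{"A": is_number("1234"), "B": is_number(1234), "C": is_number({"prop": 1234})}]
print(result)  # [{'A': False, 'B': True, 'C': False}]
```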

You have a container in an Azure Cosmos DB for NoSQL account.

You need to create an alert based on a custom Log Analytics query.

Which signal type should you use?

- A . Metrics

- B . Log

- C . Activity Log

- D . Resource Health