Practice Free DP-203 Exam Online Questions

HOTSPOT

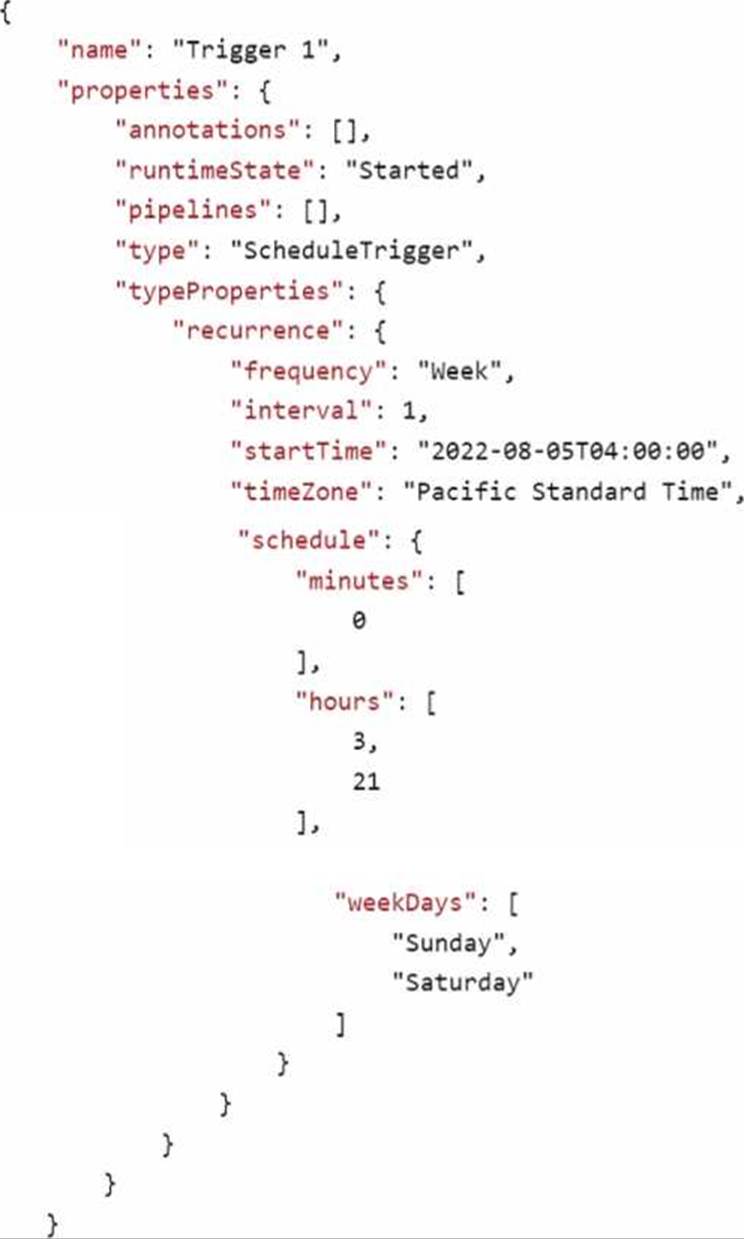

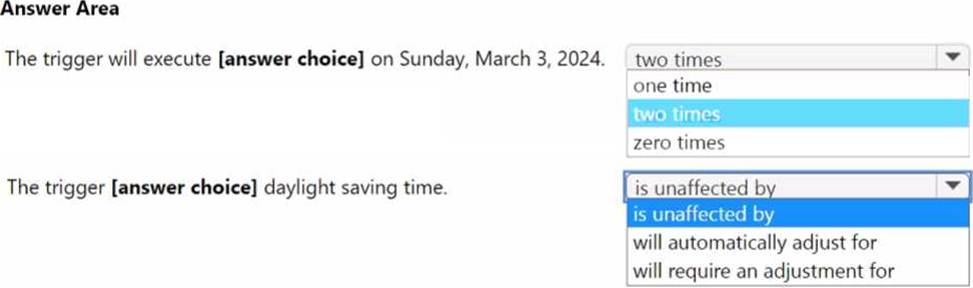

In Azure Data Factory, you have a schedule trigger that is scheduled in Pacific Time.

Pacific Time observes daylight saving time.

The trigger has the following JSON file.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented. NOTE: Each correct selection is worth one point.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB. You plan to copy the data from the storage account to an Azure SQL Data Warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is more than 1 MB.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead, modify the files to ensure that each row is less than 1 MB.

Reference: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
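The row-size check behind this answer can be sketched in Python. This is a minimal sketch, assuming UTF-8 encoded rows and a per-row limit of 1,000,000 bytes; the function name `oversized_rows` and the constant `MAX_ROW_BYTES` are hypothetical and not part of any Azure SDK.

```python
# Assumed limit for illustration; confirm the exact value in the loading guidance.
MAX_ROW_BYTES = 1_000_000


def oversized_rows(lines, limit=MAX_ROW_BYTES):
    """Return the 1-based numbers of rows whose UTF-8 size exceeds limit."""
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.encode("utf-8")) > limit
    ]


if __name__ == "__main__":
    # One header row, one row with a 1.1 MB description, one short row.
    sample = ["id,description", "1," + "x" * 1_100_000, "2,short text"]
    print(oversized_rows(sample))
```

Rows flagged by a check like this would need to be split or trimmed before the load.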

You have an Azure Synapse Analytics dedicated SQL pool.

You need to create a fact table named Table1 that will store sales data from the last three years. The solution must be optimized for the following query operations:

• Show order counts by week.

• Calculate sales totals by region.

• Calculate sales totals by product.

• Find all the orders from a given month.

Which data should you use to partition Table1?

- A . region

- B . product

- C . week

- D . month

D

Explanation:

Table partitions enable you to divide your data into smaller groups of data. In most cases, table partitions are created on a date column.

Benefits to queries

Partitioning can also be used to improve query performance. A query that applies a filter to partitioned data can limit the scan to only the qualifying partitions. This method of filtering can avoid a full table scan and only scan a smaller subset of data. With the introduction of clustered columnstore indexes, the predicate elimination performance benefits are less beneficial, but in some cases there can be a benefit to queries.

For example, if the sales fact table is partitioned into 36 months using the sales date field, then queries that filter on the sale date can skip searching in partitions that don’t match the filter.

Note: Benefits to loads

The primary benefit of partitioning in dedicated SQL pool is to improve the efficiency and performance of loading data by use of partition deletion, switching and merging. In most cases data is partitioned on a date column that is closely tied to the order in which the data is loaded into the SQL pool. One of the greatest benefits of using partitions to maintain data is the avoidance of transaction logging. While simply inserting, updating, or deleting data can be the most straightforward approach, with a little thought and effort, using partitioning during your load process can substantially improve performance.

Reference: https://learn.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-partition
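The partition elimination described above can be illustrated with a small Python model. This is a sketch under stated assumptions, not how the dedicated SQL pool is implemented: rows are plain dictionaries, and the helper names `partition_by_month` and `orders_in_month` are hypothetical.

```python
from collections import defaultdict
from datetime import date


def partition_by_month(rows):
    """Group rows into partitions keyed by (year, month) of the order date."""
    partitions = defaultdict(list)
    for row in rows:
        key = (row["order_date"].year, row["order_date"].month)
        partitions[key].append(row)
    return partitions


def orders_in_month(partitions, year, month):
    """Read only the partition for the requested month; others are not scanned."""
    return partitions.get((year, month), [])


if __name__ == "__main__":
    rows = [
        {"order_id": 1, "order_date": date(2023, 1, 15), "region": "West"},
        {"order_id": 2, "order_date": date(2023, 1, 20), "region": "East"},
        {"order_id": 3, "order_date": date(2023, 2, 3), "region": "West"},
    ]
    parts = partition_by_month(rows)
    print([r["order_id"] for r in orders_in_month(parts, 2023, 1)])
```

A month filter touches a single partition here, whereas a filter on region or product would still have to visit every partition.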


You are creating a new notebook in Azure Databricks that will support R as the primary language but will also support Scala and SQL.

Which switch should you use to switch between languages?

- A . @<Language>

- B . %<Language>

- C . \(<Language>)

- D . \(<Language>)

B

Explanation:

To change the language in Databricks’ cells to either Scala, SQL, Python or R, prefix the cell with ‘%’, followed by the language.

%python //or r, scala, sql

Reference: https://www.theta.co.nz/news-blogs/tech-blog/enhancing-digital-twins-part-3-predictive-maintenance-with-azure-databricks

You are designing an Azure Databricks table. The table will ingest an average of 20 million streaming events per day.

You need to persist the events in the table for use in incremental load pipeline jobs in Azure Databricks. The solution must minimize storage costs and incremental load times.

What should you include in the solution?

- A . Partition by DateTime fields.

- B . Sink to Azure Queue storage.

- C . Include a watermark column.

- D . Use a JSON format for physical data storage.

A

Explanation:

The Databricks ABS-AQS connector uses Azure Queue Storage (AQS) to provide an optimized file source that lets you find new files written to an Azure Blob storage (ABS) container without repeatedly listing all of the files.

This provides two major advantages:

• Lower latency: no need to list nested directory structures on ABS, which is slow and resource intensive.

• Lower costs: no more costly LIST API requests made to ABS.

Reference: https://docs.microsoft.com/en-us/azure/databricks/spark/latest/structured-streaming/aqs

HOTSPOT

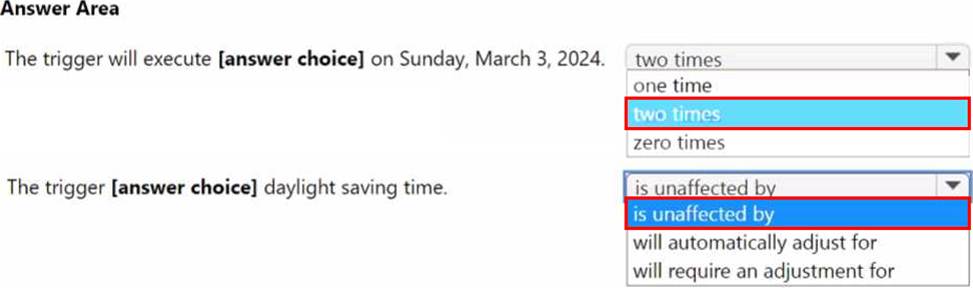

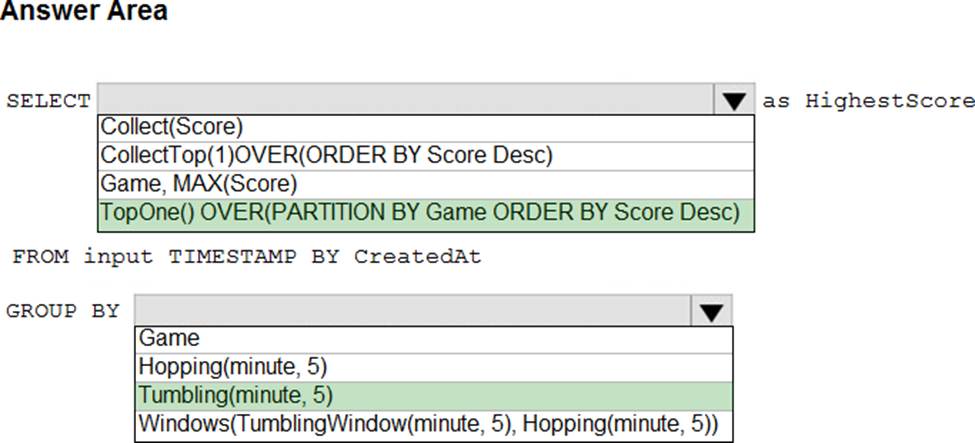

You are building an Azure Stream Analytics job to retrieve game data.

You need to ensure that the job returns the highest scoring record for each five-minute time interval of each game.

How should you complete the Stream Analytics query? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: TopOne OVER (PARTITION BY Game ORDER BY Score Desc)

TopOne returns the top-ranked record, where rank defines the ranking position of the event in the window according to the specified ordering. Ordering/ranking is based on event columns and can be specified in the ORDER BY clause.

Box 2: Hopping(minute,5)

Hopping window functions hop forward in time by a fixed period. It may be easy to think of them as Tumbling windows that can overlap and be emitted more often than the window size. Events can belong to more than one Hopping window result set. To make a Hopping window the same as a Tumbling window, specify the hop size to be the same as the window size.

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/topone-azure-stream-analytics

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions
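The relationship between Hopping and Tumbling windows, and the per-game top-score selection, can be modeled in plain Python. This is a rough sketch, not Stream Analytics itself: timestamps are integer seconds, windows are treated as half-open intervals `[start, start + size)` for simplicity, and the function names `hopping_windows` and `top_score_per_window` are hypothetical.

```python
def hopping_windows(timestamp, size, hop):
    """Return start times of every window [start, start + size) containing timestamp.

    Windows start at non-negative multiples of hop. All values are in seconds.
    """
    starts = []
    start = (timestamp // hop) * hop  # latest window start at or before timestamp
    while start > timestamp - size and start >= 0:
        starts.append(start)
        start -= hop
    return sorted(starts)


def top_score_per_window(events, size, hop):
    """Keep the highest-scoring event for each (game, window start) pair."""
    best = {}
    for event in events:
        for start in hopping_windows(event["time"], size, hop):
            key = (event["game"], start)
            if key not in best or event["score"] > best[key]["score"]:
                best[key] = event
    return best


if __name__ == "__main__":
    events = [
        {"game": "g1", "time": 10, "score": 5},
        {"game": "g1", "time": 200, "score": 9},
        {"game": "g1", "time": 310, "score": 2},
    ]
    # Hop size equal to window size (300 s): each event falls in exactly one window.
    for key, event in sorted(top_score_per_window(events, 300, 300).items()):
        print(key, event["score"])
```

With a hop equal to the window size, every event maps to a single window, which is the Tumbling behavior; a smaller hop makes the same event appear in several overlapping windows.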

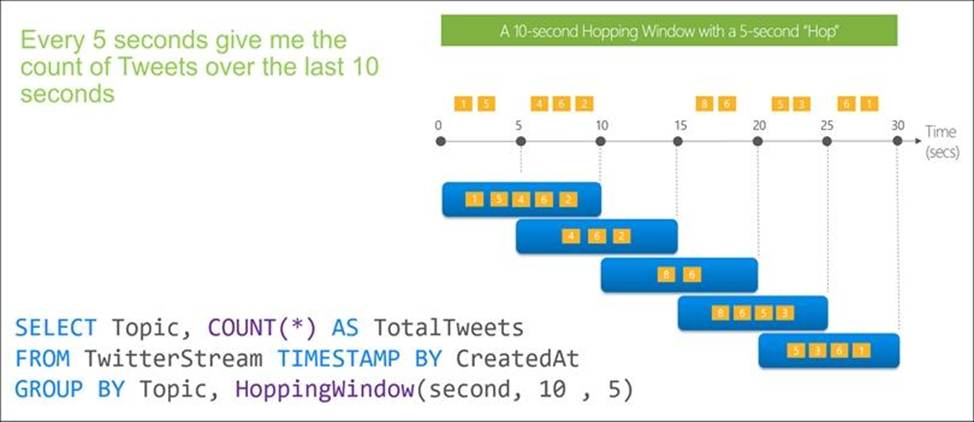

You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account.

You need to output the count of tweets during the last five minutes every five minutes. Each tweet must only be counted once.

Which windowing function should you use?

- A . a five-minute Session window

- B . a five-minute Sliding window

- C . a five-minute Tumbling window

- D . a five-minute Hopping window that has one-minute hop

C

Explanation:

Tumbling window functions are used to segment a data stream into distinct time segments and perform a function against them. The key differentiators of a Tumbling window are that they repeat, do not overlap, and an event cannot belong to more than one tumbling window.

Reference: https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions
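The "counted once" property of a Tumbling window can be shown with a short Python sketch. It assumes timestamps expressed as integer seconds and half-open windows aligned to multiples of the window size; the function name `tumbling_counts` is hypothetical.

```python
from collections import Counter


def tumbling_counts(timestamps, size):
    """Count events per tumbling window; each event lands in exactly one window."""
    return Counter((ts // size) * size for ts in timestamps)


if __name__ == "__main__":
    tweets = [5, 100, 299, 300, 650]  # arrival times in seconds
    counts = tumbling_counts(tweets, 300)  # five-minute windows
    for start in sorted(counts):
        print(start, counts[start])
```

Because each timestamp maps to a single window start, the per-window counts always sum to the total number of events.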

A company purchases IoT devices to monitor manufacturing machinery. The company uses an Azure IoT Hub to communicate with the IoT devices.

The company must be able to monitor the devices in real-time.

You need to design the solution.

What should you recommend?

- A . Azure Stream Analytics Edge application using Microsoft Visual Studio.

- B . Azure Analytics Services using Azure portal

- C . Azure Analysis Services using Microsoft Visual Studio

- D . Azure Data Factory instance using Microsoft Visual Studio

You are designing a security model for an Azure Synapse Analytics dedicated SQL pool that will support multiple companies. You need to ensure that users from each company can view only the data of their respective company.

Which two objects should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A . a custom role-based access control (RBAC) role.

- B . asymmetric keys

- C . a predicate function

- D . a column encryption key

- E . a security policy

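C, E

Explanation:

Row-level security is implemented with an inline table-valued predicate function and a security policy that binds that function to the table as a filter predicate.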

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/security/row-level-security

https://docs.microsoft.com/en-us/azure/synapse-analytics/security/synapse-workspace-access-control-overview