Practice Free DP-203 Exam Online Questions

You plan to create an Azure Synapse Analytics dedicated SQL pool.

You need to minimize the time it takes to identify queries that return confidential information as defined by the company’s data privacy regulations and the users who executed the queries.

Which two components should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . sensitivity-classification labels applied to columns that contain confidential information

- B . resource tags for databases that contain confidential information

- C . audit logs sent to a Log Analytics workspace

- D . dynamic data masking for columns that contain confidential information

AC

Explanation:

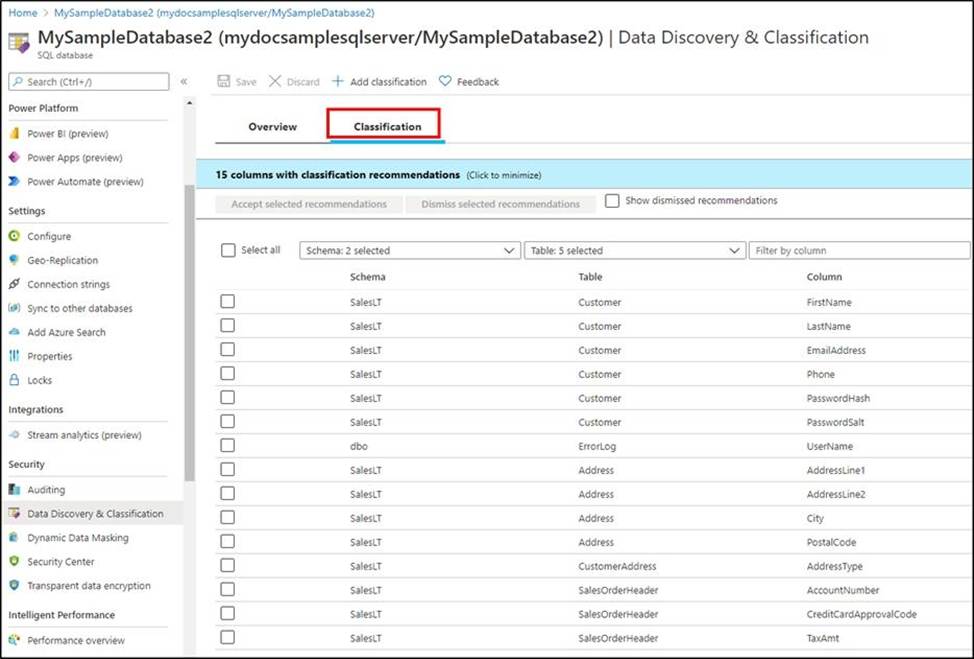

A: You can classify columns manually, as an alternative or in addition to the recommendation-based classification:

✑ Select Add classification in the top menu of the pane.

✑ In the context window that opens, select the schema, table, and column that you want to classify, and the information type and sensitivity label.

✑ Select Add classification at the bottom of the context window.

C: An important aspect of the information-protection paradigm is the ability to monitor access to sensitive data. Azure SQL Auditing has been enhanced to include a new field in the audit log called data_sensitivity_information. This field logs the sensitivity classifications (labels) of the data that was returned by a query.

Here’s an example:
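As a rough illustration of why classification labels in the audit log shorten this kind of investigation, the Python sketch below filters a handful of audit records for queries that returned data labeled Confidential and groups them by user. The record layout (`principal`, `statement`, `labels`) and every value are invented for the sketch. Real audit records carry the labels inside the `data_sensitivity_information` field and would normally be queried in the Log Analytics workspace.

```python
from collections import defaultdict

# Hypothetical, simplified audit records. Real records store the labels
# inside the data_sensitivity_information field of the audit log.
AUDIT_RECORDS = [
    {"principal": "alice", "statement": "SELECT Email FROM dbo.Customer", "labels": ["Confidential"]},
    {"principal": "bob", "statement": "SELECT Region FROM dbo.Sales", "labels": []},
    {"principal": "alice", "statement": "SELECT Name FROM dbo.Product", "labels": ["General"]},
    {"principal": "carol", "statement": "SELECT Salary FROM dbo.Employee", "labels": ["Confidential"]},
]


def confidential_queries_by_user(records, label="Confidential"):
    """Group the statements that returned data carrying `label` by user."""
    result = defaultdict(list)
    for record in records:
        if label in record["labels"]:
            result[record["principal"]].append(record["statement"])
    return dict(result)


report = confidential_queries_by_user(AUDIT_RECORDS)
for user, statements in sorted(report.items()):
    print(user, statements)
```

Without the labels in the log, the same question would require matching every statement against the list of classified columns by hand.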

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/data-discovery-and-classification-overview

You are building a data flow in Azure Data Factory that upserts data into a table in an Azure Synapse Analytics dedicated SQL pool.

You need to add a transformation to the data flow. The transformation must specify logic indicating when a row from the input data must be upserted into the sink.

Which type of transformation should you add to the data flow?

- A . join

- B . select

- C . surrogate key

- D . alter row

D

Explanation:

The alter row transformation allows you to specify insert, update, delete, and upsert policies on rows based on expressions. You can use the alter row transformation to perform upserts on a sink table by matching on a key column and setting the appropriate row policy.
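The idea of a row policy driven by an expression can be sketched in plain Python. This is only a conceptual model, not data flow script: the `is_active` condition, the `id` key column, and the sample rows are all assumptions made for the illustration, and rows that fail the condition are simply skipped here, which is a simplification.

```python
def apply_upserts(sink, rows, key, upsert_condition):
    """Upsert each row that satisfies the condition into a sink keyed by `key`."""
    for row in rows:
        if upsert_condition(row):
            # Overwrites an existing row with the same key, or inserts a new one.
            sink[row[key]] = row
    return sink


# Hypothetical sink table, keyed by the "id" column.
sink_table = {1: {"id": 1, "name": "old", "is_active": True}}

# Hypothetical input rows arriving from the data flow source.
incoming = [
    {"id": 1, "name": "updated", "is_active": True},   # matches an existing key
    {"id": 2, "name": "new", "is_active": True},       # new key
    {"id": 3, "name": "ignored", "is_active": False},  # fails the condition
]

apply_upserts(sink_table, incoming, key="id", upsert_condition=lambda r: r["is_active"])
print(sorted(sink_table))
```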

You have an Azure data solution that contains an enterprise data warehouse in Azure Synapse Analytics named DW1.

Several users execute ad hoc queries to DW1 concurrently.

You regularly perform automated data loads to DW1.

You need to ensure that the automated data loads have enough memory available to complete quickly and successfully when the ad hoc queries run.

What should you do?

- A . Hash distribute the large fact tables in DW1 before performing the automated data loads.

- B . Assign a smaller resource class to the automated data load queries.

- C . Assign a larger resource class to the automated data load queries.

- D . Create sampled statistics for every column in each table of DW1.

C

Explanation:

The performance capacity of a query is determined by the user’s resource class. Resource classes are pre-determined resource limits in Synapse SQL pool that govern compute resources and concurrency for query execution.

Resource classes can help you configure resources for your queries by setting limits on the number of queries that run concurrently and on the compute resources assigned to each query. There’s a trade-off between memory and concurrency.

Smaller resource classes reduce the maximum memory per query, but increase concurrency.

Larger resource classes increase the maximum memory per query, but reduce concurrency.
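The trade-off can be made concrete with a small Python sketch. It assumes a simplified model in which the pool has a fixed number of concurrency slots, each query reserves a number of slots determined by its resource class, and memory is granted in proportion to the slots reserved. The slot total and the three class names are hypothetical values chosen for the illustration, not figures for any particular service level.

```python
TOTAL_SLOTS = 40  # hypothetical number of concurrency slots in the pool

# Hypothetical resource classes and the slots each query reserves.
RESOURCE_CLASSES = {"small": 1, "medium": 4, "large": 8}


def max_concurrent_queries(total_slots: int, slots_per_query: int) -> int:
    """Number of queries that fit at once when each reserves `slots_per_query`."""
    if slots_per_query <= 0:
        raise ValueError("slots_per_query must be positive")
    return total_slots // slots_per_query


def memory_share(total_slots: int, slots_per_query: int) -> float:
    """Fraction of the pool's memory one query gets under this simplified model."""
    return slots_per_query / total_slots


for name, slots in RESOURCE_CLASSES.items():
    concurrency = max_concurrent_queries(TOTAL_SLOTS, slots)
    share = memory_share(TOTAL_SLOTS, slots)
    print(f"{name}: {share:.1%} of memory per query, up to {concurrency} concurrent queries")
```

Under these assumptions, moving the load user from the small class to the large class gives each load eight times the memory while cutting the number of queries that can run at once by the same factor.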

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/resource-classes-for-workload-management

HOTSPOT

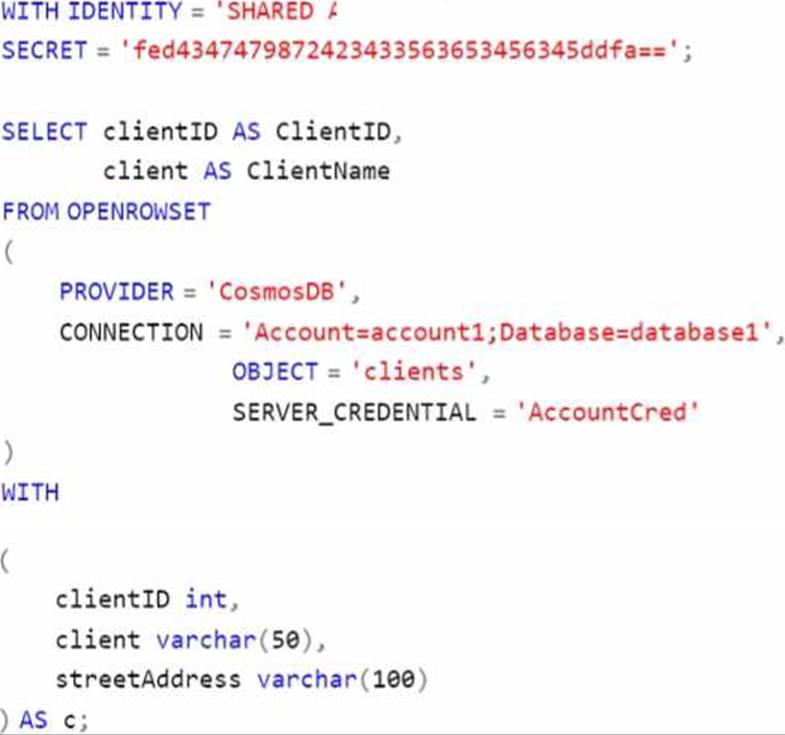

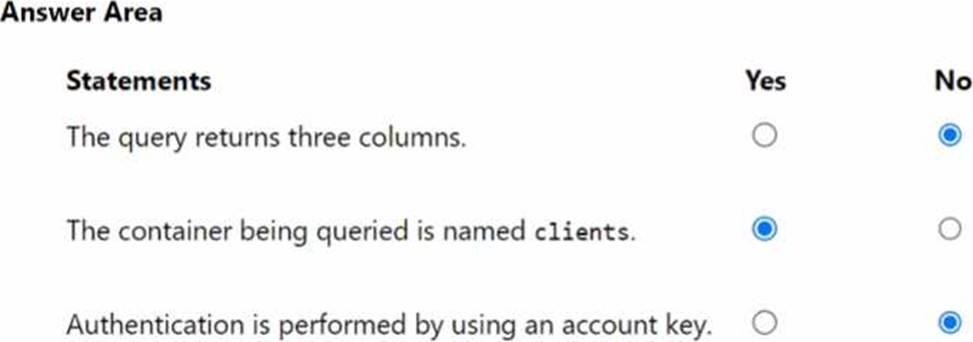

You have an Azure subscription that contains an Azure Cosmos DB analytical store and an Azure Synapse Analytics workspace named WS1. WS1 has a serverless SQL pool named Pool1.

You execute the following query by using Pool1.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

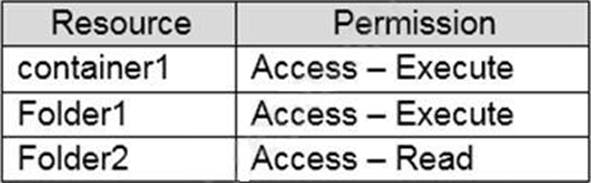

You have an Azure subscription linked to an Azure Active Directory (Azure AD) tenant that contains a service principal named ServicePrincipal1. The subscription contains an Azure Data Lake Storage account named adls1. Adls1 contains a folder named Folder2 that has a URI of https://adls1.dfs.core.windows.net/container1/Folder1/Folder2/.

ServicePrincipal1 has the access control list (ACL) permissions shown in the following table.

You need to ensure that ServicePrincipal1 can perform the following actions:

✑ Traverse child items that are created in Folder2.

✑ Read files that are created in Folder2.

The solution must use the principle of least privilege.

Which two permissions should you grant to ServicePrincipal1 for Folder2? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Access – Read

- B . Access – Write

- C . Access – Execute

- D . Default – Read

- E . Default – Write

- F . Default – Execute

DF

Explanation:

Execute (X) permission is required to traverse the child items of a folder.

There are two kinds of access control lists (ACLs), Access ACLs and Default ACLs.

Access ACLs: These control access to an object. Files and folders both have Access ACLs.

Default ACLs: A "template" of ACLs associated with a folder that determine the Access ACLs for any child items that are created under that folder. Files do not have Default ACLs.
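The difference between the two kinds of ACL can be modeled in a few lines of Python. This is a conceptual sketch only: the `Node` class and `create_child` function are hypothetical, permissions are represented as a set of letters for a single principal, and the model ignores the mask and umask that a real storage account also applies.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A file or folder with the ACL entries held for one principal."""
    name: str
    is_folder: bool
    access_acl: set = field(default_factory=set)
    default_acl: set = field(default_factory=set)


def create_child(parent: Node, name: str, is_folder: bool) -> Node:
    """Create a child whose Access ACL is copied from the parent's Default ACL."""
    child = Node(name, is_folder, access_acl=set(parent.default_acl))
    if is_folder:
        # Child folders also inherit the Default ACL; files have none.
        child.default_acl = set(parent.default_acl)
    return child


# Folder2 with Default Read and Default Execute granted to the principal.
folder2 = Node("Folder2", is_folder=True, default_acl={"r", "x"})

subfolder = create_child(folder2, "Sub", is_folder=True)
data_file = create_child(folder2, "data.csv", is_folder=False)

print(sorted(subfolder.access_acl), sorted(subfolder.default_acl))
print(sorted(data_file.access_acl), sorted(data_file.default_acl))
```

In this model, the Default entries on Folder2 are what give the principal Read and Execute on items created later, which is why the Default permissions matter for child items that do not exist yet.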

Reference: https://docs.microsoft.com/en-us/azure/data-lake-store/data-lake-store-access-control

You are monitoring an Azure Stream Analytics job.

The Backlogged Input Events count has been 20 for the last hour.

You need to reduce the Backlogged Input Events count.

What should you do?

- A . Drop late arriving events from the job.

- B . Add an Azure Storage account to the job.

- C . Increase the streaming units for the job.

- D . Stop the job.

C

Explanation:

General symptoms of the job hitting system resource limits include:

✑ If the backlog event metric keeps increasing, it’s an indicator that the system resource is constrained (either because of output sink throttling, or high CPU).

Note: Backlogged Input Events: Number of input events that are backlogged. A non-zero value for this metric implies that your job isn’t able to keep up with the number of incoming events. If this value is slowly increasing or consistently non-zero, you should scale out your job: adjust Streaming Units.

Reference:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-scale-jobs

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-monitoring

You are designing a highly available Azure Data Lake Storage solution that will include geo-zone-redundant storage (GZRS).

You need to monitor for replication delays that can affect the recovery point objective (RPO).

What should you include in the monitoring solution?

- A . availability

- B . Average Success E2E Latency

- C . 5xx: Server Error errors

- D . Last Sync Time

D

Explanation:

Because geo-replication is asynchronous, it is possible that data written to the primary region has not yet been written to the secondary region at the time an outage occurs. The Last Sync Time property indicates the last time that data from the primary region was written successfully to the secondary region. All writes made to the primary region before the last sync time are available to be read from the secondary location. Writes made to the primary region after the last sync time property may or may not be available for reads yet.
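A short Python sketch shows how the Last Sync Time can be turned into a monitoring check. The timestamps, the 15-minute RPO target, and the function names are hypothetical. In practice, the Last Sync Time value would be read from the storage account rather than hard-coded.

```python
from datetime import datetime, timedelta, timezone

RPO_TARGET = timedelta(minutes=15)  # hypothetical recovery point objective


def replication_lag(last_sync_time: datetime, now: datetime) -> timedelta:
    """How far the secondary region may be behind the primary."""
    return now - last_sync_time


def writes_at_risk(write_times, last_sync_time: datetime):
    """Writes made after the last sync time may not be in the secondary yet."""
    return [t for t in write_times if t > last_sync_time]


# Hypothetical values for illustration.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_sync = datetime(2024, 1, 1, 11, 40, tzinfo=timezone.utc)
writes = [
    datetime(2024, 1, 1, 11, 30, tzinfo=timezone.utc),
    datetime(2024, 1, 1, 11, 45, tzinfo=timezone.utc),
    datetime(2024, 1, 1, 11, 55, tzinfo=timezone.utc),
]

lag = replication_lag(last_sync, now)
at_risk = writes_at_risk(writes, last_sync)
print(f"lag={lag}, exceeds RPO target: {lag > RPO_TARGET}, writes at risk: {len(at_risk)}")
```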

Reference: https://docs.microsoft.com/en-us/azure/storage/common/last-sync-time-get

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to create an Azure Databricks workspace that has a tiered structure.

The workspace will contain the following three workloads:

✑ A workload for data engineers who will use Python and SQL.

✑ A workload for jobs that will run notebooks that use Python, Scala, and SQL.

✑ A workload that data scientists will use to perform ad hoc analysis in Scala and R.

The enterprise architecture team at your company identifies the following standards for Databricks environments:

✑ The data engineers must share a cluster.

✑ The job cluster will be managed by using a request process whereby data scientists and data engineers provide packaged notebooks for deployment to the cluster.

✑ All the data scientists must be assigned their own cluster that terminates automatically after 120 minutes of inactivity. Currently, there are three data scientists.

You need to create the Databricks clusters for the workloads.

Solution: You create a Standard cluster for each data scientist, a Standard cluster for the data engineers, and a High Concurrency cluster for the jobs.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

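The solution assigns the cluster types the wrong way around. The data engineers must share a cluster, so they need a High Concurrency cluster. The jobs run notebooks that use Scala, which High Concurrency clusters do not support, so the jobs need a Standard cluster.

Note: Standard clusters are recommended for a single user. Standard clusters can run workloads developed in any language: Python, R, Scala, and SQL.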

A high concurrency cluster is a managed cloud resource. The key benefits of high concurrency clusters are that they provide Apache Spark-native fine-grained sharing for maximum resource utilization and minimum query latencies.

Reference: https://docs.azuredatabricks.net/clusters/configure.html

You plan to implement an Azure Data Lake Gen2 storage account.

You need to ensure that the data lake will remain available if a data center fails in the primary Azure region.

The solution must minimize costs.

Which type of replication should you use for the storage account?

- A . geo-redundant storage (GRS)

- B . zone-redundant storage (ZRS)

- C . locally-redundant storage (LRS)

- D . geo-zone-redundant storage (GZRS)

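B

Explanation:

Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region, so the data remains available if a data center fails. Locally redundant storage (LRS) is the least expensive replication option, but it copies your data three times within a single physical location in the primary region and therefore does not protect against a data center failure. GRS and GZRS add a copy in a secondary region and cost more than ZRS.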

Reference: https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy

You have an Azure Stream Analytics job that receives clickstream data from an Azure event hub. You need to define a query in the Stream Analytics job.

The query must meet the following requirements:

✑ Count the number of clicks within each 10-second window based on the country of a visitor.

✑ Ensure that each click is NOT counted more than once.

How should you define the query?

- A . SELECT Country, Avg(*) AS Average

FROM ClickStream TIMESTAMP BY CreatedAt

GROUP BY Country, SlidingWindow(second, 10)

- B . SELECT Country, Count(*) AS Count

FROM ClickStream TIMESTAMP BY CreatedAt

GROUP BY Country, TumblingWindow(second, 10)

- C . SELECT Country, Avg(*) AS Average

FROM ClickStream TIMESTAMP BY CreatedAt

GROUP BY Country, HoppingWindow(second, 10, 2)

- D . SELECT Country, Count(*) AS Count

FROM ClickStream TIMESTAMP BY CreatedAt

GROUP BY Country, SessionWindow(second, 5, 10)

B

Explanation:

Tumbling window functions are used to segment a data stream into distinct time segments and perform a function against them, such as the example below. The key differentiators of tumbling windows are that they repeat, they do not overlap, and an event cannot belong to more than one tumbling window.

Example:
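The non-overlapping behavior can be sketched in Python as a rough model of what option B computes. The click data is invented, timestamps are plain seconds, and the sketch assigns events to half-open buckets of the form [start, start + 10) for simplicity, which may not match how Stream Analytics treats an event that falls exactly on a window boundary.

```python
from collections import Counter

WINDOW_SECONDS = 10


def tumbling_counts(clicks, window_seconds=WINDOW_SECONDS):
    """Count clicks per (window start, country); each click lands in one window."""
    counts = Counter()
    for timestamp, country in clicks:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, country)] += 1
    return counts


# Hypothetical clickstream: (seconds since start, country).
CLICKS = [(1, "US"), (4, "US"), (9, "DE"), (10, "US"), (12, "DE"), (19, "DE"), (21, "US")]

counts = tumbling_counts(CLICKS)
for (window_start, country), n in sorted(counts.items()):
    print(f"window starting at {window_start}s, {country}: {n}")
```

Because every click maps to exactly one bucket, the counts always add up to the number of clicks. Overlapping windows, such as hopping or sliding windows, would not give that guarantee.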

Reference: https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-window-functions