Practice Free DP-203 Exam Online Questions

You are designing the folder structure for an Azure Data Lake Storage Gen2 container.

Users will query data by using a variety of services including Azure Databricks and Azure Synapse Analytics serverless SQL pools. The data will be secured by subject area. Most queries will include data from the current year or current month.

Which folder structure should you recommend to support fast queries and simplified folder security?

- A . /{SubjectArea}/{DataSource}/{DD}/{MM}/{YYYY}/{FileData}_{YYYY}_{MM}_{DD}.csv

- B . /{DD}/{MM}/{YYYY}/{SubjectArea}/{DataSource}/{FileData}_{YYYY}_{MM}_{DD}.csv

- C . /{YYYY}/{MM}/{DD}/{SubjectArea}/{DataSource}/{FileData}_{YYYY}_{MM}_{DD}.csv

- D . /{SubjectArea}/{DataSource}/{YYYY}/{MM}/{DD}/{FileData}_{YYYY}_{MM}_{DD}.csv

D

Explanation:

There’s an important reason to put the date at the end of the directory structure: you can lock down regions or subject areas to specific users or groups by setting POSIX permissions once on the parent folder. With the date structure in front, restricting a security group to, for example, just the UK data or certain planes would require a separate permission on numerous directories under every hour directory. Putting the date first would also multiply the number of directories to secure as time went on. Option D puts {SubjectArea} first for security and orders the date parts as {YYYY}/{MM}/{DD}, so a query for the current year or month can be limited to a single folder prefix.

Note: In IoT workloads, there can be a great deal of data being landed in the data store that spans across numerous products, devices, organizations, and customers. It’s important to pre-plan the directory layout for organization, security, and efficient processing of the data for down-stream consumers. A general template to consider might be the following layout:

{Region}/{SubjectMatter(s)}/{yyyy}/{mm}/{dd}/{hh}/
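To make the layout in option D concrete, here is a minimal Python sketch that builds a file path and a month-level prefix in that shape. The function names and the sample values (`Sales`, `CRM`, `Orders`) are hypothetical and only illustrate the pattern.

```python
from datetime import date


def build_path(subject_area: str, data_source: str, file_data: str, day: date) -> str:
    """Build /{SubjectArea}/{DataSource}/{YYYY}/{MM}/{DD}/{FileData}_{YYYY}_{MM}_{DD}.csv."""
    return (
        f"/{subject_area}/{data_source}/"
        f"{day:%Y}/{day:%m}/{day:%d}/"
        f"{file_data}_{day:%Y}_{day:%m}_{day:%d}.csv"
    )


def month_prefix(subject_area: str, data_source: str, day: date) -> str:
    """Folder prefix that covers one month of one data source."""
    return f"/{subject_area}/{data_source}/{day:%Y}/{day:%m}/"


# Hypothetical sample values, for illustration only.
example_path = build_path("Sales", "CRM", "Orders", date(2024, 3, 7))
example_prefix = month_prefix("Sales", "CRM", date(2024, 3, 7))
```

Because the subject area is the top-level folder, one access control entry on `/Sales` covers every date folder beneath it, and a query for the current month only needs to read under the month prefix.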

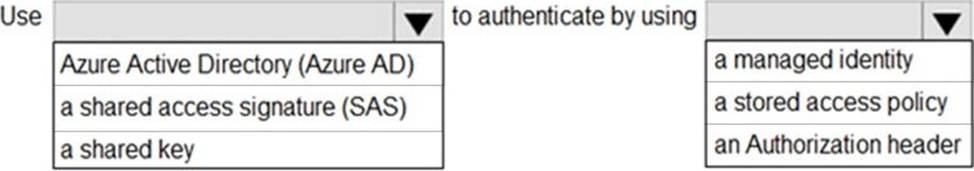

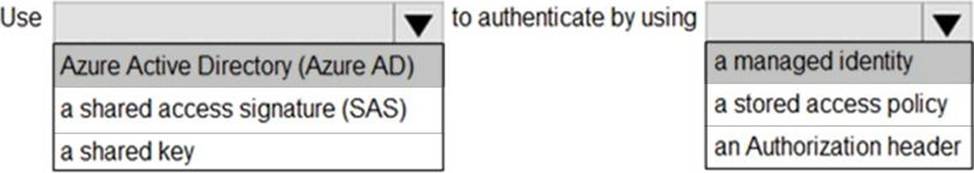

HOTSPOT

You have an Azure subscription that contains an Azure Data Lake Storage account. The storage account contains a data lake named DataLake1.

You plan to use an Azure data factory to ingest data from a folder in DataLake1, transform the data, and land the data in another folder.

You need to ensure that the data factory can read and write data from any folder in the DataLake1 file system.

The solution must meet the following requirements:

✑ Minimize the risk of unauthorized user access.

✑ Use the principle of least privilege.

✑ Minimize maintenance effort.

How should you configure access to the storage account for the data factory? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure Active Directory (Azure AD)

On Azure, managed identities eliminate the need for developers to manage credentials: the Azure resource is given an identity in Azure Active Directory (Azure AD), and that identity is used to obtain Azure AD tokens.

Box 2: a managed identity

A data factory can be associated with a managed identity for Azure resources, which represents this specific data factory. You can directly use this managed identity for Data Lake Storage Gen2 authentication, similar to using your own service principal. It allows this designated factory to access and copy data to or from your Data Lake Storage Gen2.

Note: The Azure Data Lake Storage Gen2 connector supports the following authentication types.

✑ Account key authentication

✑ Service principal authentication

✑ Managed identities for Azure resources authentication
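As an illustration of the managed identity option, the sketch below builds an Azure Data Lake Storage Gen2 linked service definition as a Python dictionary. With the factory's system-assigned managed identity, the definition carries only the endpoint URL and no key or secret. The linked service name and the storage account name are placeholders, and the identity would still need a suitable role or ACLs on the storage account.

```python
import json


def adls_gen2_linked_service(name: str, account_name: str) -> dict:
    """Linked service definition that relies on the factory's managed identity.

    No account key or service principal secret is stored in the definition.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobFS",
            "typeProperties": {
                "url": f"https://{account_name}.dfs.core.windows.net"
            },
        },
    }


# Placeholder names, not taken from the question.
linked_service = adls_gen2_linked_service("DataLake1LinkedService", "examplestorageacct")
linked_service_json = json.dumps(linked_service, indent=2)
```

Keeping credentials out of the definition is what reduces both the maintenance effort and the risk of a leaked key.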

Reference:

https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/overview

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytics dedicated SQL pool. The CSV file contains columns named username, comment and date.

The data flow already contains the following:

• A source transformation

• A Derived Column transformation to set the appropriate types of data

• A sink transformation to land the data in the pool

You need to ensure that the data flow meets the following requirements:

• All valid rows must be written to the destination table.

• Truncation errors in the comment column must be avoided proactively.

• Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Add a select transformation that selects only the rows which will cause truncation errors.

- B . Add a sink transformation that writes the rows to a file in blob storage.

- C . Add a filter transformation that filters out rows which will cause truncation errors.

- D . Add a Conditional Split transformation that separates the rows which will cause truncation errors.

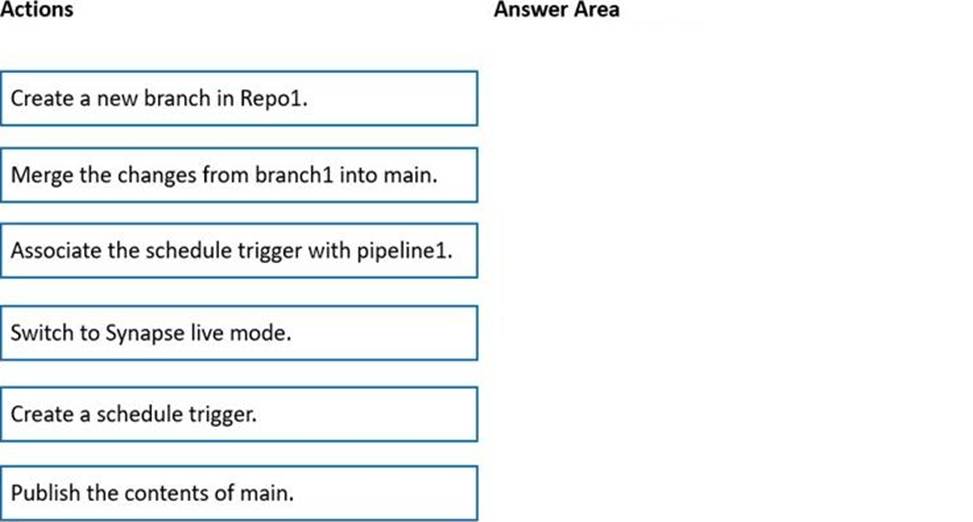

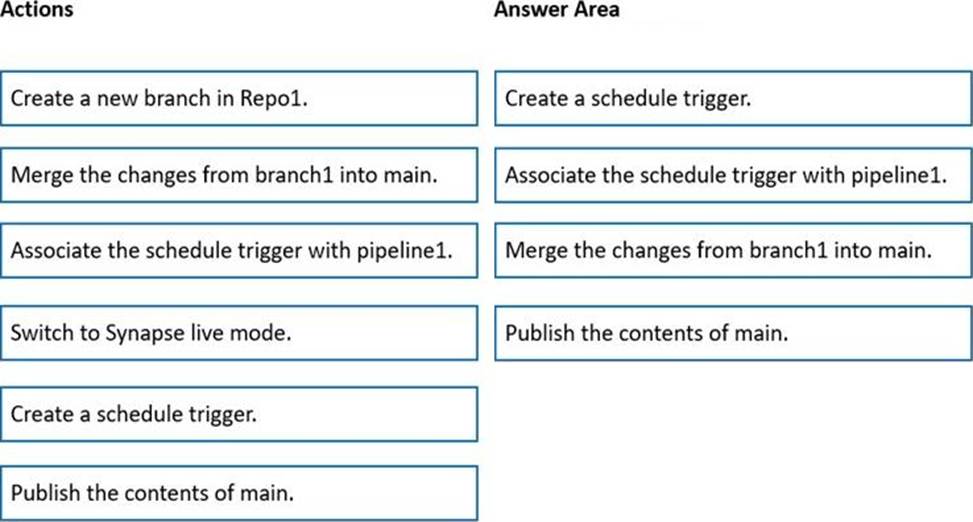

DRAG DROP

You have an Azure subscription that contains an Azure Synapse Analytics workspace named workspace1. Workspace1 connects to an Azure DevOps repository named repo1. Repo1 contains a collaboration branch named main and a development branch named branch1. Branch1 contains an Azure Synapse pipeline named pipeline1.

In workspace1, you complete testing of pipeline1.

You need to schedule pipeline1 to run daily at 6 AM.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.
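For reference, a daily 6 AM schedule can be expressed as a schedule trigger definition. The Python sketch below builds one as a dictionary; the trigger name, start time, and time zone are assumptions chosen for illustration, and the sketch does not cover the branch and publish steps the question asks about.

```python
def daily_schedule_trigger(pipeline_name: str, hour: int, start_time: str,
                           time_zone: str = "UTC") -> dict:
    """Schedule trigger that runs one pipeline once a day at the given hour."""
    return {
        "name": f"Daily_{pipeline_name}",  # hypothetical naming scheme
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    "startTime": start_time,
                    "timeZone": time_zone,
                    "schedule": {"hours": [hour], "minutes": [0]},
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    }
                }
            ],
        },
    }


# The start time is an arbitrary example value.
trigger = daily_schedule_trigger("pipeline1", hour=6, start_time="2024-01-01T00:00:00Z")
```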

You are designing an Azure Databricks interactive cluster. The cluster will be used infrequently and will be configured for auto-termination.

You need to ensure that the cluster configuration is retained indefinitely after the cluster is terminated. The solution must minimize costs.

What should you do?

- A . Clone the cluster after it is terminated.

- B . Terminate the cluster manually when processing completes.

- C . Create an Azure runbook that starts the cluster every 90 days.

- D . Pin the cluster.

D

Explanation:

To keep an interactive cluster configuration even after it has been terminated for more than 30 days, an administrator can pin a cluster to the cluster list.

Reference: https://docs.azuredatabricks.net/clusters/clusters-manage.html#automatic-termination
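Pinning can also be done through the Databricks Clusters REST API. The sketch below only builds the HTTP request with the Python standard library and does not send it. The workspace URL, token, and cluster ID are placeholders, and the `/api/2.0/clusters/pin` path reflects the 2.0 Clusters API, so check it against the API version your workspace uses.

```python
import json
from urllib.request import Request


def build_pin_request(workspace_url: str, token: str, cluster_id: str) -> Request:
    """Build (but do not send) a request that pins a cluster."""
    body = json.dumps({"cluster_id": cluster_id}).encode("utf-8")
    return Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/clusters/pin",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; sending would be urllib.request.urlopen(pin_request).
pin_request = build_pin_request(
    "https://example-workspace.azuredatabricks.net/", "example-token", "example-cluster-id"
)
```

A pinned, terminated cluster incurs no compute cost, which is why pinning satisfies the cost requirement where a runbook that restarts the cluster would not.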

You are designing an anomaly detection solution for streaming data from an Azure IoT hub.

The solution must meet the following requirements:

✑ Send the output to Azure Synapse.

✑ Identify spikes and dips in time series data.

✑ Minimize development and configuration effort.

Which should you include in the solution?

- A . Azure Databricks

- B . Azure Stream Analytics

- C . Azure SQL Database

B

Explanation:

You can identify anomalies by routing data via IoT Hub to a built-in ML model in Azure Stream Analytics.

Reference: https://docs.microsoft.com/en-us/learn/modules/data-anomaly-detection-using-azure-iot-hub/
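Stream Analytics exposes this capability through built-in functions such as `AnomalyDetection_SpikeAndDip`, so no model has to be written. To show what "spikes and dips" means, here is a small Python sketch that uses a rolling z-score. This is a deliberately simple stand-in, not the algorithm Stream Analytics uses, and the window size, threshold, and sample readings are arbitrary assumptions.

```python
from statistics import mean, pstdev


def spikes_and_dips(values, window=5, threshold=3.0, min_sigma=1.0):
    """Flag points that sit far above or below the mean of the preceding window.

    Returns a list of (index, "spike" | "dip") tuples.
    min_sigma keeps a very flat history from producing huge scores.
    """
    found = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = max(pstdev(history), min_sigma)
        score = (values[i] - mu) / sigma
        if score > threshold:
            found.append((i, "spike"))
        elif score < -threshold:
            found.append((i, "dip"))
    return found


# Hypothetical temperature readings with one spike and one dip.
readings = [20, 21, 20, 19, 20, 20, 45, 20, 21, 20, 19, 20, 2, 20]
anomalies = spikes_and_dips(readings)
```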

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytics dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

AB

Explanation:

B: Example:
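As a rough illustration of what the Conditional Split and the extra sink do together, the plain-Python sketch below routes rows into two streams by comment length. It is not data flow script, and `MAX_COMMENT_LENGTH` is a hypothetical column width standing in for the real size of the destination column.

```python
MAX_COMMENT_LENGTH = 100  # hypothetical width of the destination comment column


def conditional_split(rows, max_len=MAX_COMMENT_LENGTH):
    """Split rows into (valid, too_long) based on the length of the comment value."""
    valid, too_long = [], []
    for row in rows:
        comment = row.get("comment") or ""
        if len(comment) > max_len:
            too_long.append(row)   # would go to the blob storage sink
        else:
            valid.append(row)      # would go to the dedicated SQL pool sink
    return valid, too_long


# Hypothetical sample rows.
sample_rows = [
    {"username": "ana", "comment": "short comment", "date": "2024-01-01"},
    {"username": "raj", "comment": "x" * 150, "date": "2024-01-02"},
    {"username": "li", "comment": None, "date": "2024-01-03"},
]
valid_rows, rejected_rows = conditional_split(sample_rows)
```

A filter transformation alone would drop the long rows rather than keep them, which is why the split plus a second sink is needed to satisfy all three requirements.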

You need to integrate the on-premises data sources and Azure Synapse Analytics. The solution must meet the data integration requirements.

Which type of integration runtime should you use?

- A . Azure-SSIS integration runtime

- B . self-hosted integration runtime

- C . Azure integration runtime

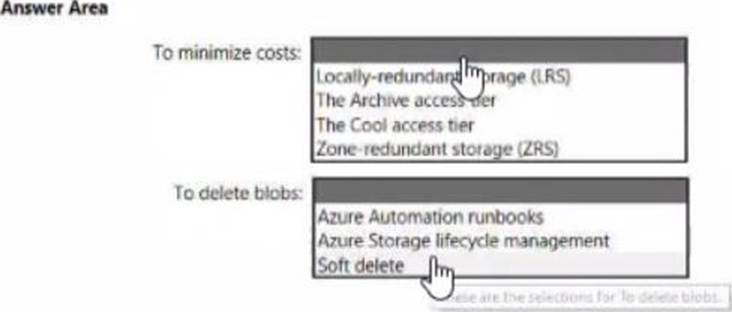

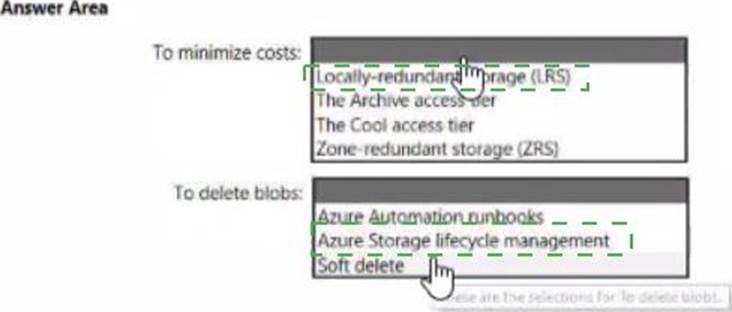

HOTSPOT

You have an Azure subscription.

You need to deploy an Azure Data Lake Storage Gen2 Premium account.

The solution must meet the following requirements:

• Blobs that are older than 365 days must be deleted.

• Administrator efforts must be minimized.

• Costs must be minimized

What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

https://learn.microsoft.com/en-us/azure/storage/blobs/premium-tier-for-data-lake-storage
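One low-effort way to meet the deletion requirement is a blob lifecycle management rule. The Python sketch below builds such a policy as a dictionary in the shape of the lifecycle management policy JSON. The rule name is a placeholder, and basing the age on last modification time is an assumption about how "older than 365 days" should be measured.

```python
import json


def delete_after_days_policy(rule_name: str, days: int) -> dict:
    """Lifecycle policy with one rule that deletes block blobs after `days` days."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": rule_name,
                "type": "Lifecycle",
                "definition": {
                    "actions": {
                        "baseBlob": {
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                    "filters": {"blobTypes": ["blockBlob"]},
                },
            }
        ]
    }


# Placeholder rule name.
policy = delete_after_days_policy("delete-older-than-365-days", 365)
policy_json = json.dumps(policy, indent=2)
```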

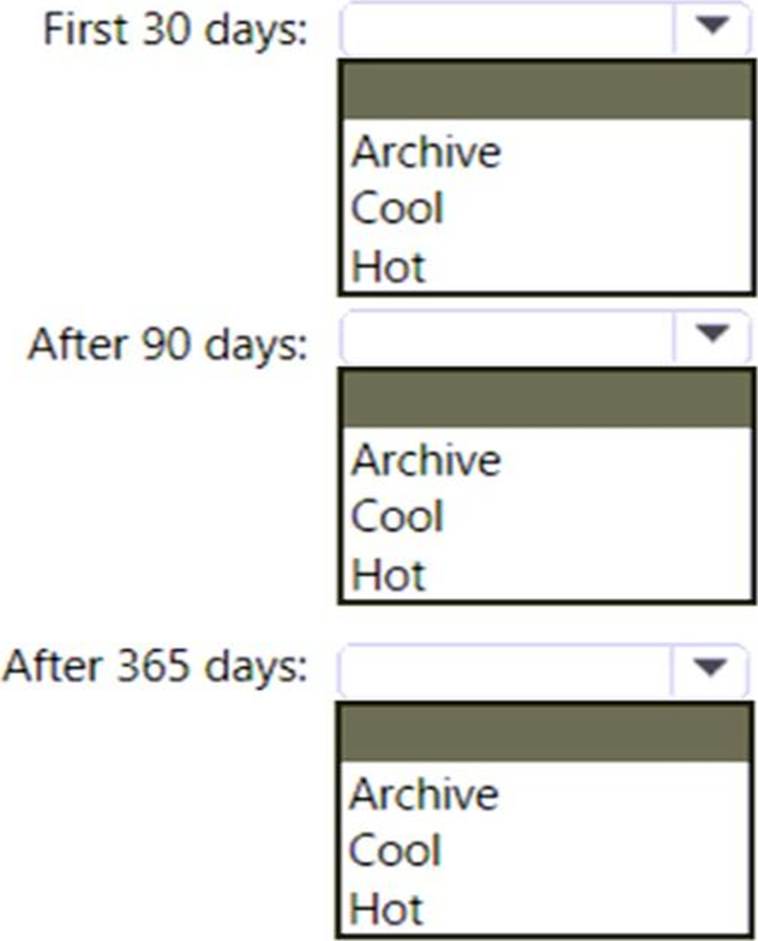

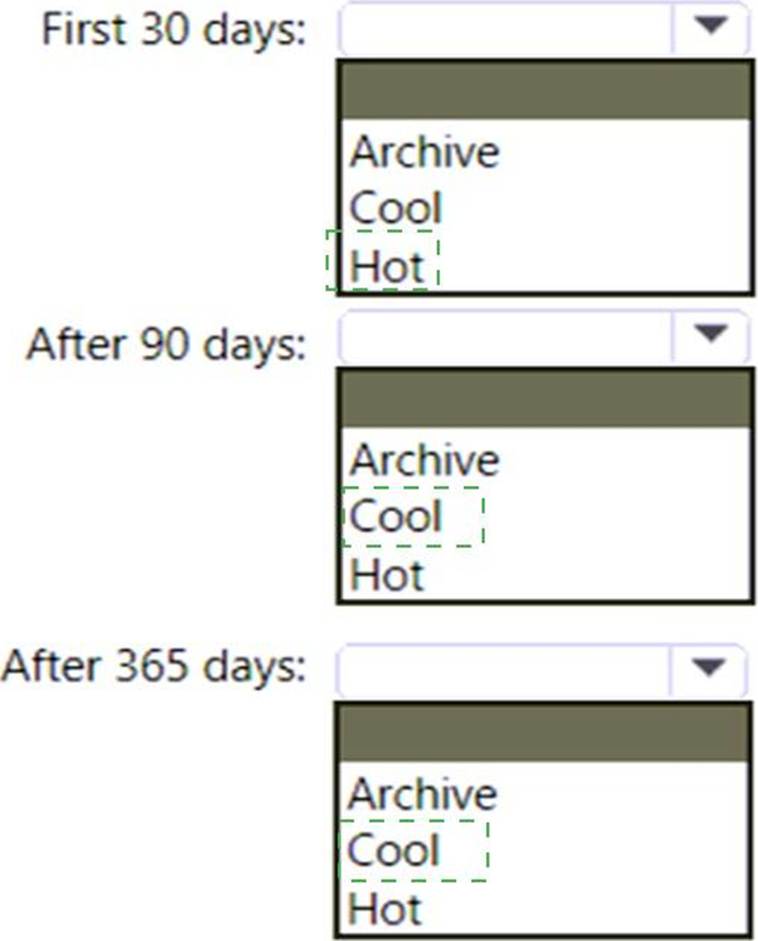

HOTSPOT

You are designing an application that will use an Azure Data Lake Storage Gen2 account to store petabytes of license plate photos from toll booths. The account will use zone-redundant storage (ZRS).

You identify the following usage patterns:

• The data will be accessed several times a day during the first 30 days after the data is created. The data must meet an availability SLA of 99.9%.

• After 90 days, the data will be accessed infrequently but must be available within 30 seconds.

• After 365 days, the data will be accessed infrequently but must be available within five minutes.

Explanation:

Box 1: Hot

The data will be accessed several times a day during the first 30 days after the data is created. The data must meet an availability SLA of 99.9%.

Box 2: Cool

After 90 days, the data will be accessed infrequently but must be available within 30 seconds.

Data in the Cool tier should be stored for a minimum of 30 days.

When your data is stored in an online access tier (either Hot or Cool), users can access it immediately. The Hot tier is the best choice for data that is in active use, while the Cool tier is ideal for data that is accessed less frequently, but that still must be available for reading and writing.

Box 3: Cool

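After 365 days, the data will be accessed infrequently but must be available within five minutes. The Archive tier is an offline tier whose rehydration can take hours, so it cannot meet a five-minute requirement. It is also not supported on ZRS accounts. The data therefore stays in the Cool tier.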

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/access-tiers-overview

https://docs.microsoft.com/en-us/azure/storage/blobs/archive-rehydrate-overview
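The reasoning behind the three boxes can be sketched as a small Python helper that picks the cheapest tier whose retrieval wait fits the requirement. The latency figures are illustrative assumptions: zero for the online tiers, and a 15-hour placeholder standing in for "Archive rehydration takes hours". The function and constant names are hypothetical.

```python
# Illustrative worst-case wait before data can be read, in seconds.
TIER_WAIT_SECONDS = {"Hot": 0, "Cool": 0, "Archive": 15 * 3600}

# Tiers ordered from cheapest to most expensive storage cost.
CHEAPEST_FIRST = ["Archive", "Cool", "Hot"]


def choose_tier(max_wait_seconds: int, accessed_frequently: bool) -> str:
    """Pick Hot for frequently read data, else the cheapest tier that is fast enough."""
    if accessed_frequently:
        return "Hot"
    for tier in CHEAPEST_FIRST:
        if TIER_WAIT_SECONDS[tier] <= max_wait_seconds:
            return tier
    return "Hot"  # fallback; not reached with the values above


first_30_days = choose_tier(max_wait_seconds=0, accessed_frequently=True)
after_90_days = choose_tier(max_wait_seconds=30, accessed_frequently=False)
after_365_days = choose_tier(max_wait_seconds=5 * 60, accessed_frequently=False)
```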