Practice Free DP-203 Exam Online Questions

You have an Azure Synapse Analytics dedicated SQL pool.

You plan to create a fact table named Table1 that will contain a clustered columnstore index.

You need to optimize data compression and query performance for Table1.

What is the minimum number of rows that Table1 should contain before you create partitions?

- A . 100,000

- B . 600,000

- C . 1 million

- D . 60 million
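The arithmetic behind this question can be sketched as follows. A dedicated SQL pool spreads every table across 60 distributions, and Microsoft's guidance for clustered columnstore tables is roughly 1 million rows per distribution per partition so that rowgroups compress well. The function name and the rounded 1,000,000 target are illustrative assumptions, not part of the question.

```python
# A dedicated SQL pool always shards a table into 60 distributions.
DISTRIBUTIONS = 60
# Assumed target from the columnstore guidance: about 1 million rows
# per distribution per partition (a full rowgroup holds 1,048,576 rows).
ROWS_PER_DISTRIBUTION_PER_PARTITION = 1_000_000


def min_rows_for_partitions(partition_count: int) -> int:
    """Rows a table needs so each partition can fill rowgroups in every distribution."""
    return DISTRIBUTIONS * ROWS_PER_DISTRIBUTION_PER_PARTITION * partition_count


if __name__ == "__main__":
    print(min_rows_for_partitions(1))
    print(min_rows_for_partitions(12))
```

Under these assumptions a single partition already calls for 60 million rows, and every additional partition multiplies that figure.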

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a table named table1.

You load 5 TB of data into table1.

You need to ensure that column store compression is maximized for table1.

Which statement should you execute?

- A . ALTER INDEX ALL ON table1 REBUILD

- B . DBCC DBREINDEX (table1)

- C . DBCC INDEXDEFRAG (pool1, table1)

- D . ALTER INDEX ALL ON table1 REORGANIZE

You have an Azure data factory that connects to a Microsoft Purview account. The data factory is registered in Microsoft Purview.

You update a Data Factory pipeline.

You need to ensure that the updated lineage is available in Microsoft Purview.

What should you do first?

- A . Disconnect the Microsoft Purview account from the data factory.

- B . Locate the related asset in the Microsoft Purview portal.

- C . Execute an Azure DevOps build pipeline.

- D . Execute the pipeline.

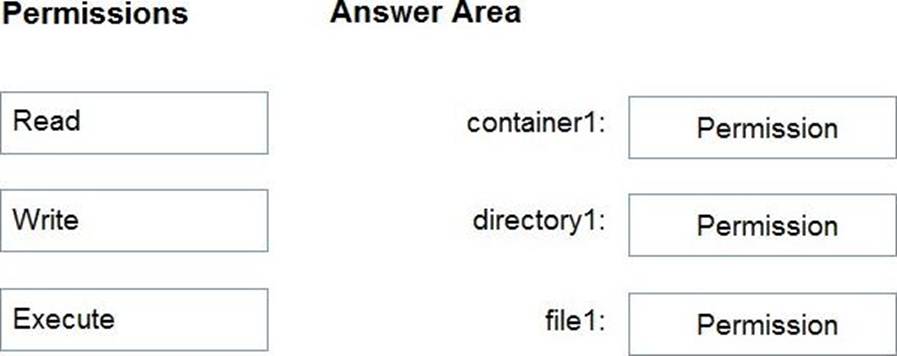

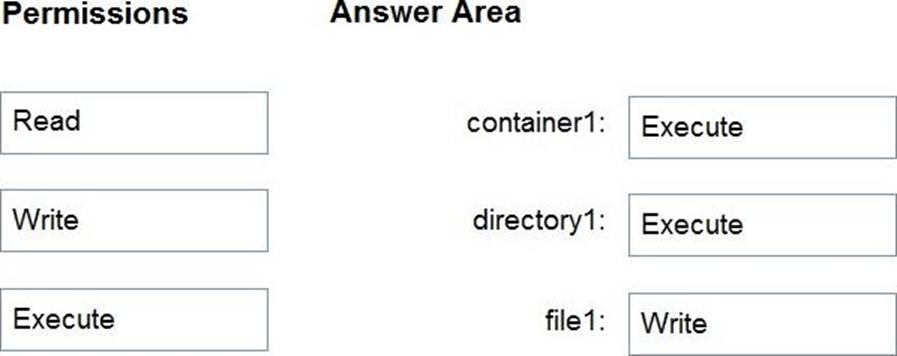

DRAG DROP

You have an Azure subscription that contains an Azure Data Lake Storage Gen2 account named storage1. Storage1 contains a container named container1. Container1 contains a directory named directory1. Directory1 contains a file named file1.

You have an Azure Active Directory (Azure AD) user named User1 that is assigned the Storage Blob Data Reader role for storage1.

You need to ensure that User1 can append data to file1. The solution must use the principle of least privilege.

Which permissions should you grant? To answer, drag the appropriate permissions to the correct resources. Each permission may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

Explanation:

Box 1: Execute

If you are granting permissions by using only ACLs (no Azure RBAC), then to grant a security principal read or write access to a file, you’ll need to give the security principal Execute permissions to the root folder of the container, and to each folder in the hierarchy of folders that lead to the file.

Box 2: Execute

On Directory: Execute (X): Required to traverse the child items of a directory

Box 3: Write

On file: Write (W): Can write or append to a file.

Reference: https://docs.microsoft.com/en-us/azure/storage/blobs/data-lake-storage-access-control
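The traversal rule in the explanation can be modeled with a small sketch: Execute is checked on the container root and on every directory leading to the file, and Write is checked on the file itself. This is a simplified model and not the Azure SDK. The `acl` dictionary, the `can_append` helper, and the use of `/` for the root of container1 are all hypothetical.

```python
from pathlib import PurePosixPath


def can_append(acl: dict, file_path: str) -> bool:
    """Return True if the ACL entries allow appending to file_path.

    acl maps a path to the set of permission letters ("r", "w", "x")
    granted to the user on that path. "/" stands for the container root.
    """
    path = PurePosixPath(file_path)
    # Execute is required on the root and on each directory above the file.
    for ancestor in path.parents:
        if "x" not in acl.get(str(ancestor), set()):
            return False
    # Write on the file itself allows writing or appending.
    return "w" in acl.get(str(path), set())


if __name__ == "__main__":
    user1_acl = {
        "/": {"x"},                  # container1 root: Execute
        "/directory1": {"x"},        # directory1: Execute
        "/directory1/file1": {"w"},  # file1: Write
    }
    print(can_append(user1_acl, "/directory1/file1"))
```

Removing the Execute entry from either directory level makes the check fail, which is why the least-privilege answer still needs Execute on both container1 and directory1.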

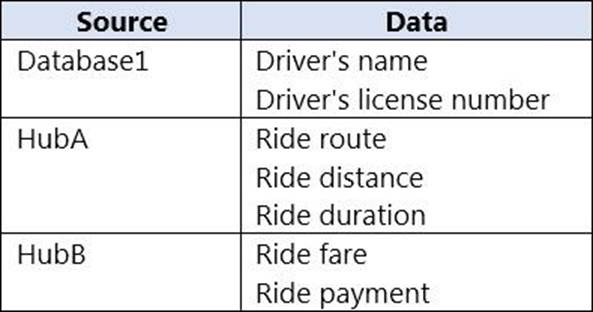

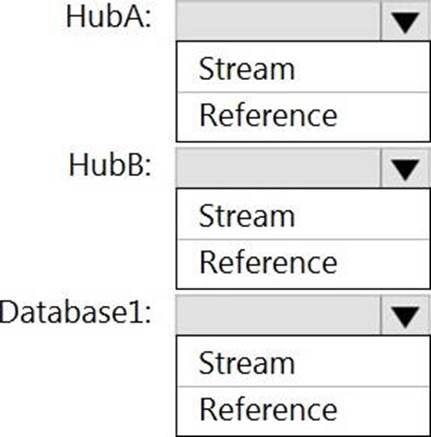

HOTSPOT

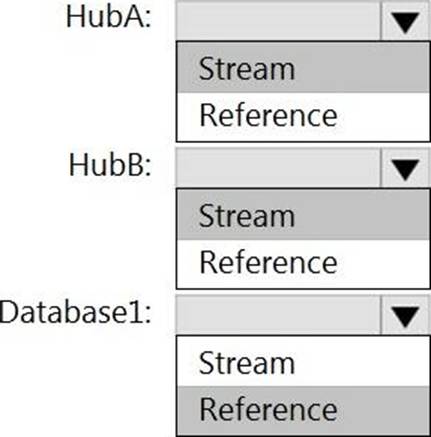

You have an Azure SQL database named Database1 and two Azure event hubs named HubA and HubB.

The data consumed from each source is shown in the following table.

You need to implement Azure Stream Analytics to calculate the average fare per mile by driver.

How should you configure the Stream Analytics input for each source? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

HubA: Stream

HubB: Stream

Database1: Reference

Reference data (also known as a lookup table) is a finite data set that is static or slowly changing in nature, used to perform a lookup or to augment your data streams. For example, in an IoT scenario, you could store metadata about sensors (which don’t change often) in reference data and join it with real-time IoT data streams. Azure Stream Analytics loads reference data in memory to achieve low-latency stream processing.

Reference: https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-use-reference-data
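The difference between the two input types can be illustrated with a rough sketch in which stream events are joined against a small in-memory lookup. The field names (`driver_id`, `fare`, `miles`), the sample reference dictionary, and the choice to compute total fare divided by total miles are assumptions made for illustration; the source table for this question is not reproduced here.

```python
from collections import defaultdict


def average_fare_per_mile(events, reference):
    """Join stream events to in-memory reference data and aggregate per driver.

    events: iterable of dicts with hypothetical keys driver_id, fare, miles.
    reference: dict mapping driver_id to a driver name (the lookup table).
    """
    totals = defaultdict(lambda: [0.0, 0.0])  # name -> [total fare, total miles]
    for event in events:
        name = reference.get(event["driver_id"])
        if name is None:
            continue  # behaves like an inner join: unmatched events are dropped
        totals[name][0] += event["fare"]
        totals[name][1] += event["miles"]
    return {
        name: fare / miles
        for name, (fare, miles) in totals.items()
        if miles > 0
    }


if __name__ == "__main__":
    drivers = {"d1": "Ana", "d2": "Ben"}  # hypothetical reference data
    stream = [
        {"driver_id": "d1", "fare": 10.0, "miles": 4.0},
        {"driver_id": "d1", "fare": 20.0, "miles": 6.0},
        {"driver_id": "d3", "fare": 5.0, "miles": 1.0},
    ]
    print(average_fare_per_mile(stream, drivers))
```

The lookup dictionary plays the role of the reference input: it is small, loaded once, and consulted for every event, whereas the events themselves arrive continuously.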

You have an Azure Data Factory instance named DF1 that contains a pipeline named PL1. PL1 includes a tumbling window trigger.

You create five clones of PL1. You configure each clone pipeline to use a different data source.

You need to ensure that the execution schedules of the cloned pipelines match the execution schedule of PL1.

What should you do?

- A . Add a new trigger to each cloned pipeline.

- B . Associate each cloned pipeline to an existing trigger.

- C . Create a tumbling window trigger dependency for the trigger of PL1.

- D . Modify the Concurrency setting of each pipeline.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB. You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is less than 1 MB.

Does this meet the goal?

- A . Yes

- B . No

A

Explanation:

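PolyBase cannot load rows that contain more than 1,000,000 bytes of data, so files whose rows exceed that size must be modified before they can be loaded. Separately, when exporting data into the ORC file format, you might get Java out-of-memory errors when there are large text columns. To work around this limitation, export only a subset of the columns.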

Reference: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
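A pre-load check along the following lines could flag rows that are too large before the copy starts. It is only a sketch: the 1,000,000-byte threshold is taken from the loading guidance and passed as a parameter, and the helper name, the UTF-8 encoding, and the one-row-per-line layout are assumptions.

```python
MAX_ROW_BYTES = 1_000_000  # assumed limit, taken from the loading guidance


def oversized_rows(lines, max_bytes=MAX_ROW_BYTES, encoding="utf-8"):
    """Return the 1-based numbers of rows whose encoded size exceeds max_bytes."""
    return [
        number
        for number, line in enumerate(lines, start=1)
        # Line terminators are stripped so only the row payload is measured.
        if len(line.rstrip("\r\n").encode(encoding)) > max_bytes
    ]


if __name__ == "__main__":
    sample = ["short row\n", "x" * 1_000_001 + "\n", "y" * 1_000_000 + "\n"]
    print(oversized_rows(sample))
```

The function accepts any iterable of strings, so an open text file can be passed directly instead of the in-memory sample.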

HOTSPOT

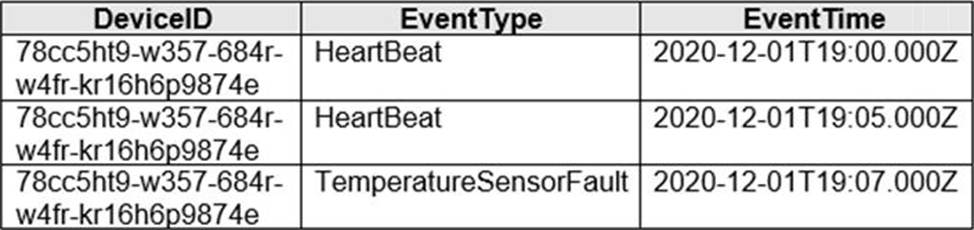

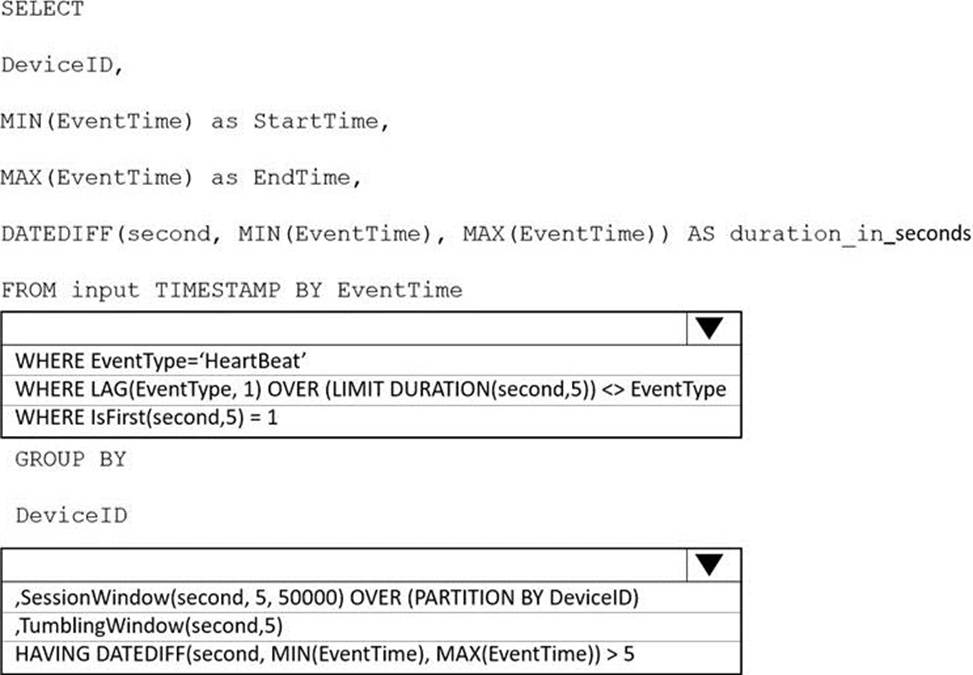

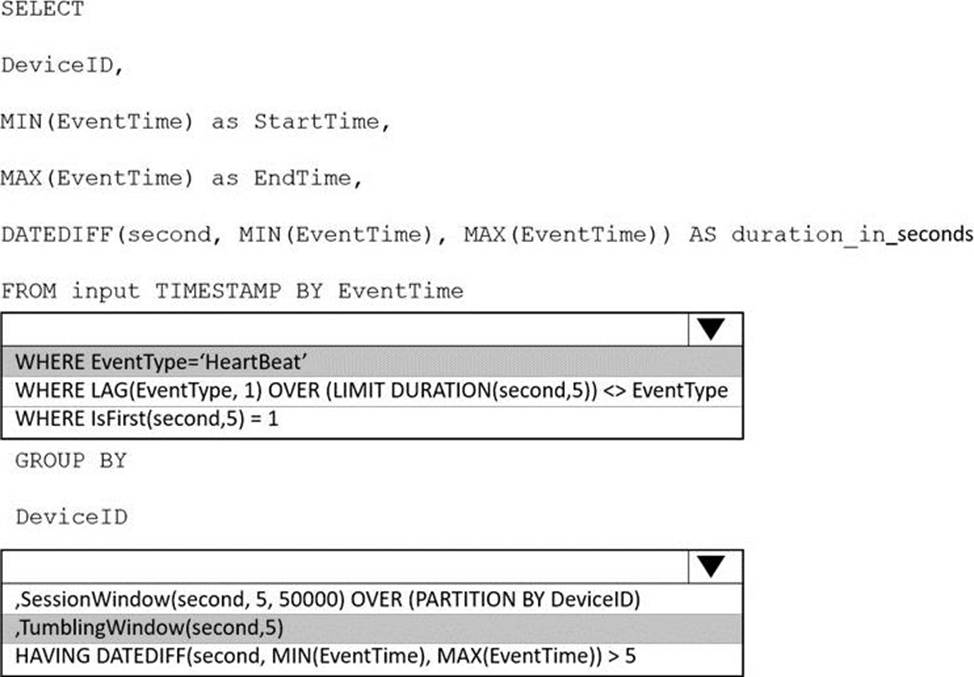

You are implementing an Azure Stream Analytics solution to process event data from devices.

The devices output events when there is a fault and emit a repeat of the event every five seconds until the fault is resolved. The devices output a heartbeat event every five seconds after a previous event if there are no faults present.

A sample of the events is shown in the following table.

You need to calculate the uptime between the faults.

How should you complete the Stream Analytics SQL query? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: WHERE EventType='HeartBeat'

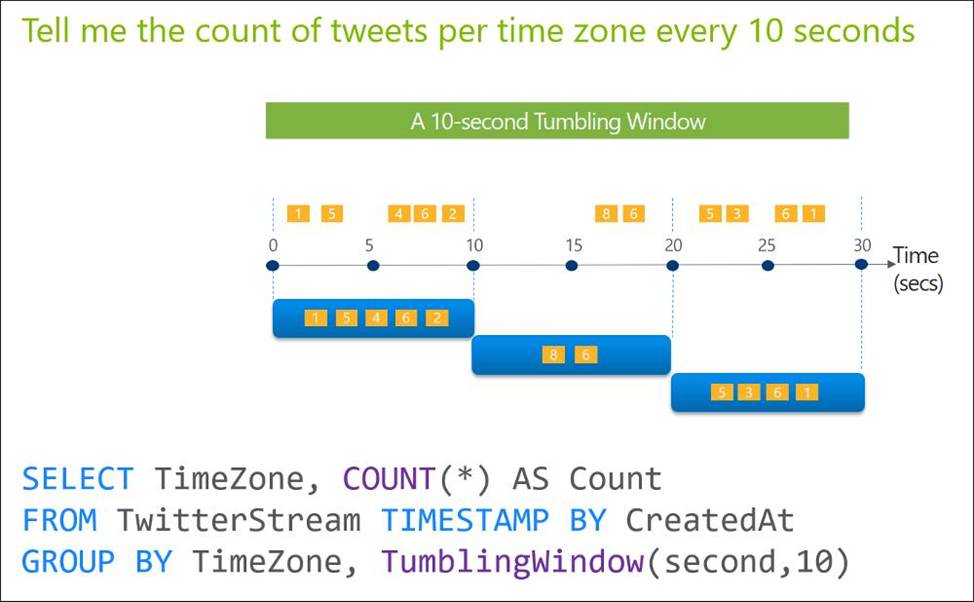

Box 2: ,TumblingWindow(Second, 5)

Tumbling windows are a series of fixed-sized, non-overlapping and contiguous time intervals.

The following diagram illustrates a stream with a series of events and how they are mapped into 10-second tumbling windows.

Reference:

https://docs.microsoft.com/en-us/stream-analytics-query/session-window-azure-stream-analytics

https://docs.microsoft.com/en-us/stream-analytics-query/tumbling-window-azure-stream-analytics
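How events fall into fixed-size, non-overlapping, contiguous intervals can be sketched in a few lines. This is not Stream Analytics code: timestamps are plain integer seconds, the helper name is hypothetical, and the sketch assigns an event that lands exactly on a boundary to the window starting there, which does not model how the service itself treats window edges.

```python
from collections import defaultdict


def tumbling_windows(timestamps, size_seconds):
    """Group integer timestamps into windows of size_seconds, keyed by window start."""
    windows = defaultdict(list)
    for t in timestamps:
        # Each timestamp belongs to exactly one window, so windows never overlap.
        start = (t // size_seconds) * size_seconds
        windows[start].append(t)
    return dict(sorted(windows.items()))


if __name__ == "__main__":
    print(tumbling_windows([1, 4, 5, 9, 12], 5))
```

Because every timestamp maps to exactly one window start, no event is counted twice, which is the property that distinguishes tumbling windows from hopping or sliding windows.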

You have an Azure subscription that contains an Azure data factory named ADF1.

From Azure Data Factory Studio, you build a complex data pipeline in ADF1.

You discover that the Save button is unavailable and there are validation errors that prevent the pipeline from being published.

You need to ensure that you can save the logic of the pipeline.

Solution: You enable Git integration for ADF1.

Does this meet the goal?

- A . Yes

- B . No

You have an Azure Synapse workspace named MyWorkspace that contains an Apache Spark database named mytestdb.

You run the following command in an Azure Synapse Analytics Spark pool in MyWorkspace.

CREATE TABLE mytestdb.myParquetTable(

EmployeeID int,

EmployeeName string,

EmployeeStartDate date)

USING Parquet

You then use Spark to insert a row into mytestdb.myParquetTable.

The row contains the following data.

One minute later, you execute the following query from a serverless SQL pool in MyWorkspace.

SELECT EmployeeID

FROM mytestdb.dbo.myParquetTable

WHERE name = 'Alice';

What will be returned by the query?

- A . 24

- B . an error

- C . a null value

B

Explanation:

Once a database has been created by a Spark job, you can create tables in it with Spark that use Parquet as the storage format. Table names will be converted to lower case and need to be queried using the lower case name. These tables will immediately become available for querying by any of the Azure Synapse workspace Spark pools. They can also be used from any of the Spark jobs subject to permissions.

Note: For external tables, since they are synchronized to serverless SQL pool asynchronously, there will be a delay until they appear.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/metadata/table
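The query's WHERE clause filters on `name`, which is not among the columns declared in the CREATE TABLE statement. A rough sketch of that check, together with the lower-casing the explanation describes, might look like this. Both helper names are hypothetical, and the `dbo` schema is taken from the query in the question.

```python
# Columns declared in the CREATE TABLE statement in the question.
DECLARED_COLUMNS = {"EmployeeID", "EmployeeName", "EmployeeStartDate"}


def serverless_table_name(database: str, spark_table: str) -> str:
    """Build the three-part name, lower-casing the table name as the explanation states."""
    return f"{database}.dbo.{spark_table.lower()}"


def unknown_columns(referenced, declared=DECLARED_COLUMNS):
    """Return referenced column names that are not declared (case-insensitive match)."""
    declared_lower = {column.lower() for column in declared}
    return [column for column in referenced if column.lower() not in declared_lower]


if __name__ == "__main__":
    print(serverless_table_name("mytestdb", "myParquetTable"))
    print(unknown_columns(["EmployeeID", "name"]))
```

Any column reported by `unknown_columns` would cause the query to fail regardless of whether the table has already been synchronized to the serverless SQL pool.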