Practice Free DOP-C02 Exam Online Questions

A company wants to migrate its content sharing web application hosted on Amazon EC2 to a serverless architecture. The company currently deploys changes to its application by creating a new Auto Scaling group of EC2 instances and a new Elastic Load Balancer, and then shifting the traffic away using an Amazon Route 53 weighted routing policy.

For its new serverless application, the company is planning to use Amazon API Gateway and AWS Lambda. The company will need to update its deployment processes to work with the new application. It will also need to retain the ability to test new features on a small number of users before rolling the features out to the entire user base.

Which deployment strategy will meet these requirements?

- A . Use AWS CDK to deploy API Gateway and Lambda functions. When code needs to be changed, update the AWS CloudFormation stack and deploy the new version of the APIs and Lambda functions. Use a Route 53 failover routing policy for the canary release strategy.

- B . Use AWS CloudFormation to deploy API Gateway and Lambda functions using Lambda function versions. When code needs to be changed, update the CloudFormation stack with the new Lambda code and update the API versions using a canary release strategy. Promote the new version when testing is complete.

- C . Use AWS Elastic Beanstalk to deploy API Gateway and Lambda functions. When code needs to be changed, deploy a new version of the API and Lambda functions. Shift traffic gradually using an Elastic Beanstalk blue/green deployment.

- D . Use AWS OpsWorks to deploy API Gateway in the service layer and Lambda functions in a custom layer. When code needs to be changed, use OpsWorks to perform a blue/green deployment and shift traffic gradually.

A company has an organization in AWS Organizations for its multi-account environment. A DevOps engineer is developing an AWS CodeArtifact based strategy for application package management across the organization. Each application team at the company has its own account in the organization. Each application team also has limited access to a centralized shared services account. Each application team needs full access to download, publish, and grant access to its own packages. Some common library packages that the application teams use must also be shared with the entire organization.

Which combination of steps will meet these requirements with the LEAST administrative overhead? (Select THREE.)

- A . Create a domain in each application team’s account. Grant each application team’s account full read access and write access to the application team’s domain.

- B . Create a domain in the shared services account. Grant the organization read access and CreateRepository access.

- C . Create a repository in each application team’s account. Grant each application team’s account full read access and write access to its own repository.

- D . Create a repository in the shared services account. Grant the organization read access to the repository in the shared services account. Set the repository as the upstream repository in each application team’s repository.

- E . For teams that require shared packages, create resource-based policies that allow read access to the repository from other application teams’ accounts.

- F . Set the other application teams’ repositories as upstream repositories.

BCD

Explanation:

Step 1: Creating a Centralized Domain in the Shared Services Account

To manage application package dependencies across multiple accounts, the most efficient solution is to create a centralized domain in the shared services account. This allows all application teams to access and manage package repositories within the same domain, ensuring consistency and centralization.

Action: Create a domain in the shared services account.

Why: A single, centralized domain reduces the need for redundant management in each application team’s account.

Reference: AWS documentation on AWS CodeArtifact domains and repositories.

This corresponds to Option B: Create a domain in the shared services account. Grant the organization read access and CreateRepository access.
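The domain policy that Option B describes could look roughly like the sketch below. It assumes a wildcard principal narrowed by the `aws:PrincipalOrgID` condition key; the organization ID is a hypothetical placeholder, and the action list is an illustrative read-plus-CreateRepository set rather than a definitive one.

```python
import json

# Hypothetical placeholder; substitute your organization's real ID.
ORG_ID = "o-exampleorgid"


def build_domain_policy(org_id: str) -> dict:
    """Return a CodeArtifact domain permissions policy that lets principals in
    the organization read domain metadata, get authorization tokens, and
    create repositories in the shared domain."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "OrgReadAndCreateRepository",
                "Effect": "Allow",
                "Principal": "*",
                "Action": [
                    "codeartifact:DescribeDomain",
                    "codeartifact:GetAuthorizationToken",
                    "codeartifact:GetDomainPermissionsPolicy",
                    "codeartifact:ListRepositoriesInDomain",
                    "codeartifact:CreateRepository",
                ],
                "Resource": "*",
                # Narrow the wildcard principal to accounts in this organization.
                "Condition": {"StringEquals": {"aws:PrincipalOrgID": org_id}},
            }
        ],
    }


if __name__ == "__main__":
    # The printed JSON could be saved to a file and applied with
    # `aws codeartifact put-domain-permissions-policy --policy-document file://...`.
    print(json.dumps(build_domain_policy(ORG_ID), indent=2))
```

Keeping the policy on the domain, rather than on every team repository, is what keeps the administrative overhead low: one document covers the whole organization.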

Step 2: Sharing Repositories Across Teams with Upstream Configurations

To share common library packages across the organization, each application team’s repository can point to the shared services repository as an upstream repository. This enables teams to access shared packages without managing them individually in each team’s account.

Action: Create a repository in the shared services account and set it as the upstream repository for each application team.

Why: Upstream repositories allow package sharing while maintaining individual team repositories for managing their own packages.

Reference: AWS documentation on Upstream repositories in CodeArtifact.

This corresponds to Option D: Create a repository in the shared services account. Grant the organization read access to the repository in the shared services account. Set the repository as the upstream repository in each application team’s repository.
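Option D's setup can be sketched as two pieces: a read-only permissions policy on the shared repository, and the request that creates a team repository with the shared repository as its upstream. The domain name, account ID, repository names, and organization ID below are hypothetical; the request dictionary mirrors the keyword arguments of boto3's `codeartifact.create_repository`, and the code builds it without calling AWS.

```python
import json

# Hypothetical names and IDs, for illustration only.
DOMAIN = "example-domain"
DOMAIN_OWNER = "111122223333"  # shared services account ID (placeholder)
SHARED_REPO = "shared-libraries"
ORG_ID = "o-exampleorgid"

# Read-only repository actions; no publish actions are granted.
READ_ONLY_ACTIONS = [
    "codeartifact:DescribePackageVersion",
    "codeartifact:DescribeRepository",
    "codeartifact:GetPackageVersionReadme",
    "codeartifact:GetRepositoryEndpoint",
    "codeartifact:ListPackages",
    "codeartifact:ListPackageVersions",
    "codeartifact:ListPackageVersionAssets",
    "codeartifact:ListPackageVersionDependencies",
    "codeartifact:ReadFromRepository",
]


def shared_repo_read_policy(org_id: str) -> dict:
    """Repository permissions policy granting the organization read-only
    access to the shared repository."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "OrgReadSharedLibraries",
                "Effect": "Allow",
                "Principal": "*",
                "Action": READ_ONLY_ACTIONS,
                "Resource": "*",
                "Condition": {"StringEquals": {"aws:PrincipalOrgID": org_id}},
            }
        ],
    }


def team_repo_request(team_repo: str) -> dict:
    """Keyword arguments for create_repository: a team repository in the
    shared domain whose upstream is the shared repository. Upstream
    repositories must belong to the same domain."""
    return {
        "domain": DOMAIN,
        "domainOwner": DOMAIN_OWNER,
        "repository": team_repo,
        "upstreams": [{"repositoryName": SHARED_REPO}],
    }


if __name__ == "__main__":
    print(json.dumps(shared_repo_read_policy(ORG_ID), indent=2))
    print(team_repo_request("team-a-packages"))
    # With team-account credentials, the request could be sent as:
    # boto3.client("codeartifact").create_repository(**team_repo_request("team-a-packages"))
```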

Step 3: Creating a Repository for Each Application Team

Each application team needs full access to download, publish, and grant access to its own packages. Because the domain policy from Option B grants the organization CreateRepository access, each team can create its own repository in the shared domain from its own account and keep full control over it.

Action: Create a repository in each application team’s account, and grant that account full read access and write access to its own repository.

Why: Teams manage their own packages without the shared services team administering each repository, and common libraries still reach every team through the upstream repository from Step 2. Option E is unnecessary: resource-based policies between individual team repositories add per-team administrative work that the centralized upstream repository already avoids.

Reference: AWS documentation on CodeArtifact domains and repository policies.

This corresponds to Option C: Create a repository in each application team’s account. Grant each application team’s account full read access and write access to its own repository.

A company has chosen AWS to host a new application. The company needs to implement a multi-account strategy. A DevOps engineer creates a new AWS account and an organization in AWS Organizations. The DevOps engineer also creates the OU structure for the organization and sets up a landing zone by using AWS Control Tower.

The DevOps engineer must implement a solution that automatically deploys resources for new accounts that users create through AWS Control Tower Account Factory. When a user creates a new account, the solution must apply AWS CloudFormation templates and SCPs that are customized for the OU or the account to automatically deploy all the resources that are attached to the account. All the OUs are enrolled in AWS Control Tower.

Which solution will meet these requirements in the MOST automated way?

- A . Use AWS Service Catalog with AWS Control Tower. Create portfolios and products in AWS Service Catalog. Grant granular permissions to provision these resources. Deploy SCPs by using the AWS CLI and JSON documents.

- B . Deploy CloudFormation stack sets by using the required templates. Enable automatic deployment. Deploy stack instances to the required accounts. Deploy a CloudFormation stack set to the organization’s management account to deploy SCPs.

- C . Create an Amazon EventBridge rule to detect the CreateManagedAccount event. Configure AWS Service Catalog as the target to deploy resources to any new accounts. Deploy SCPs by using the AWS CLI and JSON documents.

- D . Deploy the Customizations for AWS Control Tower (CfCT) solution. Use an AWS CodeCommit repository as the source. In the repository, create a custom package that includes the CloudFormation templates and the SCP JSON documents.

A company is migrating its container-based workloads to an AWS Organizations multi-account environment. The environment consists of application workload accounts that the company uses to deploy and run the containerized workloads. The company has also provisioned a shared services account for shared workloads in the organization.

The company must follow strict compliance regulations. All container images must receive security scanning before they are deployed to any environment. Images can be consumed by downstream deployment mechanisms after the images pass a scan with no critical vulnerabilities. Pre-scan and post-scan images must be isolated from one another so that a deployment can never use pre-scan images.

A DevOps engineer needs to create a strategy to centralize this process.

Which combination of steps will meet these requirements with the LEAST administrative overhead? (Select TWO.)

- A . Create Amazon Elastic Container Registry (Amazon ECR) repositories in the shared services account: one repository for each pre-scan image and one repository for each post-scan image. Configure Amazon ECR image scanning to run on new image pushes to the pre-scan repositories. Use resource-based policies to grant the organization write access to the pre-scan repositories and read access to the post-scan repositories.

- B . Create pre-scan Amazon Elastic Container Registry (Amazon ECR) repositories in each account that publishes container images. Create repositories for post-scan images in the shared services account. Configure Amazon ECR image scanning to run on new image pushes to the pre-scan repositories. Use resource-based policies to grant the organization read access to the post-scan repositories.

- C . Configure image replication for each image from the image’s pre-scan repository to the image’s post-scan repository.

- D . Create a pipeline in AWS CodePipeline for each pre-scan repository. Create a source stage that runs when new images are pushed to the pre-scan repositories. Create a stage that uses AWS CodeBuild as the action provider. Write a buildspec.yaml definition that determines the image scanning status and pushes images without critical vulnerabilities to the post-scan repositories.

- E . Create an AWS Lambda function. Create an Amazon EventBridge rule that reacts to image scanning completed events and invokes the Lambda function. Write function code that determines the image scanning status and pushes images without critical vulnerabilities to the post-scan repositories.

A, E

Explanation:

Step 1: Centralizing Image Scanning in a Shared Services Account

The first requirement is to centralize the image scanning process, ensuring pre-scan and post-scan images are stored separately. This can be achieved by creating separate pre-scan and post-scan repositories in the shared services account, with the appropriate resource-based policies to control access.

Action: Create separate ECR repositories for pre-scan and post-scan images in the shared services account. Configure resource-based policies to allow write access to pre-scan repositories and read access to post-scan repositories.

Why: This ensures that images are isolated before and after the scan, following the compliance requirements.

Reference: AWS documentation on Amazon ECR and resource-based policies.

This corresponds to Option A: Create Amazon Elastic Container Registry (Amazon ECR) repositories in the shared services account: one repository for each pre-scan image and one repository for each post-scan image. Configure Amazon ECR image scanning to run on new image pushes to the pre-scan repositories. Use resource-based policies to grant the organization write access to the pre-scan repositories and read access to the post-scan repositories.
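Option A's resource-based policies can be sketched as two ECR repository policies: push permissions on the pre-scan repositories and pull-only permissions on the post-scan repositories. The organization ID is a hypothetical placeholder, and the sketch assumes the `aws:PrincipalOrgID` condition is used to scope the wildcard principal. Callers also need `ecr:GetAuthorizationToken` in their own IAM policies, which is not part of a repository policy.

```python
import json

ORG_ID = "o-exampleorgid"  # hypothetical placeholder

# Actions a client needs to push an image (docker push) to a repository.
PUSH_ACTIONS = [
    "ecr:BatchCheckLayerAvailability",
    "ecr:InitiateLayerUpload",
    "ecr:UploadLayerPart",
    "ecr:CompleteLayerUpload",
    "ecr:PutImage",
]

# Actions a client needs to pull an image (docker pull) from a repository.
PULL_ACTIONS = [
    "ecr:BatchCheckLayerAvailability",
    "ecr:BatchGetImage",
    "ecr:GetDownloadUrlForLayer",
]


def org_repository_policy(sid: str, actions: list, org_id: str) -> dict:
    """ECR repository policy granting `actions` to every principal in the
    organization. A repository policy applies to the repository it is set
    on, so no Resource element is included."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": sid,
                "Effect": "Allow",
                "Principal": "*",
                "Action": actions,
                "Condition": {"StringEquals": {"aws:PrincipalOrgID": org_id}},
            }
        ],
    }


# Pre-scan: the organization may push. Post-scan: the organization may only pull,
# so nothing but the promotion step can place images there.
pre_scan_policy = org_repository_policy("OrgPushPreScan", PUSH_ACTIONS, ORG_ID)
post_scan_policy = org_repository_policy("OrgPullPostScan", PULL_ACTIONS, ORG_ID)

if __name__ == "__main__":
    # Each document could be applied with
    # `aws ecr set-repository-policy --repository-name <repo> --policy-text file://...`.
    print(json.dumps(pre_scan_policy, indent=2))
    print(json.dumps(post_scan_policy, indent=2))
```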

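Step 2: Promoting Images That Pass the Scan

After Amazon ECR finishes scanning an image in a pre-scan repository, it emits an image scanning completed event. An Amazon EventBridge rule can match that event and invoke an AWS Lambda function, which checks the scan findings and pushes only images with no critical vulnerabilities to the corresponding post-scan repository.

Action: Create an EventBridge rule for image scanning completed events, and have it invoke a Lambda function that promotes images without critical vulnerabilities to the post-scan repositories.

Why: The event-driven approach runs only when a scan finishes, needs no per-repository pipeline (unlike Option D), and gates promotion on the scan result. Image replication (Option C) does not fit: ECR replication copies images to other Regions or accounts rather than between repositories in the same registry, and it does not wait for or evaluate scan results, so it cannot keep unscanned images out of the post-scan repositories.

Reference: AWS documentation on Amazon ECR events and Amazon EventBridge.

This corresponds to Option E: Create an AWS Lambda function. Create an Amazon EventBridge rule that reacts to image scanning completed events and invokes the Lambda function. Write function code that determines the image scanning status and pushes images without critical vulnerabilities to the post-scan repositories.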
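Option E, which the answer key selects, can be sketched as an EventBridge event pattern plus the decision logic of the Lambda function. The sketch assumes the event shape that ECR basic scanning emits (detail-type `ECR Image Scan`, with `scan-status` and `finding-severity-counts` in `detail`); enhanced scanning through Amazon Inspector emits different events. The `pre-scan/` and `post-scan/` repository naming convention is hypothetical, and the actual image copy is deliberately left out.

```python
# EventBridge event pattern for completed basic-scanning results (assumed shape).
SCAN_COMPLETE_PATTERN = {
    "source": ["aws.ecr"],
    "detail-type": ["ECR Image Scan"],
    "detail": {"scan-status": ["COMPLETE"]},
}

PRE_PREFIX = "pre-scan/"    # hypothetical naming convention
POST_PREFIX = "post-scan/"  # hypothetical naming convention


def passes_scan(detail: dict) -> bool:
    """True when the scan completed and reported no CRITICAL findings."""
    if detail.get("scan-status") != "COMPLETE":
        return False
    counts = detail.get("finding-severity-counts", {})
    return counts.get("CRITICAL", 0) == 0


def post_scan_repository(pre_scan_repository: str) -> str:
    """Map a pre-scan repository name to its post-scan counterpart."""
    if not pre_scan_repository.startswith(PRE_PREFIX):
        raise ValueError(f"not a pre-scan repository: {pre_scan_repository}")
    return POST_PREFIX + pre_scan_repository[len(PRE_PREFIX):]


def handler(event: dict, context=None) -> dict:
    """Lambda entry point: decide whether the scanned image may be promoted."""
    detail = event.get("detail", {})
    if not passes_scan(detail):
        return {"promote": False, "repository": detail.get("repository-name")}
    target = post_scan_repository(detail["repository-name"])
    # Copying the image by digest into `target` would happen here; the
    # function's role would be the only principal allowed to push there.
    return {
        "promote": True,
        "source": detail["repository-name"],
        "target": target,
        "digest": detail["image-digest"],
    }
```

Because the function is the only writer to the post-scan repositories, a deployment that pulls from them can never receive an image that skipped or failed the scan.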

A company has an application that runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The EC2 instances are in multiple Availability Zones. The application was misconfigured in a single Availability Zone, which caused a partial outage of the application.

A DevOps engineer made changes to ensure that the unhealthy EC2 instances in one Availability Zone do not affect the healthy EC2 instances in the other Availability Zones. The DevOps engineer needs to test the application’s failover and shift where the ALB sends traffic. During failover, the ALB must avoid sending traffic to the Availability Zone where the failure has occurred.

Which solution will meet these requirements?

- A . Turn off cross-zone load balancing on the ALB. Use Amazon Route 53 Application Recovery Controller to start a zonal shift away from the Availability Zone.

- B . Turn off cross-zone load balancing on the ALB’s target group. Use Amazon Route 53 Application Recovery Controller to start a zonal shift away from the Availability Zone.

- C . Create an Amazon Route 53 Application Recovery Controller resource set that uses the DNS hostname of the ALB. Start a zonal shift for the resource set away from the Availability Zone.

- D . Create an Amazon Route 53 Application Recovery Controller resource set that uses the ARN of the ALB’s target group. Create a readiness check that uses the ElbV2TargetGroupsCanServeTraffic rule.

B

Explanation:

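Turn off cross-zone load balancing on the ALB’s target group: With cross-zone load balancing on, each load balancer node distributes traffic across registered targets in all enabled Availability Zones. Turning it off means that each load balancer node routes requests only to targets in its own Availability Zone, so removing one zone's node from service also stops traffic to that zone's targets. For an ALB, cross-zone load balancing is always on at the load balancer level and can be turned off only at the target group level, which is why Option A does not work.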

To disable cross-zone load balancing:

Open the Amazon EC2 console.

In the navigation pane, under Load Balancing, choose Target Groups, and then choose the ALB’s target group.

On the Attributes tab, choose Edit.

Set Cross-zone load balancing to Off, and then save the changes.
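The same setting is exposed as the target group attribute `load_balancing.cross_zone.enabled`. The sketch below only builds the keyword arguments for boto3's `elbv2.modify_target_group_attributes` and checks an attribute list of the shape returned by `describe_target_group_attributes`; the target group ARN in any usage would be a placeholder.

```python
CROSS_ZONE_KEY = "load_balancing.cross_zone.enabled"


def cross_zone_off_request(target_group_arn: str) -> dict:
    """Keyword arguments for elbv2.modify_target_group_attributes that turn
    off cross-zone load balancing for one target group."""
    return {
        "TargetGroupArn": target_group_arn,
        "Attributes": [{"Key": CROSS_ZONE_KEY, "Value": "false"}],
    }


def cross_zone_is_off(attributes: list) -> bool:
    """Inspect the Attributes list from describe_target_group_attributes.
    'use_load_balancer_configuration' inherits the ALB setting, which is
    always on, so only an explicit 'false' counts as off."""
    for attr in attributes:
        if attr["Key"] == CROSS_ZONE_KEY:
            return attr["Value"] == "false"
    return False
```

The equivalent CLI call is `aws elbv2 modify-target-group-attributes --target-group-arn <arn> --attributes Key=load_balancing.cross_zone.enabled,Value=false`.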

Use Amazon Route 53 Application Recovery Controller to start a zonal shift away from the Availability Zone:

Amazon Route 53 Application Recovery Controller provides the ability to control traffic flow to ensure high availability and disaster recovery.

By using Route 53 Application Recovery Controller, you can perform a zonal shift to redirect traffic away from the unhealthy Availability Zone.

To start a zonal shift:

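Start a zonal shift on the ALB resource (from the Route 53 Application Recovery Controller console, the AWS CLI, or the API), specifying the Availability Zone to shift traffic away from and an expiration for the shift.

Zonal shift does not require a cluster, control panel, or routing controls; those belong to the separate routing control feature of Route 53 Application Recovery Controller.

While the shift is active, the ALB stops routing traffic to targets in that Availability Zone. Cancel the shift, or let it expire, to send traffic back to the Availability Zone.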
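Zonal shift is also available through the `arc-zonal-shift` API (`start_zonal_shift` in boto3), which takes the resource identifier, the Availability Zone ID to move away from, an expiration, and a comment. The sketch below only builds and validates that request; it assumes the expiration format is a whole number followed by `m` (minutes) or `h` (hours), capped at 72 hours for a newly started shift. The ARN and comment in the usage are hypothetical.

```python
import re

_EXPIRES_IN = re.compile(r"(\d+)([mh])")
MAX_MINUTES = 72 * 60  # assumed cap for a newly started zonal shift


def expires_in_minutes(value: str) -> int:
    """Parse an expiresIn value such as '30m' or '2h' into minutes."""
    match = _EXPIRES_IN.fullmatch(value)
    if match is None:
        raise ValueError(f"expected <number>m or <number>h, got {value!r}")
    amount, unit = int(match.group(1)), match.group(2)
    minutes = amount * 60 if unit == "h" else amount
    if not 1 <= minutes <= MAX_MINUTES:
        raise ValueError(f"expiresIn must be between 1 minute and 72 hours, got {value!r}")
    return minutes


def zonal_shift_request(alb_arn: str, away_from_az_id: str,
                        expires_in: str, comment: str) -> dict:
    """Keyword arguments for the arc-zonal-shift client's start_zonal_shift."""
    expires_in_minutes(expires_in)  # fail fast on a malformed expiration
    return {
        "resourceIdentifier": alb_arn,
        "awayFrom": away_from_az_id,  # an AZ ID such as 'use1-az1', not 'us-east-1a'
        "expiresIn": expires_in,
        "comment": comment,
    }


if __name__ == "__main__":
    print(zonal_shift_request(
        "arn:aws:elasticloadbalancing:us-east-1:111122223333:loadbalancer/app/web/abc",
        "use1-az1", "1h", "failover test"))
```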

Reference: Disabling cross-zone load balancing

Route 53 Application Recovery Controller

A DevOps engineer is researching the least expensive way to implement an image batch processing cluster on AWS. The application cannot run in Docker containers and must run on Amazon EC2. The batch job stores checkpoint data on an NFS volume and can tolerate interruptions. Configuring the cluster software from a generic EC2 Linux image takes 30 minutes.

What is the MOST cost-effective solution?

- A . Use Amazon EFS for checkpoint data. To complete the job, use an EC2 Auto Scaling group and an On-Demand pricing model to provision EC2 instances temporarily.

- B . Use GlusterFS on EC2 instances for checkpoint data. To run the batch job, configure EC2 instances manually. When the job completes, shut down the instances manually.

- C . Use Amazon EFS for checkpoint data. Use EC2 Fleet to launch EC2 Spot Instances, and utilize user data to configure the EC2 Linux instance on startup.

- D . Use Amazon EFS for checkpoint data. Use EC2 Fleet to launch EC2 Spot Instances. Create a custom AMI for the cluster, and use the latest AMI when creating instances.

A company has configured Amazon RDS storage autoscaling for its RDS DB instances. A DevOps team needs to visualize the autoscaling events on an Amazon CloudWatch dashboard.

Which solution will meet this requirement?

- A . Create an Amazon EventBridge rule that reacts to RDS storage autoscaling events from RDS events. Create an AWS Lambda function that publishes a CloudWatch custom metric. Configure the EventBridge rule to invoke the Lambda function. Visualize the custom metric by using the CloudWatch dashboard.

- B . Create a trail by using AWS CloudTrail with management events configured. Configure the trail to send the management events to Amazon CloudWatch Logs. Create a metric filter in CloudWatch Logs to match the RDS storage autoscaling events. Visualize the metric filter by using the CloudWatch dashboard.

- C . Create an Amazon EventBridge rule that reacts to RDS storage autoscaling events from the RDS events. Create a CloudWatch alarm. Configure the EventBridge rule to change the status of the CloudWatch alarm. Visualize the alarm status by using the CloudWatch dashboard.

- D . Create a trail by using AWS CloudTrail with data events configured. Configure the trail to send the data events to Amazon CloudWatch Logs. Create a metric filter in CloudWatch Logs to match the RDS storage autoscaling events. Visualize the metric filter by using the CloudWatch dashboard.

A

Explanation:

Step 1: Reacting to RDS Storage Autoscaling Events Using Amazon EventBridge

Amazon RDS emits events when storage autoscaling occurs. To visualize these events in a CloudWatch dashboard, you can create an EventBridge rule that listens for these specific autoscaling events.

Action: Create an EventBridge rule that reacts to RDS storage autoscaling events from the RDS event stream.

Why: EventBridge allows you to listen to RDS events and route them to specific AWS services for processing.
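A rule for Step 1 could use an event pattern like the one below. The event IDs are an assumption (RDS-EVENT-0217 and RDS-EVENT-0218 are believed to be the storage autoscaling start and finish notifications) and should be checked against the Amazon RDS event categories table. The small `matches` function evaluates only the exact-value subset of EventBridge pattern matching, so it is a local test aid, not a reimplementation of EventBridge.

```python
# Assumed storage autoscaling event IDs; confirm them in the RDS event table.
STORAGE_AUTOSCALING_EVENT_IDS = ["RDS-EVENT-0217", "RDS-EVENT-0218"]

RULE_PATTERN = {
    "source": ["aws.rds"],
    "detail-type": ["RDS DB Instance Event"],
    "detail": {"EventID": STORAGE_AUTOSCALING_EVENT_IDS},
}


def matches(pattern: dict, event: dict) -> bool:
    """Exact-value subset of EventBridge matching: every pattern key must be
    present, a list names the allowed values, and nested dicts recurse."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```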

Step 2: Creating a Custom CloudWatch Metric via Lambda

Once the EventBridge rule detects a storage autoscaling event, you can use a Lambda function to publish a custom metric to CloudWatch. This metric can then be visualized in a CloudWatch dashboard.

Action: Use a Lambda function to publish custom metrics to CloudWatch based on the RDS storage autoscaling events.

Why: Custom metrics allow you to track specific events like autoscaling and visualize them easily on a CloudWatch dashboard.

Reference: AWS documentation on Publishing Custom Metrics to CloudWatch.
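The Lambda function in Step 2 can be as small as the sketch below, which turns each matched event into a `put_metric_data` request with a count of 1 per DB instance. The namespace and metric name are hypothetical, the `SourceIdentifier` field is assumed to carry the DB instance identifier, and the boto3 call is left as a comment so the sketch runs without AWS credentials.

```python
from datetime import datetime, timezone

NAMESPACE = "Custom/RDS"                  # hypothetical namespace
METRIC_NAME = "StorageAutoscalingEvents"  # hypothetical metric name


def build_put_metric_data(event: dict) -> dict:
    """Turn one matched RDS event into keyword arguments for
    cloudwatch.put_metric_data: a count of 1, dimensioned by DB instance."""
    detail = event["detail"]
    # EventBridge 'time' is ISO 8601 with a trailing 'Z'; normalize it for fromisoformat.
    timestamp = datetime.fromisoformat(event["time"].replace("Z", "+00:00"))
    return {
        "Namespace": NAMESPACE,
        "MetricData": [
            {
                "MetricName": METRIC_NAME,
                "Dimensions": [
                    {"Name": "DBInstanceIdentifier", "Value": detail["SourceIdentifier"]}
                ],
                "Timestamp": timestamp.astimezone(timezone.utc),
                "Value": 1.0,
                "Unit": "Count",
            }
        ],
    }


def handler(event, context=None):
    request = build_put_metric_data(event)
    # In Lambda, the request would be sent with:
    # boto3.client("cloudwatch").put_metric_data(**request)
    return request
```

On the dashboard, graphing this metric with the Sum statistic shows how many autoscaling events occurred in each period.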

This corresponds to Option A: Create an Amazon EventBridge rule that reacts to RDS storage autoscaling events from RDS events. Create an AWS Lambda function that publishes a CloudWatch custom metric. Configure the EventBridge rule to invoke the Lambda function. Visualize the custom metric by using the CloudWatch dashboard.

An Amazon EC2 instance is running in a VPC and needs to download an object from a restricted Amazon S3 bucket. When the DevOps engineer tries to download the object, an AccessDenied error is received.

What are the possible causes for this error? (Select TWO.)

- A . The S3 bucket default encryption is enabled.

- B . There is an error in the S3 bucket policy.

- C . The object has been moved to S3 Glacier.

- D . There is an error in the IAM role configuration.

- E . S3 Versioning is enabled.

BD

Explanation:

These are the possible causes for the AccessDenied error because they affect the permissions to access the S3 object from the EC2 instance. An S3 bucket policy is a resource-based policy that defines who can access the bucket and its objects, and what actions they can perform. An IAM role is an identity that can be assumed by an EC2 instance to grant it permissions to access AWS services and resources. If there is an error in the S3 bucket policy or the IAM role configuration, such as a missing or incorrect statement, condition, or principal, then the EC2 instance may not have the necessary permissions to download the object from the S3 bucket. https://docs.aws.amazon.com/AmazonS3/latest/userguide/example-bucket-policies.html https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html
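Why both policies matter can be shown with a deliberately simplified model of S3 request evaluation: an explicit deny in either policy wins, within one account an Allow in either the role's policy or the bucket policy is enough, and across accounts both must allow. The model ignores SCPs, permissions boundaries, VPC endpoint policies, ACLs, and KMS key policies, so treat it as a teaching aid rather than the full evaluation logic.

```python
from typing import Optional

# Each effect is "Allow", "Deny", or None when no statement in that policy matches.


def s3_request_allowed(identity_effect: Optional[str],
                       bucket_policy_effect: Optional[str],
                       same_account: bool) -> bool:
    """Simplified decision for one S3 request made with an EC2 instance role."""
    effects = (identity_effect, bucket_policy_effect)
    if "Deny" in effects:
        return False  # an explicit deny in either policy always wins
    if same_account:
        # Within one account, an Allow in either policy is enough.
        return "Allow" in effects
    # Across accounts, the role's policy and the bucket policy must both allow.
    return identity_effect == "Allow" and bucket_policy_effect == "Allow"
```

For example, a correct IAM role combined with a bucket policy statement that denies the role still yields AccessDenied, which is why an error in either policy is a plausible cause.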

A company builds a container image in an AWS CodeBuild project by running Docker commands. After the container image is built, the CodeBuild project uploads the container image to an Amazon S3 bucket. The CodeBuild project has an IAM service role that has permissions to access the S3 bucket.

A DevOps engineer needs to replace the S3 bucket with an Amazon Elastic Container Registry (Amazon ECR) repository to store the container images. The DevOps engineer creates an ECR private image repository in the same AWS Region of the CodeBuild project. The DevOps engineer adjusts the IAM service role with the permissions that are necessary to work with the new ECR repository. The DevOps engineer also places new repository information into the docker build command and the docker push command that are used in the buildspec.yml file.

When the CodeBuild project runs a build job, the job fails when the job tries to access the ECR repository.

Which solution will resolve the issue of failed access to the ECR repository?

- A . Update the buildspec.yml file to log in to the ECR repository by using the aws ecr get-login-password AWS CLI command to obtain an authentication token. Update the docker login command to use the authentication token to access the ECR repository.

- B . Add an environment variable of type SECRETS_MANAGER to the CodeBuild project. In the environment variable, include the ARN of the CodeBuild project’s IAM service role. Update the buildspec.yml file to use the new environment variable to log in with the docker login command to access the ECR repository.

- C . Update the ECR repository to be a public image repository. Add an ECR repository policy that allows the IAM service role to have access.

- D . Update the buildspec.yml file to use the AWS CLI to assume the IAM service role for ECR operations. Add an ECR repository policy that allows the IAM service role to have access.

A

Explanation:

Update the buildspec.yml file to log in to the ECR repository by using the aws ecr get-login-password AWS CLI command to obtain an authentication token. Update the docker login command to use the authentication token to access the ECR repository.

This is the correct solution. The aws ecr get-login-password AWS CLI command retrieves and displays an authentication token that can be used to log in to an ECR repository. The docker login command can use this token as a password to authenticate with the ECR repository. This way, the CodeBuild project can push and pull images from the ECR repository without any errors. For more information, see Using Amazon ECR with the AWS CLI and get-login-password.
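The login step can be illustrated with the sketch below. An ECR authorization token is the base64 encoding of `AWS:<password>`, and `aws ecr get-login-password` prints the password part, which `docker login` reads from standard input. The registry hostname format assumes the standard commercial AWS partition; the account ID and Region in any usage are placeholders.

```python
import base64


def decode_authorization_token(token: str) -> tuple:
    """Split an ECR authorization token (base64 of 'AWS:<password>') into
    (username, password)."""
    decoded = base64.b64decode(token).decode("utf-8")
    username, password = decoded.split(":", 1)
    return username, password


def registry_host(account_id: str, region: str) -> str:
    """Private registry hostname in the standard (commercial) AWS partition."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def login_command(account_id: str, region: str) -> str:
    """Shell command for the buildspec pre_build phase that authenticates
    Docker against the private registry before docker build and docker push."""
    return (
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry_host(account_id, region)}"
    )
```

Placing the generated command in the pre_build phase means the token is fetched fresh on every build, so nothing secret has to be stored in the CodeBuild project.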

A company has an organization in AWS Organizations. A DevOps engineer needs to maintain multiple AWS accounts that belong to different OUs in the organization. All resources, including IAM policies and Amazon S3 policies within an account, are deployed through AWS CloudFormation. All templates and code are maintained in an AWS CodeCommit repository. Recently, some developers have not been able to access an S3 bucket from some accounts in the organization.

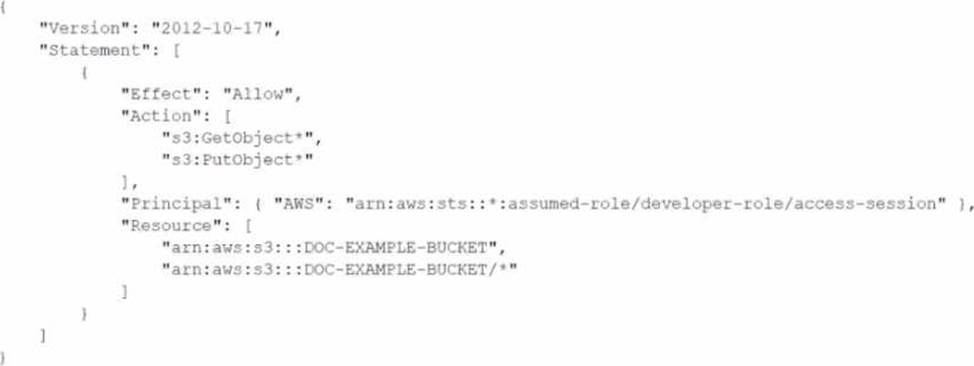

The following policy is attached to the S3 bucket.

What should the DevOps engineer do to resolve this access issue?

- A . Modify the S3 bucket policy. Turn off the S3 Block Public Access setting on the S3 bucket. In the S3 policy, add the aws:SourceAccount condition. Add the AWS account IDs of all developers who are experiencing the issue.

- B . Verify that no IAM permissions boundaries are denying developers access to the S3 bucket. Make the necessary changes to IAM permissions boundaries. Use an AWS Config recorder in the individual developer accounts that are experiencing the issue to revert any changes that are blocking access. Commit the fix back into the CodeCommit repository. Invoke deployment through CloudFormation to apply the changes.

- C . Configure an SCP that stops anyone from modifying IAM resources in developer OUs. In the S3 policy, add the aws:SourceAccount condition. Add the AWS account IDs of all developers who are experiencing the issue. Commit the fix back into the CodeCommit repository. Invoke deployment through CloudFormation to apply the changes.

- D . Ensure that no SCP is blocking access for developers to the S3 bucket. Ensure that no IAM policy permissions boundaries are denying access to developer IAM users. Make the necessary changes to the SCP and IAM policy permissions boundaries in the CodeCommit repository. Invoke deployment through CloudFormation to apply the changes.

D

Explanation:

Verify No SCP Blocking Access:

Ensure that no Service Control Policy (SCP) is blocking access for developers to the S3 bucket. SCPs are applied at the organization or organizational unit (OU) level in AWS Organizations and can restrict what actions users and roles in the affected accounts can perform.

Verify No IAM Policy Permissions Boundaries Blocking Access:

IAM permissions boundaries can limit the maximum permissions that a user or role can have. Verify that these boundaries are not restricting access to the S3 bucket.
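SCPs and permissions boundaries both act as ceilings: an action must be allowed by them and not explicitly denied, even if an identity policy grants it. The sketch below checks a policy document of either kind for that condition, using case-insensitive wildcard matching on action names. It is a simplification that ignores Resource, Condition, and NotAction elements, and the example documents in the usage are hypothetical.

```python
from fnmatch import fnmatchcase


def _as_list(value):
    return value if isinstance(value, list) else [value]


def _matches_action(patterns, action: str) -> bool:
    # IAM action names are case-insensitive and support * and ? wildcards.
    return any(fnmatchcase(action.lower(), p.lower()) for p in _as_list(patterns))


def ceiling_blocks(policy: dict, action: str) -> bool:
    """True if an SCP or permissions boundary would stop `action`: either a
    Deny statement matches it, or no Allow statement covers it."""
    statements = _as_list(policy.get("Statement", []))
    denied = any(s.get("Effect") == "Deny" and _matches_action(s.get("Action", []), action)
                 for s in statements)
    allowed = any(s.get("Effect") == "Allow" and _matches_action(s.get("Action", []), action)
                  for s in statements)
    return denied or not allowed


if __name__ == "__main__":
    scp = {"Statement": [
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
        {"Effect": "Deny", "Action": "s3:*", "Resource": "*"},
    ]}
    print(ceiling_blocks(scp, "s3:GetObject"))  # the Deny statement blocks S3 reads
```

Running a check like this over the SCP and boundary templates in the CodeCommit repository is one way to find the blocking statement before changing it and redeploying.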

Make Necessary Changes to SCP and IAM Policy Permissions Boundaries:

Adjust the SCPs and IAM permissions boundaries if they are found to be the cause of the access issue. Make sure these changes are reflected in the code maintained in the AWS CodeCommit repository.

Invoke Deployment Through CloudFormation:

Commit the updated policies to the CodeCommit repository.

Use AWS CloudFormation to deploy the changes across the relevant accounts and resources to ensure that the updated permissions are applied consistently.

By ensuring that no SCPs or IAM policy permissions boundaries are blocking access, and making the necessary changes if they are, the DevOps engineer can resolve the access issue for developers trying to access the S3 bucket.

Reference: AWS SCPs

IAM Permissions Boundaries

Deploying CloudFormation Templates