Practice Free DOP-C02 Exam Online Questions

A company has an application that runs on AWS Lambda and sends logs to Amazon CloudWatch Logs. An Amazon Kinesis data stream is subscribed to the log groups in CloudWatch Logs. A single consumer Lambda function processes the logs from the data stream and stores the logs in an Amazon S3 bucket.

The company’s DevOps team has noticed high latency during the processing and ingestion of some logs.

Which combination of steps will reduce the latency? (Select THREE.)

- A . Create a data stream consumer with enhanced fan-out. Set the Lambda function that processes the logs as the consumer.

- B . Increase the ParallelizationFactor setting in the Lambda event source mapping.

- C . Configure reserved concurrency for the Lambda function that processes the logs.

- D . Increase the batch size in the Kinesis data stream.

- E . Turn off the ReportBatchItemFailures setting in the Lambda event source mapping.

- F . Increase the number of shards in the Kinesis data stream.

ABF

Explanation:

Enhanced fan-out gives each registered consumer its own dedicated read throughput (up to 2 MB/s per shard) and pushes records to the consumer over HTTP/2 instead of waiting for the consumer to poll. Records reach the Lambda function sooner, which speeds up processing of the logs from the Kinesis data stream.

ParallelizationFactor sets how many batches from a single shard Lambda processes concurrently (from 1, the default, up to 10). Increasing it lets the Lambda function work through records from a single shard in parallel, which reduces latency.

Increasing the number of shards for Kinesis data streams provides more parallel processing power and throughput, which helps reduce latency when processing large amounts of log data.
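The relationship between shard count and ParallelizationFactor can be made concrete with a small calculation. The sketch below is illustrative only: the function names and the mapping UUID are hypothetical, and the returned dictionary is merely shaped like the keyword arguments that boto3's `update_event_source_mapping` call accepts. Nothing here calls AWS.

```python
MAX_PARALLELIZATION_FACTOR = 10  # Lambda accepts values from 1 to 10


def max_concurrent_batches(shard_count: int, parallelization_factor: int) -> int:
    """Upper bound on batches processed at once: shards x parallelization factor."""
    if shard_count < 1:
        raise ValueError("a stream needs at least one shard")
    if not 1 <= parallelization_factor <= MAX_PARALLELIZATION_FACTOR:
        raise ValueError("ParallelizationFactor must be between 1 and 10")
    return shard_count * parallelization_factor


def event_source_mapping_update(mapping_uuid: str, parallelization_factor: int) -> dict:
    """Keyword arguments shaped for the Lambda UpdateEventSourceMapping call."""
    max_concurrent_batches(1, parallelization_factor)  # reuse the range check
    return {"UUID": mapping_uuid, "ParallelizationFactor": parallelization_factor}


if __name__ == "__main__":
    # Hypothetical before/after: 2 shards with factor 1, then 4 shards with factor 5.
    print(max_concurrent_batches(2, 1))
    print(max_concurrent_batches(4, 5))
    print(event_source_mapping_update("hypothetical-mapping-uuid", 5))
```

Both levers multiply together, which is why raising either one increases how many batches the consumer can work on at the same time.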

An AWS CodePipeline pipeline has implemented a code release process. The pipeline is integrated with AWS CodeDeploy to deploy versions of an application to multiple Amazon EC2 instances for each CodePipeline stage.

During a recent deployment the pipeline failed due to a CodeDeploy issue. The DevOps team wants to improve monitoring and notifications during deployment to decrease resolution times.

What should the DevOps engineer do to create notifications when issues are discovered?

- A . Implement Amazon CloudWatch Logs for CodePipeline and CodeDeploy, create an AWS Config rule to evaluate code deployment issues, and create an Amazon Simple Notification Service (Amazon SNS) topic to notify stakeholders of deployment issues.

- B . Implement Amazon EventBridge for CodePipeline and CodeDeploy, create an AWS Lambda function to evaluate code deployment issues, and create an Amazon Simple Notification Service (Amazon SNS) topic to notify stakeholders of deployment issues.

- C . Implement AWS CloudTrail to record CodePipeline and CodeDeploy API call information, create an AWS Lambda function to evaluate code deployment issues, and create an Amazon Simple Notification Service (Amazon SNS) topic to notify stakeholders of deployment issues.

- D . Implement Amazon EventBridge for CodePipeline and CodeDeploy, create an Amazon Inspector assessment target to evaluate code deployment issues, and create an Amazon Simple Notification Service (Amazon SNS) topic to notify stakeholders of deployment issues.

B

Explanation:

Amazon EventBridge (formerly Amazon CloudWatch Events) can monitor events across different AWS resources. An EventBridge rule can invoke an AWS Lambda function when CodePipeline or CodeDeploy emits an event that indicates a deployment issue. The Lambda function can then evaluate the issue and send a notification to the appropriate stakeholders through an Amazon SNS topic. This approach allows for near-real-time notifications and faster resolution times.
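As a rough illustration of this flow, the sketch below shows event patterns that match failed CodeDeploy deployments and failed CodePipeline executions, plus a Lambda-style handler that turns a matching event into an SNS-shaped message. The handler name, the injected `publish` callable, and the sample event values are assumptions made for the example. A real function would call the SNS `publish` API with a topic ARN, which is left out so that the sketch runs without AWS.

```python
import json

# One pattern per EventBridge rule; both match on the failure state of the service event.
CODEDEPLOY_FAILURE_PATTERN = {
    "source": ["aws.codedeploy"],
    "detail-type": ["CodeDeploy Deployment State-change Notification"],
    "detail": {"state": ["FAILURE"]},
}
CODEPIPELINE_FAILURE_PATTERN = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
    "detail": {"state": ["FAILED"]},
}


def build_notification(event: dict) -> dict:
    """Turn an EventBridge event into a Subject/Message pair for SNS."""
    detail = event.get("detail", {})
    subject = f"{event.get('source', 'unknown')} reported {detail.get('state', 'UNKNOWN')}"
    # SNS limits subjects to 100 characters.
    return {"Subject": subject[:100], "Message": json.dumps(detail, indent=2, sort_keys=True)}


def handler(event, context, publish=print):
    """Lambda-style entry point; publish is injected so the sketch needs no AWS access."""
    notification = build_notification(event)
    publish(notification)
    return notification


if __name__ == "__main__":
    sample_event = {  # hypothetical values
        "source": "aws.codedeploy",
        "detail-type": "CodeDeploy Deployment State-change Notification",
        "detail": {"state": "FAILURE", "deploymentId": "d-EXAMPLE", "application": "hypothetical-app"},
    }
    handler(sample_event, None)
```

Filtering on the failure state in the rule itself keeps the Lambda function from being invoked for every successful deployment.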

A DevOps engineer is setting up an Amazon Elastic Container Service (Amazon ECS) blue/green deployment for an application by using AWS CodeDeploy and AWS CloudFormation. During the deployment window, the application must be highly available and CodeDeploy must shift 10% of traffic to a new version of the application every minute until all traffic is shifted.

Which configuration should the DevOps engineer add in the CloudFormation template to meet these requirements?

- A . Add an AppSpec file with the CodeDeployDefault.ECSLinear10PercentEvery1Minutes deployment configuration.

- B . Add the AWS::CodeDeployBlueGreen transform and the AWS::CodeDeploy::BlueGreen hook parameter with the CodeDeployDefault.ECSLinear10PercentEvery1Minutes deployment configuration.

- C . Add an AppSpec file with the ECSCanary10Percent5Minutes deployment configuration.

- D . Add the AWS::CodeDeployBlueGreen transform and the AWS::CodeDeploy::BlueGreen hook parameter with the ECSCanary10Percent5Minutes deployment configuration.

B

Explanation:

Step 1: Using AWS CloudFormation with ECS Blue/Green Deployments

The requirement is to implement an ECS blue/green deployment where traffic is shifted gradually. AWS CodeDeploy supports such blue/green deployments with predefined configurations, like CodeDeployDefault.ECSLinear10PercentEvery1Minutes, which shifts 10% of traffic every minute until all traffic is shifted.

Action: Use the AWS::CodeDeployBlueGreen transform and the AWS::CodeDeploy::BlueGreen hook in the CloudFormation template. The CodeDeployDefault.ECSLinear10PercentEvery1Minutes deployment configuration meets the requirement of shifting 10% of traffic every minute.

Why: The transform and hook parameters in CloudFormation are essential for configuring the blue/green deployment with the desired traffic-shifting behavior.

Reference: AWS documentation on ECS Blue/Green deployments.

This corresponds to Option B: Add the AWS::CodeDeployBlueGreen transform and the AWS::CodeDeploy::BlueGreen hook parameter with the CodeDeployDefault.ECSLinear10PercentEvery1Minutes deployment configuration.
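To make the linear traffic shift concrete, the sketch below computes the schedule that a 10%-per-minute shift produces. It is a simulation for illustration, not CodeDeploy code, and it assumes the first increment shifts at minute 0. The `traffic_routing_config` helper returns a fragment shaped like the hook's TrafficRoutingConfig property as I understand it; verify the property names against the CloudFormation documentation before relying on them.

```python
from typing import List, Tuple


def linear_shift_schedule(step_percent: int, interval_minutes: int) -> List[Tuple[int, int]]:
    """Return (minute, percent_on_new_version) pairs for a linear traffic shift."""
    if not 0 < step_percent <= 100:
        raise ValueError("step_percent must be between 1 and 100")
    if interval_minutes < 1:
        raise ValueError("interval_minutes must be at least 1")
    schedule = []
    shifted = 0
    minute = 0
    while shifted < 100:
        shifted = min(100, shifted + step_percent)
        schedule.append((minute, shifted))
        minute += interval_minutes
    return schedule


def traffic_routing_config(step_percent: int, interval_minutes: int) -> dict:
    """Fragment shaped like the hook's TrafficRoutingConfig (assumed property names)."""
    return {
        "Type": "TimeBasedLinear",
        "TimeBasedLinear": {"StepPercentage": step_percent, "BakeTimeMins": interval_minutes},
    }


if __name__ == "__main__":
    for minute, percent in linear_shift_schedule(10, 1):
        print(f"minute {minute}: {percent}% of traffic on the new version")
```

Under these assumptions the shift takes ten increments, and the old task set keeps serving the remaining traffic until the last increment, which is what keeps the application available during the deployment window.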

A company provides an application to customers. The application has an Amazon API Gateway REST API that invokes an AWS Lambda function. On initialization, the Lambda function loads a large amount of data from an Amazon DynamoDB table. The data load process results in long cold-start times of 8-10 seconds. The DynamoDB table has DynamoDB Accelerator (DAX) configured.

Customers report that the application intermittently takes a long time to respond to requests. The application receives thousands of requests throughout the day. In the middle of the day, the application experiences 10 times more requests than at any other time of the day. Near the end of the day, the application’s request volume decreases to 10% of its normal total.

A DevOps engineer needs to reduce the latency of the Lambda function at all times of the day.

Which solution will meet these requirements?

- A . Configure provisioned concurrency on the Lambda function with a concurrency value of 1. Delete the DAX cluster for the DynamoDB table.

- B . Configure reserved concurrency on the Lambda function with a concurrency value of 0.

- C . Configure provisioned concurrency on the Lambda function. Configure AWS Application Auto Scaling on the Lambda function with provisioned concurrency values set to a minimum of 1 and a maximum of 100.

- D . Configure reserved concurrency on the Lambda function. Configure AWS Application Auto Scaling on the API Gateway API with a reserved concurrency maximum value of 100.
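For reference, the kind of configuration that option C describes (provisioned concurrency scaled by AWS Application Auto Scaling between 1 and 100) could be sketched as below. The function name, alias, policy name, and 0.7 utilization target are hypothetical. The dictionaries are only shaped like the keyword arguments of the Application Auto Scaling `register_scalable_target` and `put_scaling_policy` calls, and nothing here calls AWS.

```python
def provisioned_concurrency_scaling(function_name: str, alias: str,
                                    minimum: int = 1, maximum: int = 100,
                                    target_utilization: float = 0.7):
    """Build request parameters for scaling a Lambda alias's provisioned concurrency."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    common = {
        "ServiceNamespace": "lambda",
        "ResourceId": f"function:{function_name}:{alias}",
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
    }
    scalable_target = {**common, "MinCapacity": minimum, "MaxCapacity": maximum}
    scaling_policy = {
        **common,
        "PolicyName": "hypothetical-provisioned-concurrency-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    }
    return scalable_target, scaling_policy


if __name__ == "__main__":
    target, policy = provisioned_concurrency_scaling("hypothetical-function", "live")
    print(target)
    print(policy)
```

Provisioned concurrency applies to a published version or alias, which is why the resource ID in this sketch includes an alias.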

A company deploys its corporate infrastructure on AWS across multiple AWS Regions and Availability Zones. The infrastructure is deployed on Amazon EC2 instances and connects with AWS IoT Greengrass devices. The company deploys additional resources on on-premises servers that are located in the corporate headquarters.

The company wants to reduce the overhead involved in maintaining and updating its resources. The company’s DevOps team plans to use AWS Systems Manager to implement automated management and application of patches. The DevOps team confirms that Systems Manager is available in the Regions where the resources are deployed. Systems Manager also is available in a Region near the corporate headquarters.

Which combination of steps must the DevOps team take to implement automated patch and configuration management across the company’s EC2 instances, IoT devices, and on-premises infrastructure? (Select THREE.)

- A . Apply tags to all the EC2 instances, AWS IoT Greengrass devices, and on-premises servers. Use Systems Manager Session Manager to push patches to all the tagged devices.

- B . Use Systems Manager Run Command to schedule patching for the EC2 instances, AWS IoT Greengrass devices, and on-premises servers.

- C . Use Systems Manager Patch Manager to schedule patching for the EC2 instances, AWS IoT Greengrass devices, and on-premises servers as a Systems Manager maintenance window task.

- D . Configure Amazon EventBridge to monitor Systems Manager Patch Manager for updates to patch baselines. Associate Systems Manager Run Command with the event to initiate a patch action for all EC2 instances, AWS IoT Greengrass devices, and on-premises servers.

- E . Create an IAM instance profile for Systems Manager. Attach the instance profile to all the EC2 instances in the AWS account. For the AWS IoT Greengrass devices and on-premises servers, create an IAM service role for Systems Manager.

- F . Generate a managed-instance activation. Use the Activation Code and Activation ID to install Systems Manager Agent (SSM Agent) on each server in the on-premises environment. Update the AWS IoT Greengrass IAM token exchange role. Use the role to deploy SSM Agent on all the IoT devices.

BEF

Explanation:

To implement automated patch and configuration management across the company’s EC2 instances, IoT devices, and on-premises infrastructure using AWS Systems Manager, the DevOps team should take the following steps:

B. Use Systems Manager Run Command to schedule patching for the EC2 instances, AWS IoT Greengrass devices, and on-premises servers.

Explanation: Systems Manager Run Command allows you to remotely and securely manage the configuration of your managed instances. It can be utilized to schedule patch management activities across EC2 instances, IoT devices, and on-premises servers.

E. Create an IAM instance profile for Systems Manager. Attach the instance profile to all the EC2 instances in the AWS account. For the AWS IoT Greengrass devices and on-premises servers, create an IAM service role for Systems Manager.

Explanation: IAM roles and profiles are necessary for Systems Manager to have the necessary permissions to manage EC2 instances, IoT Greengrass devices, and on-premises servers. Setting up proper roles will ensure secure and authorized operations.

F. Generate a managed-instance activation. Use the Activation Code and Activation ID to install Systems Manager Agent (SSM Agent) on each server in the on-premises environment. Update the AWS IoT Greengrass IAM token exchange role. Use the role to deploy SSM Agent on all the IoT devices.

Explanation: To manage servers and IoT devices using Systems Manager, you need to install the SSM Agent on these entities. Managed-instance activation will help in registering the on-premises servers with Systems Manager, and the IAM token exchange role will facilitate the deployment of the SSM Agent on IoT devices.

By combining these steps, the DevOps team can establish a solution for automated patch and configuration management across the various environments in which the company operates.
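The registration and patching steps above can be sketched as request parameters. The helper names, role name, tag key, and default instance name below are hypothetical. The dictionaries are only shaped like the keyword arguments of the Systems Manager `create_activation` and `send_command` calls, with `AWS-RunPatchBaseline` as the patching document, and nothing here calls AWS.

```python
def hybrid_activation_request(iam_role_name: str, registration_limit: int,
                              default_instance_name: str = "hypothetical-hybrid-node") -> dict:
    """Parameters for creating a managed-instance (hybrid) activation."""
    if registration_limit < 1:
        raise ValueError("registration_limit must be at least 1")
    return {
        "IamRole": iam_role_name,
        "RegistrationLimit": registration_limit,
        "DefaultInstanceName": default_instance_name,
    }


def patch_command_request(tag_key: str, tag_values, operation: str = "Install") -> dict:
    """Parameters for a Run Command invocation of the AWS-RunPatchBaseline document."""
    if operation not in ("Scan", "Install"):
        raise ValueError("operation must be 'Scan' or 'Install'")
    return {
        "DocumentName": "AWS-RunPatchBaseline",
        "Targets": [{"Key": f"tag:{tag_key}", "Values": list(tag_values)}],
        "Parameters": {"Operation": [operation]},
    }


if __name__ == "__main__":
    print(hybrid_activation_request("hypothetical-ssm-service-role", 25))
    print(patch_command_request("PatchGroup", ["on-prem", "ec2", "greengrass"]))
```

Targeting by tag lets one command reach EC2 instances, registered on-premises servers, and IoT devices alike, provided each node runs SSM Agent and carries the tag.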

A company manages multiple AWS accounts in AWS Organizations. The company’s security policy states that AWS account root user credentials for member accounts must not be used. The company monitors access to the root user credentials.

A recent alert shows that the root user in a member account launched an Amazon EC2 instance. A DevOps engineer must create an SCP at the organization’s root level that will prevent the root user in member accounts from making any AWS service API calls.

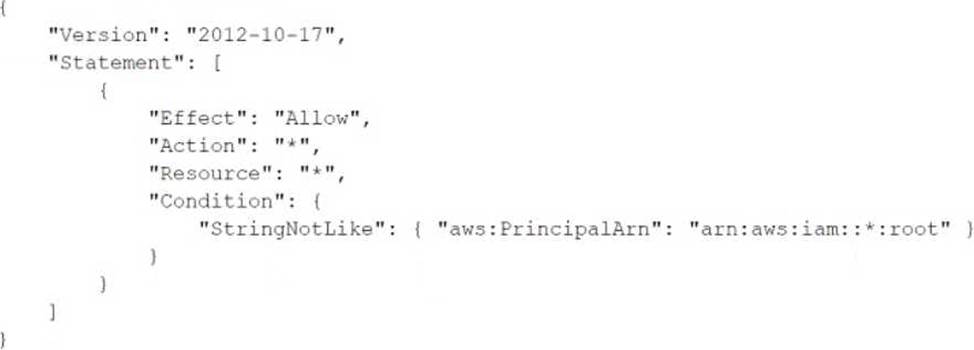

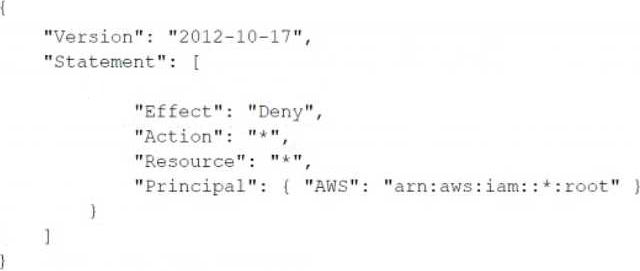

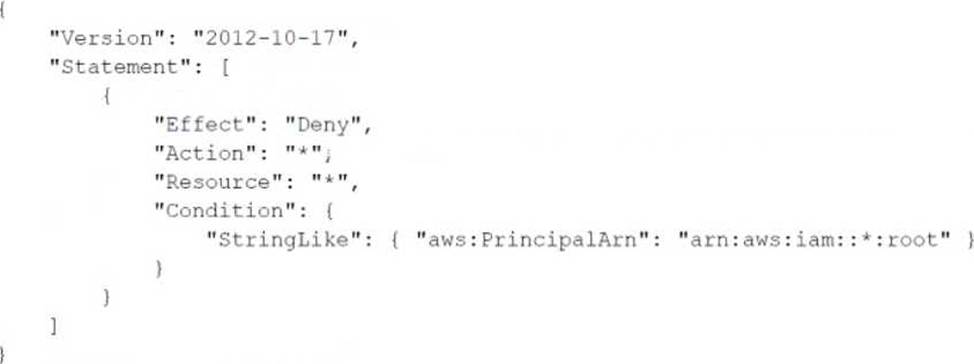

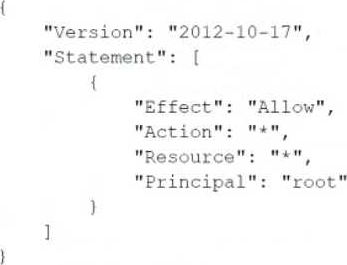

Which SCP will meet these requirements?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

D

Explanation:
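The option images are not reproduced as text here, so the following is not a transcription of option D. It is a minimal sketch of an SCP of the kind the question describes: a single Deny statement for all actions, conditioned on the root user principal ARN of any account. The `Sid` value and the function name are hypothetical.

```python
import json


def deny_root_user_scp() -> dict:
    """Build an SCP document that denies every action to member-account root users."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyRootUser",  # hypothetical statement ID
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                # SCPs have no Principal element, so the root user is matched by condition.
                "Condition": {
                    "StringLike": {"aws:PrincipalArn": ["arn:aws:iam::*:root"]}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_root_user_scp(), indent=2))
```

An SCP attached at the organization's root applies to all member accounts but does not restrict the management account.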

A company runs an application with an Amazon EC2 and on-premises configuration. A DevOps engineer needs to standardize patching across both environments. Company policy dictates that patching only happens during non-business hours.

Which combination of actions will meet these requirements? (Choose three.)

- A . Add the physical machines into AWS Systems Manager using Systems Manager Hybrid Activations.

- B . Attach an IAM role to the EC2 instances, allowing them to be managed by AWS Systems Manager.

- C . Create IAM access keys for the on-premises machines to interact with AWS Systems Manager.

- D . Run an AWS Systems Manager Automation document to patch the systems every hour.

- E . Use Amazon EventBridge scheduled events to schedule a patch window.

- F . Use AWS Systems Manager Maintenance Windows to schedule a patch window.

A company’s developers use Amazon EC2 instances as remote workstations. The company is concerned that users can create or modify EC2 security groups to allow unrestricted inbound access.

A DevOps engineer needs to develop a solution to detect when users create unrestricted security group rules. The solution must detect changes to security group rules in near real time, remove unrestricted rules, and send email notifications to the security team. The DevOps engineer has created an AWS Lambda function that checks for security group ID from input, removes rules that grant unrestricted access, and sends notifications through Amazon Simple Notification Service (Amazon SNS).

What should the DevOps engineer do next to meet the requirements?

- A . Configure the Lambda function to be invoked by the SNS topic. Create an AWS CloudTrail subscription for the SNS topic. Configure a subscription filter for security group modification events.

- B . Create an Amazon EventBridge scheduled rule to invoke the Lambda function. Define a schedule pattern that runs the Lambda function every hour.

- C . Create an Amazon EventBridge event rule that has the default event bus as the source. Define the rule’s event pattern to match EC2 security group creation and modification events. Configure the rule to invoke the Lambda function.

- D . Create an Amazon EventBridge custom event bus that subscribes to events from all AWS services. Configure the Lambda function to be invoked by the custom event bus.
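The check that the question's Lambda function performs, and an event pattern of the kind option C describes, could be sketched as follows. The pattern assumes the security group API calls reach the default event bus as CloudTrail-based events, and the list of event names is an illustrative selection rather than an exhaustive one. The function inspects a permission list shaped like the `IpPermissions` field of a `describe_security_groups` response and does not call AWS.

```python
UNRESTRICTED_CIDRS = {"0.0.0.0/0", "::/0"}

# Pattern for an EventBridge rule on the default event bus (event names are illustrative).
SECURITY_GROUP_EVENT_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": [
            "CreateSecurityGroup",
            "AuthorizeSecurityGroupIngress",
            "ModifySecurityGroupRules",
        ],
    },
}


def unrestricted_permissions(ip_permissions: list) -> list:
    """Return the inbound permissions that allow traffic from any IPv4 or IPv6 address."""
    flagged = []
    for permission in ip_permissions:
        cidrs = [r.get("CidrIp") for r in permission.get("IpRanges", [])]
        cidrs += [r.get("CidrIpv6") for r in permission.get("Ipv6Ranges", [])]
        if any(cidr in UNRESTRICTED_CIDRS for cidr in cidrs):
            flagged.append(permission)
    return flagged


if __name__ == "__main__":
    sample = [  # hypothetical rules
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443, "IpRanges": [{"CidrIp": "10.0.0.0/8"}]},
    ]
    print(unrestricted_permissions(sample))
```

This sketch flags a whole permission entry when any of its ranges is open; a production function would revoke only the open ranges and leave restricted ranges on the same port in place.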

A company uses containers for its applications. The company learns that some container images are missing required security configurations.

A DevOps engineer needs to implement a solution to create a standard base image. The solution must publish the base image weekly to the us-west-2 Region, us-east-2 Region, and eu-central-1 Region.

Which solution will meet these requirements?

- A . Create an EC2 Image Builder pipeline that uses a container recipe to build the image. Configure the pipeline to distribute the image to an Amazon Elastic Container Registry (Amazon ECR) repository in us-west-2. Configure ECR replication from us-west-2 to us-east-2 and from us-east-2 to eu-central-1. Configure the pipeline to run weekly.

- B . Create an AWS CodePipeline pipeline that uses an AWS CodeBuild project to build the image. Use AWS CodeDeploy to publish the image to an Amazon Elastic Container Registry (Amazon ECR) repository in us-west-2. Configure ECR replication from us-west-2 to us-east-2 and from us-east-2 to eu-central-1. Configure the pipeline to run weekly.

- C . Create an EC2 Image Builder pipeline that uses a container recipe to build the image. Configure the pipeline to distribute the image to Amazon Elastic Container Registry (Amazon ECR) repositories in all three Regions. Configure the pipeline to run weekly.

- D . Create an AWS CodePipeline pipeline that uses an AWS CodeBuild project to build the image. Use AWS CodeDeploy to publish the image to Amazon Elastic Container Registry (Amazon ECR) repositories in all three Regions. Configure the pipeline to run weekly.

C

Explanation:

Create an EC2 Image Builder pipeline that uses a container recipe to build the image:

EC2 Image Builder simplifies the creation, maintenance, validation, and sharing of container images. By using a container recipe, you can define the base image, components, and validation tests for your container image.

Configure the pipeline to distribute the image to Amazon Elastic Container Registry (Amazon ECR) repositories in all three Regions:

Amazon ECR provides a secure, scalable, and reliable container registry. Configuring the pipeline to distribute the image to ECR repositories in us-west-2, us-east-2, and eu-central-1 ensures that the image is available in all required Regions.

Configure the pipeline to run weekly:

Setting the pipeline to run on a weekly schedule ensures that the base image is regularly updated and published, incorporating any new security configurations or updates.

By using EC2 Image Builder to automate the creation and distribution of the container image, the solution ensures that the base image is consistently maintained and available across multiple regions with minimal management overhead.

Reference: EC2 Image Builder

Amazon ECR

Setting Up EC2 Image Builder Pipelines
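The multi-Region distribution described in this explanation could be expressed as one distribution entry per Region. The sketch below builds a list shaped like the `distributions` argument of the Image Builder `create_distribution_configuration` call. The repository name and tag are hypothetical, the weekly schedule is omitted, and nothing here calls AWS.

```python
TARGET_REGIONS = ["us-west-2", "us-east-2", "eu-central-1"]


def container_distributions(repository_name: str, tags=("latest",)) -> list:
    """Build one container distribution entry per target Region."""
    if not repository_name:
        raise ValueError("repository_name is required")
    return [
        {
            "region": region,
            "containerDistributionConfiguration": {
                "containerTags": list(tags),
                "targetRepository": {"service": "ECR", "repositoryName": repository_name},
            },
        }
        for region in TARGET_REGIONS
    ]


if __name__ == "__main__":
    for entry in container_distributions("hypothetical-base-image"):
        print(entry["region"], entry["containerDistributionConfiguration"]["targetRepository"])
```

Listing each Region directly in the distribution settings avoids chaining ECR replication rules from one Region to the next, which is the difference between options A and C.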

A company is deploying a new application that uses Amazon EC2 instances. The company needs a solution to query application logs and AWS account API activity.

Which solution will meet these requirements?

- A . Use the Amazon CloudWatch agent to send logs from the EC2 instances to Amazon CloudWatch Logs. Configure AWS CloudTrail to deliver the API logs to Amazon S3. Use CloudWatch to query both sets of logs.

- B . Use the Amazon CloudWatch agent to send logs from the EC2 instances to Amazon CloudWatch Logs. Configure AWS CloudTrail to deliver the API logs to CloudWatch Logs. Use CloudWatch Logs Insights to query both sets of logs.

- C . Use the Amazon CloudWatch agent to send logs from the EC2 instances to Amazon Kinesis. Configure AWS CloudTrail to deliver the API logs to Kinesis. Use Kinesis to load the data into Amazon Redshift. Use Amazon Redshift to query both sets of logs.

- D . Use the Amazon CloudWatch agent to send logs from the EC2 instances to Amazon S3. Use AWS CloudTrail to deliver the API logs to Amazon S3. Use Amazon Athena to query both sets of logs in Amazon S3.

B

Explanation:

The Amazon CloudWatch agent delivers log files from EC2 instances to Amazon CloudWatch Logs; it does not write logs directly to Amazon S3, so option D cannot be implemented as written. AWS CloudTrail can deliver its API activity events to a CloudWatch Logs log group as well. With both sets of logs in CloudWatch Logs, CloudWatch Logs Insights can query the application log groups and the CloudTrail log group with one query language, without moving or transforming the data. Amazon Athena can query CloudTrail logs that are delivered to S3 by using standard SQL, but the application logs would first have to be exported from CloudWatch Logs to S3, which adds steps that option B does not need.