Practice Free Databricks Generative AI Engineer Associate Exam Online Questions

A Generative AI Engineer is developing a chatbot designed to assist users with insurance-related queries. The chatbot is built on a large language model (LLM) and is conversational. However, to maintain the chatbot’s focus and to comply with company policy, it must not provide responses to questions about politics.

Instead, when presented with political inquiries, the chatbot should respond with a standard message:

“Sorry, I cannot answer that. I am a chatbot that can only answer questions around insurance.”

Which framework type should be implemented to solve this?

- A . Safety Guardrail

- B . Security Guardrail

- C . Contextual Guardrail

- D . Compliance Guardrail

A

Explanation:

In this scenario, the chatbot must avoid answering political questions and instead provide a standard message for such inquiries.

Implementing a Safety Guardrail is the appropriate solution for this:

What is a Safety Guardrail?

Safety guardrails are mechanisms implemented in Generative AI systems to ensure the model behaves within specific bounds. In this case, the guardrail ensures the chatbot does not answer politically sensitive or irrelevant questions, which aligns with the business rules.

Preventing Responses to Political Questions:

The Safety Guardrail is programmed to detect specific types of inquiries (like political questions) and prevent the model from generating responses outside its intended domain. When such queries are detected, the guardrail intervenes and provides a pre-defined response: “Sorry, I cannot answer that. I am a chatbot that can only answer questions around insurance.”

How It Works in Practice:

The LLM system can include a classification layer or trigger rules based on specific keywords related to politics. When such terms are detected, the Safety Guardrail blocks the normal generation flow and responds with the fixed message.
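The keyword-trigger variant described above can be sketched as follows. This is a minimal illustration, not a production guardrail: the keyword list, the `is_political` and `guarded_chat` helpers, and the `fake_llm` stand-in are all assumptions made for the example, and a real system would more likely use a trained classifier than a fixed word list.

```python
import re

REFUSAL = (
    "Sorry, I cannot answer that. I am a chatbot that can only "
    "answer questions around insurance."
)

# Hypothetical trigger terms; chosen only for illustration.
POLITICAL_TERMS = {
    "election", "president", "senator", "parliament",
    "political", "politics", "vote", "voting",
}


def is_political(query: str) -> bool:
    """Return True if the query contains any trigger term."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    return bool(words & POLITICAL_TERMS)


def guarded_chat(query: str, llm) -> str:
    """Block the normal generation flow for political queries."""
    if is_political(query):
        return REFUSAL
    return llm(query)


def fake_llm(query: str) -> str:
    """Stand-in for the real model call (assumption)."""
    return f"[insurance answer to: {query}]"
```

Calling `guarded_chat("Who should I vote for?", fake_llm)` returns the fixed refusal, while an insurance question is passed through to the model unchanged.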

Why Other Options Are Less Suitable:

B (Security Guardrail): This is more focused on protecting the system from security vulnerabilities or data breaches, not controlling the conversational focus.

C (Contextual Guardrail): While contextual guardrails can limit responses based on context, safety guardrails are specifically about keeping the chatbot within a safe conversational scope, which is the requirement here.

D (Compliance Guardrail): Compliance guardrails are often related to legal and regulatory adherence, which is not directly relevant here.

Therefore, a Safety Guardrail is the right framework to ensure the chatbot only answers insurance-related queries and avoids political discussions.

A Generative AI Engineer has been asked to design an LLM-based application that accomplishes the following business objective: answer employee HR questions using HR PDF documentation.

Which set of high-level tasks should the Generative AI Engineer’s system perform?

- A . Calculate averaged embeddings for each HR document, compare embeddings to user query to find the best document. Pass the best document with the user query into an LLM with a large context window to generate a response to the employee.

- B . Use an LLM to summarize HR documentation. Provide summaries of documentation and user query into an LLM with a large context window to generate a response to the user.

- C . Create an interaction matrix of historical employee questions and HR documentation. Use ALS to factorize the matrix and create embeddings. Calculate the embeddings of new queries and use them to find the best HR documentation. Use an LLM to generate a response to the employee question based upon the documentation retrieved.

- D . Split HR documentation into chunks and embed into a vector store. Use the employee question to retrieve best matched chunks of documentation, and use the LLM to generate a response to the employee based upon the documentation retrieved.

D

Explanation:

To design an LLM-based application that can answer employee HR questions using HR PDF documentation, the most effective approach is option D.

Here’s why:

Chunking and Vector Store Embedding:

HR documentation tends to be lengthy, so splitting it into smaller, manageable chunks helps optimize retrieval. These chunks are then embedded into a vector store (a database that stores vector representations of text). Each chunk of text is transformed into an embedding using a transformer-based model, which allows for efficient similarity-based retrieval.

Using Vector Search for Retrieval:

When an employee asks a question, the system converts their query into an embedding as well. This embedding is then compared with the embeddings of the document chunks in the vector store. The most semantically similar chunks are retrieved, which ensures that the answer is based on the most relevant parts of the documentation.

LLM to Generate a Response:

Once the relevant chunks are retrieved, these chunks are passed into the LLM, which uses them as context to generate a coherent and accurate response to the employee’s question.
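The three steps above (chunk and embed, retrieve, generate) can be sketched in a few lines. This is a toy illustration under stated assumptions: `embed` is a bag-of-words counter standing in for a real transformer-based embedding model, the in-memory list stands in for a vector store, and all function names are hypothetical. The final LLM call is left out; `build_prompt` only shows what would be passed to it.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=12):
    """Split a document into fixed-size word chunks."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_store(documents):
    """Chunk every document and keep (chunk, embedding) pairs."""
    return [(chunk, embed(chunk))
            for doc in documents
            for chunk in chunk_text(doc)]


def retrieve(store, question, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question, chunks):
    """Assemble the retrieved chunks and the question into an LLM prompt."""
    context = "\n".join(f"- {c}" for c in chunks)
    return (f"Answer using only this HR documentation:\n{context}\n\n"
            f"Question: {question}")
```

In a real deployment the chunks would come from parsed PDFs, and the embedding and similarity search would be handled by an embedding model and a vector store rather than by these helper functions.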

Why Other Options Are Less Suitable:

A (Calculate Averaged Embeddings): Averaging embeddings might dilute important information. It doesn’t provide enough granularity to focus on specific sections of documents.

B (Summarize HR Documentation): Summarization loses the detail necessary for HR-related queries, which are often specific. It would likely miss the mark for more detailed inquiries.

C (Interaction Matrix and ALS): This approach is better suited for recommendation systems and not for HR queries, as it’s focused on collaborative filtering rather than text-based retrieval.

Thus, option D is the most effective solution for providing precise and contextual answers based on HR documentation.

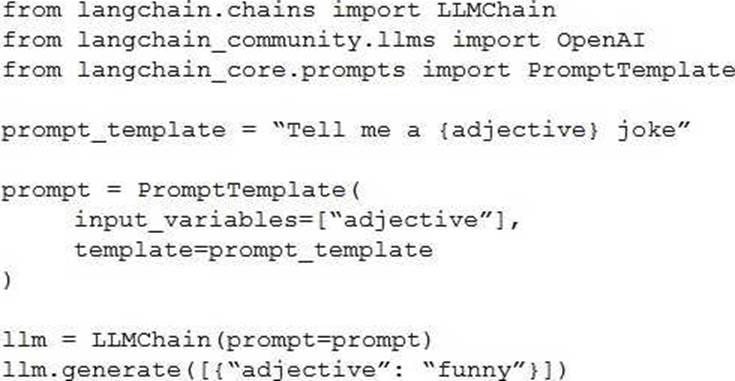

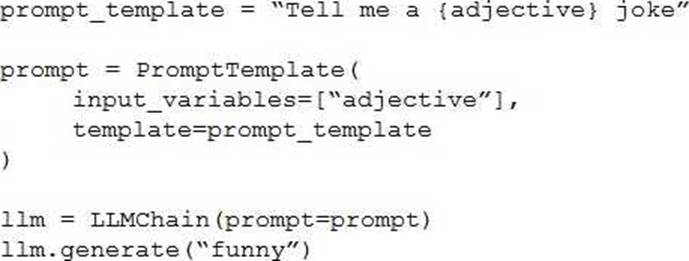

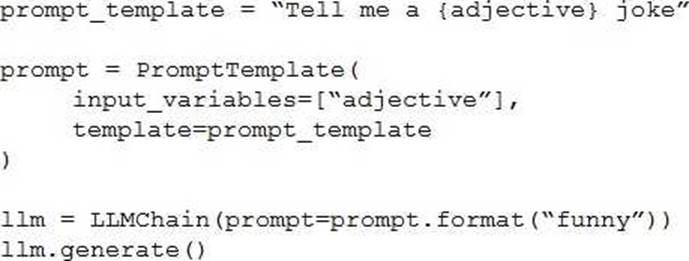

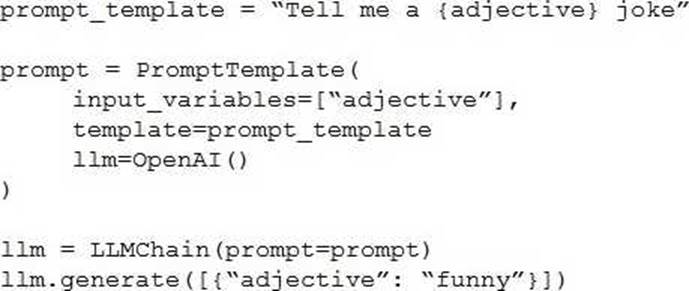

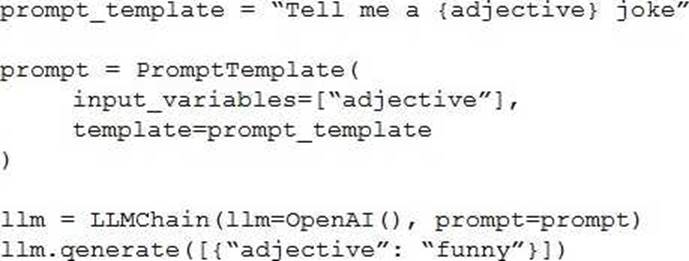

A Generative AI Engineer is testing a simple prompt template in LangChain using the code below, but is getting an error.

Assuming the API key was properly defined, what change does the Generative AI Engineer need to make to fix their chain?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

C

Explanation:

To fix the error in the LangChain code provided for using a simple prompt template, the correct approach is Option C.

Here’s a detailed breakdown of why Option C is the right choice and how it addresses the issue:

Proper Initialization: In Option C, the LLMChain is correctly initialized with the LLM instance specified as OpenAI(), which likely represents a language model (like GPT) from OpenAI. This is crucial as it specifies which model to use for generating responses.

Correct Use of Classes and Methods:

The PromptTemplate is defined with the correct format, specifying that adjective is a variable within the template. This allows dynamic insertion of values into the template when generating text.

The prompt variable is properly linked with the PromptTemplate, and the final template string is passed correctly.

The LLMChain correctly references the prompt and the initialized OpenAI() instance, ensuring that the template and the model are properly linked for generating output.
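The relationship between the template, its input variable, and the model can be illustrated without LangChain installed. The classes below are plain-Python stand-ins written for this example and are not LangChain's API; `SimplePromptTemplate`, `SimpleChain`, `echo_llm`, and the template string itself are all assumptions. Only the `adjective` variable name comes from the explanation above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SimplePromptTemplate:
    """Stand-in for a prompt template: a string plus declared variables."""
    input_variables: List[str]
    template: str

    def format(self, **kwargs) -> str:
        # Fail early if a declared variable was not supplied.
        missing = [v for v in self.input_variables if v not in kwargs]
        if missing:
            raise KeyError(f"missing input variables: {missing}")
        return self.template.format(**kwargs)


@dataclass
class SimpleChain:
    """Stand-in for a chain: needs both a model and a prompt template."""
    llm: Callable[[str], str]
    prompt: SimplePromptTemplate

    def run(self, **kwargs) -> str:
        # Fill the template first, then send the result to the model.
        return self.llm(self.prompt.format(**kwargs))


def echo_llm(text: str) -> str:
    """Placeholder for a real model call (assumption)."""
    return f"LLM received: {text}"


prompt = SimplePromptTemplate(
    input_variables=["adjective"],
    template="Tell me a {adjective} joke",  # hypothetical template text
)
chain = SimpleChain(llm=echo_llm, prompt=prompt)
```

The point of the sketch is the wiring: the chain receives the template object and the model together, and the variable value is supplied only when the chain is run, for example `chain.run(adjective="funny")`.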

Why Other Options Are Incorrect:

Option A: Passes parameters to the generate method incorrectly by structuring the input dictionary the wrong way.

Option B: Misuses prompt.format in the chain setup. PromptTemplate does have a format method, but it returns a plain string; LLMChain expects the PromptTemplate object itself as its prompt, so this usage leads to errors.

Option D: Incorrect order and setup in the initialization parameters for LLMChain, which would likely lead to a failure in recognizing the correct configuration for prompt and LLM usage.

Thus, Option C is correct because it ensures that the LangChain components are correctly set up and integrated, adhering to proper syntax and logical flow required by LangChain’s architecture. This setup avoids common pitfalls such as type errors or method misuses, which are evident in other options.

A Generative AI Engineer is creating an LLM-powered application that will need access to up-to-date news articles and stock prices.

The design requires the use of stock prices which are stored in Delta tables and finding the latest relevant news articles by searching the internet.

How should the Generative AI Engineer architect their LLM system?

- A . Use an LLM to summarize the latest news articles and look up stock tickers from the summaries to find stock prices.

- B . Query the Delta table for volatile stock prices and use an LLM to generate a search query to investigate potential causes of the stock volatility.

- C . Download and store news articles and stock price information in a vector store. Use a RAG architecture to retrieve and generate at runtime.

- D . Create an agent with tools for SQL querying of Delta tables and web searching, provide retrieved values to an LLM for generation of response.

D

Explanation:

To build an LLM-powered system that accesses up-to-date news articles and stock prices, the best approach is to create an agent that has access to specific tools (option D).

Agent with SQL and Web Search Capabilities:

By using an agent-based architecture, the LLM can interact with external tools. The agent can query Delta tables (for up-to-date stock prices) via SQL and perform web searches to retrieve the latest news articles. This modular approach ensures the system can access both structured (stock prices) and unstructured (news) data sources dynamically.

Why This Approach Works:

SQL Queries for Stock Prices: Delta tables store stock prices, which the agent can query directly for the latest data.

Web Search for News: For news articles, the agent can generate search queries and retrieve the most relevant and recent articles, then pass them to the LLM for processing.
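The two tools can be sketched as ordinary functions that an agent calls before handing the results to the LLM. This is a simplified illustration under several assumptions: an in-memory SQLite table stands in for the Delta table, `web_search_tool` is a stub rather than a real search API, the table and column names are invented, and the tool order is hard-coded, whereas a real agent would let the LLM decide which tool to call.

```python
import sqlite3


def make_price_db():
    """Create an in-memory table standing in for a Delta table (assumption)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE stock_prices (ticker TEXT, price REAL, ts TEXT)")
    conn.executemany(
        "INSERT INTO stock_prices VALUES (?, ?, ?)",
        [("ACME", 101.5, "2024-01-01"), ("ACME", 104.0, "2024-01-02")],
    )
    return conn


def sql_tool(conn, ticker):
    """Tool 1: fetch the most recent price for a ticker via SQL."""
    row = conn.execute(
        "SELECT price, ts FROM stock_prices "
        "WHERE ticker = ? ORDER BY ts DESC LIMIT 1",
        (ticker,),
    ).fetchone()
    if row is None:
        return None
    return {"ticker": ticker, "price": row[0], "as_of": row[1]}


def web_search_tool(query):
    """Tool 2: stub for a web search call; returns placeholder headlines."""
    return [f"(stub) headline for: {query}"]


def run_agent(conn, ticker, llm):
    """Call both tools, then pass the retrieved values to the LLM."""
    price = sql_tool(conn, ticker)
    news = web_search_tool(f"{ticker} stock news")
    prompt = (
        f"Latest price: {price}\n"
        f"Recent news: {news}\n"
        "Summarize this for the user."
    )
    return llm(prompt)
```

Because both tools are queried at request time, the response reflects whatever is currently in the table and on the web, which is the property that a pre-built vector store cannot guarantee.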

Why Other Options Are Less Suitable:

A (Summarizing News for Stock Prices): This convoluted approach would not ensure accuracy when retrieving stock prices, which are already structured and stored in Delta tables.

B (Stock Price Volatility Queries): While this could retrieve relevant information, it doesn’t address how to obtain the most up-to-date news articles.

C (Vector Store): Storing news articles and stock prices in a vector store might not capture the real-time nature of stock data and news updates, as it relies on pre-existing data rather than dynamic querying.

Thus, using an agent with access to both SQL for querying stock prices and web search for retrieving news articles is the best approach for ensuring up-to-date and accurate responses.