Practice Free Databricks Certified Professional Data Engineer Exam Online Questions

A data pipeline uses Structured Streaming to ingest data from Kafka to Delta Lake. Data is being stored in a bronze table, and includes the Kafka-generated timestamp, key, and value. Three months after the pipeline is deployed, the data engineering team has noticed some latency issues during certain times of the day.

A senior data engineer updates the Delta table's schema and ingestion logic to include the current timestamp (as recorded by Apache Spark) as well as the Kafka topic and partition. The team plans to use the additional metadata fields to diagnose the transient processing delays.

Which limitation will the team face while diagnosing this problem?

- A . New fields cannot be computed for historic records.

- B . Updating the table schema will invalidate the Delta transaction log metadata.

- C . Updating the table schema requires a default value provided for each file added.

- D . Spark cannot capture the topic and partition fields from the Kafka source.

A

Explanation:

When adding new fields to a Delta table’s schema, these fields will not be retrospectively applied to historical records that were ingested before the schema change. Consequently, while the team can use the new metadata fields to investigate transient processing delays moving forward, they will be unable to apply this diagnostic approach to past data that lacks these fields.

Reference: Databricks documentation on Delta Lake schema management:

https://docs.databricks.com/delta/delta-batch.html#schema-management

An upstream system has been configured to pass the date for a given batch of data to the Databricks Jobs API as a parameter.

The notebook to be scheduled will use this parameter to load data with the following code:

df = spark.read.format("parquet").load(f"/mnt/source/{date}")

Which code block should be used to create the date Python variable used in the above code block?

- A . date = spark.conf.get("date")

- B . input_dict = input()
  date = input_dict["date"]

- C . import sys
  date = sys.argv[1]

- D . date = dbutils.notebooks.getParam("date")

- E . dbutils.widgets.text("date", "null")
  date = dbutils.widgets.get("date")

E

Explanation:

The date variable should be created with the following code block:

dbutils.widgets.text("date", "null")

date = dbutils.widgets.get("date")

This code block uses the dbutils.widgets API to create a text widget named "date" and read its value as a string. The widget's default value is "null", so if no parameter is passed, the date variable will be the string "null". If a parameter is passed through the Databricks Jobs API, the date variable takes that value; for example, a parameter of "2021-11-01" sets date to "2021-11-01". The notebook can then use the date variable to load data from the corresponding path. The other options are incorrect:

Option A is incorrect because spark.conf.get("date") does not read a parameter passed through the Databricks Jobs API. The spark.conf API gets or sets Spark configuration properties, not notebook parameters.

Option B is incorrect because input() does not read a parameter passed through the Databricks Jobs API. The input() function reads user input from the standard input stream, not from the API request.

Option C is incorrect because sys.argv[1] does not read a parameter passed through the Databricks Jobs API. The sys.argv list holds the command-line arguments passed to a Python script, not parameters passed to a notebook.

Option D is incorrect because dbutils.notebooks.getParam("date") is not an existing method. The notebook utility is dbutils.notebook (singular), which provides run and exit for chaining notebooks rather than a getter for job parameters.

Reference: Widgets, Spark Configuration, input(), sys.argv, Notebooks
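
As a minimal sketch of how the retrieved value feeds the load path: the helper below is hypothetical (build_source_path is not part of any Databricks API) and takes the date as an ordinary argument so that it runs outside a notebook. In a Databricks notebook the argument would come from dbutils.widgets.get("date"), as in option E. The sketch also assumes the path in the question is meant to interpolate the variable with braces.

```python
def build_source_path(date: str, base: str = "/mnt/source") -> str:
    """Hypothetical helper: join the base directory and the batch date."""
    # Braces interpolate the variable inside an f-string; parentheses would
    # be kept as literal text and the path would end in "(date)".
    return f"{base}/{date}"


# In a Databricks notebook, the value would be read from the widget:
#   dbutils.widgets.text("date", "null")
#   date = dbutils.widgets.get("date")
date = "2021-11-01"  # stand-in for the widget value
source_path = build_source_path(date)
print(source_path)
```

Keeping the path construction in a small function makes it easy to check the parameter handling without a cluster.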

Review the following error traceback:

Which statement describes the error being raised?

- A . The code executed was PySpark but was executed in a Scala notebook.

- B . There is no column in the table named heartrateheartrateheartrate

- C . There is a type error because a column object cannot be multiplied.

- D . There is a type error because a DataFrame object cannot be multiplied.

- E . There is a syntax error because the heartrate column is not correctly identified as a column.

E

Explanation:

The error being raised is an AnalysisException, which Spark SQL raises when it cannot analyze a query because of a logical or semantic error. In this case, the error message indicates that the query cannot resolve the column name 'heartrateheartrateheartrate' given the input columns 'heartrate' and 'age'; no column with that name exists in the table, so the query is invalid. A likely cause is that the column was referenced as a plain string that was then multiplied, rather than as a column object, which is why option E (the heartrate column is not correctly identified as a column) is given as the answer. To fix the error, the query should refer to a column that exists in the table, such as 'heartrate'.

Reference: AnalysisException
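
The traceback itself is not reproduced here, so the following is only a sketch of one way such a column name can arise, assuming the query multiplied the plain string "heartrate" by 3 instead of multiplying a column object. In Python, multiplying a string by an integer repeats the string, so Spark would be asked for a column with the repeated name.

```python
# Multiplying a Python string by an integer repeats the string.
column_name = "heartrate"
repeated = 3 * column_name
print(repeated)  # heartrateheartrateheartrate

# With PySpark, arithmetic on the column needs a column object, for example
# 3 * col("heartrate") with col imported from pyspark.sql.functions.
```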

Which is a key benefit of an end-to-end test?

- A . It closely simulates real world usage of your application.

- B . It pinpoints errors in the building blocks of your application.

- C . It provides testing coverage for all code paths and branches.

- D . It makes it easier to automate your test suite.

A

Explanation:

End-to-end testing is a methodology used to test whether the flow of an application, from start to finish, behaves as expected. The key benefit of an end-to-end test is that it closely simulates real-world user behavior, ensuring that the system as a whole operates correctly.

Reference: Software Testing: End-to-End Testing

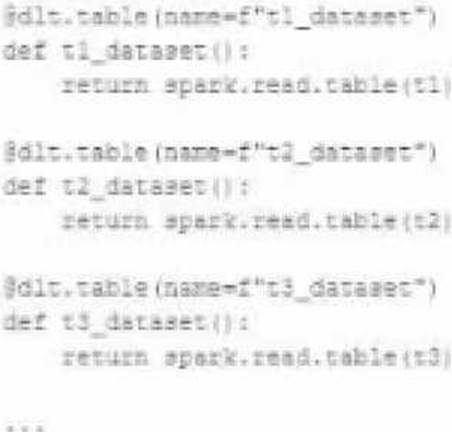

A data engineer wants to refactor the following DLT code, which includes multiple table definitions with very similar code:

In an attempt to programmatically create these tables using a parameterized table definition, the data engineer writes the following code.

The pipeline runs an update with this refactored code, but generates a different DAG showing incorrect configuration values for tables.

How can the data engineer fix this?

- A . Convert the list of configuration values to a dictionary of table settings, using table names as keys.

- B . Convert the list of configuration values to a dictionary of table settings, using a different input to the for loop.

- C . Load the configuration values for these tables from a separate file, located at a path provided by a pipeline parameter.

- D . Wrap the loop inside another table definition, using generalized names and properties to replace with those from the inner table.

A

Explanation:

The issue with the refactored code is that it tries to use string interpolation to dynamically create table names within the dlt.table decorator, which will not correctly interpret the table names. Instead, by using a dictionary with table names as keys and their configurations as values, the data engineer can iterate over the dictionary items and use the keys (table names) to properly configure the table settings. This way, the decorator can correctly recognize each table name, and the corresponding configuration settings can be applied appropriately.
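
The refactored code is not shown here, so the following is a hedged, pure-Python sketch of a general pitfall worth ruling out whenever functions are defined inside a loop: a closure captures the loop variable itself, not the value it had when the function was defined. The names tables, late and bound are hypothetical, and no DLT API is used.

```python
tables = ["t1", "t2", "t3"]  # hypothetical table names

# Late binding: each function looks up `name` when it is called,
# after the loop has finished, so all of them see the last value.
late = []
for name in tables:
    late.append(lambda: name)

# Binding the value as a default argument freezes it per iteration.
bound = []
for name in tables:
    bound.append(lambda name=name: name)

late_results = [f() for f in late]
bound_results = [f() for f in bound]
print(late_results)   # ['t3', 't3', 't3']
print(bound_results)  # ['t1', 't2', 't3']
```

Whether this applies to the pipeline in the question depends on the code that is not reproduced; the sketch only shows why values used inside loop-defined functions can differ from what the loop appears to pass in.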

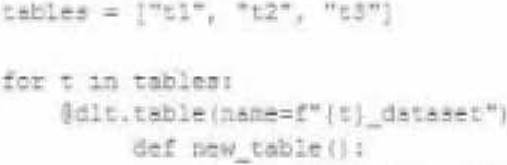

A junior member of the data engineering team is exploring the language interoperability of Databricks notebooks. The intended outcome of the below code is to register a view of all sales that occurred in countries on the continent of Africa that appear in the geo_lookup table.

Before executing the code, running SHOW TABLES on the current database indicates the database contains only two tables: geo_lookup and sales.

Which statement correctly describes the outcome of executing these command cells in order in an interactive notebook?

- A . Both commands will succeed. Executing SHOW TABLES will show that countries_af and sales_af have been registered as views.

- B . Cmd 1 will succeed. Cmd 2 will search all accessible databases for a table or view named countries_af; if this entity exists, Cmd 2 will succeed.

- C . Cmd 1 will succeed and Cmd 2 will fail; countries_af will be a Python variable representing a PySpark DataFrame.

- D . Both commands will fail. No new variables, tables, or views will be created.

- E . Cmd 1 will succeed and Cmd 2 will fail; countries_af will be a Python variable containing a list of strings.

E

Explanation:

This is the correct answer because Cmd 1 is written in Python and uses a list comprehension to extract the country names from the geo_lookup table and store them in a Python variable named countries_af. This variable will contain a list of strings, not a PySpark DataFrame or a SQL view. Cmd 2 is written in SQL and tries to create a view named sales_af by selecting from the sales table where city is in countries_af. This command will fail because countries_af is a Python variable, not a table or view visible to SQL, so it cannot be referenced in a SQL query. A better approach would be to use spark.sql() to execute the SQL query from Python and pass the countries_af variable as a parameter.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Language Interoperability” section; Databricks Documentation, under “Mix languages” section.

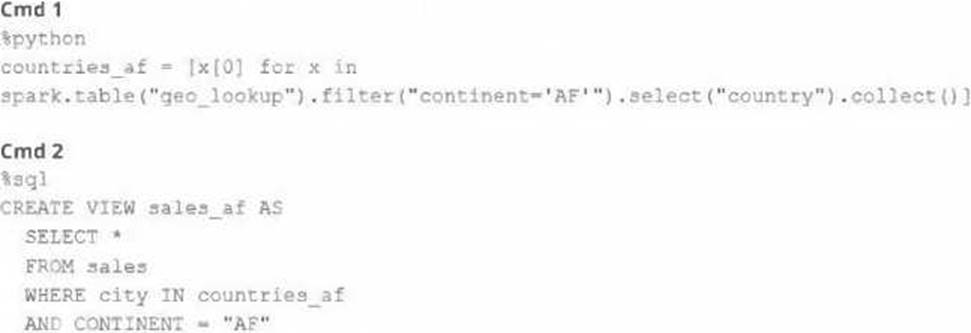

The data engineering team maintains the following code:

Assuming that this code produces logically correct results and the data in the source table has been de-duplicated and validated, which statement describes what will occur when this code is executed?

- A . The silver_customer_sales table will be overwritten by aggregated values calculated from all records in the gold_customer_lifetime_sales_summary table as a batch job.

- B . A batch job will update the gold_customer_lifetime_sales_summary table, replacing only those rows that have different values than the current version of the table, using customer_id as the primary key.

- C . The gold_customer_lifetime_sales_summary table will be overwritten by aggregated values calculated from all records in the silver_customer_sales table as a batch job.

- D . An incremental job will leverage running information in the state store to update aggregate values in the gold_customer_lifetime_sales_summary table.

- E . An incremental job will detect if new rows have been written to the silver_customer_sales table; if new rows are detected, all aggregates will be recalculated and used to overwrite the gold_customer_lifetime_sales_summary table.

C

Explanation:

This code is using the pyspark.sql.functions library to group the silver_customer_sales table by customer_id and then aggregate the data using the minimum sale date, maximum sale total, and sum of distinct order ids. The resulting aggregated data is then written to the gold_customer_lifetime_sales_summary table, overwriting any existing data in that table. This is a batch job that does not use any incremental or streaming logic, and does not perform any merge or update operations. Therefore, the code will overwrite the gold table with the aggregated values from the silver table every time it is executed.

Reference:

https://docs.databricks.com/spark/latest/dataframes-datasets/introduction-to-dataframes-python.html

https://docs.databricks.com/spark/latest/dataframes-datasets/transforming-data-with-dataframes.html

https://docs.databricks.com/spark/latest/dataframes-datasets/aggregating-data-with-dataframes.html

The data architect has mandated that all tables in the Lakehouse should be configured as external Delta Lake tables.

Which approach will ensure that this requirement is met?

- A . Whenever a database is being created, make sure that the location keyword is used.

- B . When configuring an external data warehouse for all table storage, leverage Databricks for all ELT.

- C . Whenever a table is being created, make sure that the location keyword is used.

- D . When tables are created, make sure that the external keyword is used in the create table statement.

- E . When the workspace is being configured, make sure that external cloud object storage has been mounted.

C

Explanation:

Option C is correct because specifying a location when each table is created is what makes the table external. An external (unmanaged) table stores its data files at a path you specify rather than in the default warehouse directory; the metastore still tracks the table's metadata, but Databricks does not manage the underlying files. An external table can be created by using the location keyword to specify the path to a directory in a storage system such as DBFS or S3. Because dropping an external table removes only its metadata, the data files remain in place, and the team can also use existing data without moving or copying it.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Delta Lake” section; Databricks Documentation, under “Create an external table” section.

A nightly job ingests data into a Delta Lake table using the following code:

The next step in the pipeline requires a function that returns an object that can be used to manipulate new records that have not yet been processed to the next table in the pipeline.

Which code snippet completes this function definition?

A) def new_records():

B) return spark.readStream.table("bronze")

C) return spark.readStream.load("bronze")

D) return spark.read.option("readChangeFeed", "true").table("bronze")

E)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

- E . Option E

E

Explanation:

https://docs.databricks.com/en/delta/delta-change-data-feed.html

A table is registered with the following code:

Both users and orders are Delta Lake tables.

Which statement describes the results of querying recent_orders?

- A . All logic will execute at query time and return the result of joining the valid versions of the source tables at the time the query finishes.

- B . All logic will execute when the table is defined and store the result of joining tables to the DBFS; this stored data will be returned when the table is queried.

- C . Results will be computed and cached when the table is defined; these cached results will incrementally update as new records are inserted into source tables.

- D . All logic will execute at query time and return the result of joining the valid versions of the source tables at the time the query began.

- E . The versions of each source table will be stored in the table transaction log; query results will be saved to DBFS with each query.