Practice Free Databricks Certified Professional Data Engineer Exam Online Questions

The Databricks workspace administrator has configured interactive clusters for each of the data engineering groups. To control costs, clusters are set to terminate after 30 minutes of inactivity. Each user should be able to execute workloads against their assigned clusters at any time of the day.

Assuming users have been added to a workspace but not granted any permissions, which of the following describes the minimal permissions a user would need to start and attach to an already configured cluster?

- A . "Can Manage" privileges on the required cluster

- B . Workspace Admin privileges, cluster creation allowed. "Can Attach To" privileges on the required cluster

- C . Cluster creation allowed. "Can Attach To" privileges on the required cluster

- D . "Can Restart" privileges on the required cluster

- E . Cluster creation allowed. "Can Restart" privileges on the required cluster

D

Explanation:

https://learn.microsoft.com/en-us/azure/databricks/security/auth-authz/access-control/cluster-acl

https://docs.databricks.com/en/security/auth-authz/access-control/cluster-acl.html

A distributed team of data analysts share computing resources on an interactive cluster with autoscaling configured. In order to better manage costs and query throughput, the workspace administrator is hoping to evaluate whether cluster upscaling is caused by many concurrent users or resource-intensive queries.

In which location can one review the timeline for cluster resizing events?

- A . Workspace audit logs

- B . Driver’s log file

- C . Ganglia

- D . Cluster Event Log

- E . Executor’s log file

A junior data engineer has been asked to develop a streaming data pipeline with a grouped aggregation using DataFrame df. The pipeline needs to calculate the average humidity and average temperature for each non-overlapping five-minute interval. Incremental state information should be maintained for 10 minutes for late-arriving data.

Streaming DataFrame df has the following schema:

"device_id INT, event_time TIMESTAMP, temp FLOAT, humidity FLOAT"

Code block:

Choose the response that correctly fills in the blank within the code block to complete this task.

- A . withWatermark("event_time", "10 minutes")

- B . awaitArrival("event_time", "10 minutes")

- C . await("event_time + ‘10 minutes’")

- D . slidingWindow("event_time", "10 minutes")

- E . delayWrite("event_time", "10 minutes")

A

Explanation:

The correct answer is A, withWatermark("event_time", "10 minutes"). The question asks for incremental state to be maintained for 10 minutes to accommodate late-arriving data, and withWatermark is the Structured Streaming method that sets this bound. It takes the event-time column and a lateness threshold that tells the engine how long to keep aggregation state available for late records; here the threshold is 10 minutes. The other options are not valid Structured Streaming methods.

Reference: Watermarking: https://docs.databricks.com/spark/latest/structured-streaming/watermarks.html Windowed aggregations: https://docs.databricks.com/spark/latest/structured-streaming/window-operations.html
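To make the watermark behaviour concrete, here is a minimal plain-Python model of five-minute tumbling windows with a 10-minute lateness threshold. It is a sketch, not Spark code: the names `aggregate`, `window_start`, and `at`, and the sample readings, are hypothetical, and the watermark is advanced per record, whereas Structured Streaming advances it between micro-batches. In PySpark the corresponding calls are `withWatermark` and `pyspark.sql.functions.window`.

```python
from collections import defaultdict
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)      # non-overlapping (tumbling) window size
LATENESS = timedelta(minutes=10)   # watermark threshold for late data
EPOCH = datetime(1970, 1, 1)


def window_start(ts):
    """Return the start of the five-minute window that contains ts."""
    return ts - (ts - EPOCH) % WINDOW


def aggregate(events):
    """Average temp and humidity per window, dropping records that are too late.

    events: iterable of (event_time, temp, humidity) in arrival order.
    """
    state = defaultdict(list)   # window start -> list of (temp, humidity)
    dropped = []
    max_seen = None
    for ts, temp, humidity in events:
        max_seen = ts if max_seen is None else max(max_seen, ts)
        watermark = max_seen - LATENESS
        start = window_start(ts)
        # A window whose end is at or before the watermark is treated as closed.
        if start + WINDOW <= watermark:
            dropped.append(ts)
            continue
        state[start].append((temp, humidity))
    averages = {
        start: (
            sum(t for t, _ in rows) / len(rows),
            sum(h for _, h in rows) / len(rows),
        )
        for start, rows in state.items()
    }
    return averages, dropped


def at(minute):
    """Hypothetical helper: a timestamp at 12:<minute> on an arbitrary day."""
    return datetime(2024, 1, 1, 12, minute)


events = [
    (at(1), 20.0, 40.0),
    (at(3), 22.0, 50.0),
    (at(20), 30.0, 60.0),  # moves the watermark to 12:10
    (at(12), 10.0, 10.0),  # late, but its window ends at 12:15, so it is kept
    (at(2), 99.0, 99.0),   # its window ended at 12:05, so it is dropped
]
averages, dropped = aggregate(events)
```

The record stamped 12:12 arrives out of order but is still counted, while the record stamped 12:02 is discarded because its window closed once the watermark passed 12:05.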

An upstream source writes Parquet data as hourly batches to directories named with the current date.

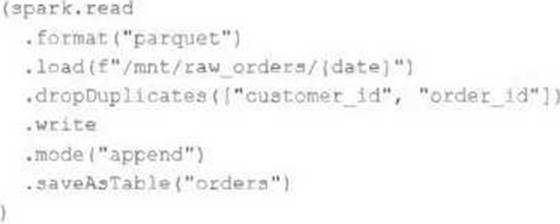

A nightly batch job runs the following code to ingest all data from the previous day as indicated by the date variable:

Assume that the fields customer_id and order_id serve as a composite key to uniquely identify each order.

If the upstream system is known to occasionally produce duplicate entries for a single order hours apart, which statement is correct?

- A . Each write to the orders table will only contain unique records, and only those records without duplicates in the target table will be written.

- B . Each write to the orders table will only contain unique records, but newly written records may have duplicates already present in the target table.

- C . Each write to the orders table will only contain unique records; if existing records with the same key are present in the target table, these records will be overwritten.

- D . Each write to the orders table will only contain unique records; if existing records with the same key are present in the target table, the operation will fail.

- E . Each write to the orders table will run deduplication over the union of new and existing records, ensuring no duplicate records are present.

B

Explanation:

B is correct because the code calls dropDuplicates to remove duplicate records within each batch before writing to the orders table. That method does not compare the batch against earlier batches or against the target table, so newly written records may duplicate records already present there. To avoid this, a better approach is to write with a Delta Lake MERGE INTO operation keyed on customer_id and order_id.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Delta Lake” section; Databricks Documentation, under “DROP DUPLICATES” section.
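The gap described above can be reproduced with a small plain-Python model. This is an illustrative sketch only: `drop_duplicates` and `nightly_append` are hypothetical stand-ins for the per-batch deduplication and the append, and the sample rows are invented.

```python
KEY = ("customer_id", "order_id")  # composite key from the question


def drop_duplicates(batch, key=KEY):
    """Keep the first row seen for each key within a single batch."""
    seen = set()
    unique = []
    for row in batch:
        k = tuple(row[c] for c in key)
        if k not in seen:
            seen.add(k)
            unique.append(row)
    return unique


def nightly_append(target, batch):
    """Deduplicate the batch, then append it without looking at the target."""
    target.extend(drop_duplicates(batch))


orders = []
day_1 = [
    {"customer_id": 1, "order_id": 100, "amount": 25.0},
    {"customer_id": 1, "order_id": 100, "amount": 25.0},  # duplicate inside the batch
    {"customer_id": 2, "order_id": 200, "amount": 10.0},
]
day_2 = [
    {"customer_id": 2, "order_id": 200, "amount": 10.0},  # same order, next day's batch
]

nightly_append(orders, day_1)
nightly_append(orders, day_2)
keys = [(r["customer_id"], r["order_id"]) for r in orders]
```

The duplicate inside `day_1` is removed, but order (2, 200) ends up in `orders` twice because the second batch is never compared with rows already written.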

The data governance team is reviewing code used for deleting records for compliance with GDPR.

They note the following logic is used to delete records from the Delta Lake table named users.

Assuming that user_id is a unique identifying key and that delete_requests contains all users that have requested deletion, which statement describes whether successfully executing the above logic guarantees that the records to be deleted are no longer accessible and why?

- A . Yes; Delta Lake ACID guarantees provide assurance that the delete command succeeded fully and permanently purged these records.

- B . No; the Delta cache may return records from previous versions of the table until the cluster is restarted.

- C . Yes; the Delta cache immediately updates to reflect the latest data files recorded to disk.

- D . No; the Delta Lake delete command only provides ACID guarantees when combined with the merge into command.

- E . No; files containing deleted records may still be accessible with time travel until a vacuum command is used to remove invalidated data files.

E

Explanation:

The code uses the DELETE FROM command to delete records from the users table that match a condition based on a join with another table called delete_requests, which contains all users that have requested deletion. The DELETE FROM command deletes records from a Delta Lake table by creating a new version of the table that does not contain the deleted records. However, this does not guarantee that the records to be deleted are no longer accessible, because Delta Lake supports time travel, which allows querying previous versions of the table using a timestamp or version number. Therefore, files containing deleted records may still be accessible with time travel until a vacuum command is used to remove invalidated data files from physical storage.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Delta Lake” section; Databricks Documentation, under “Delete from a table” section; Databricks Documentation, under “Remove files no longer referenced by a Delta table” section.
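The interaction between delete, time travel, and vacuum can be sketched with a toy versioned table. This is a simplified model under stated assumptions, not Delta Lake's implementation: `ToyVersionedTable` and its methods are hypothetical, each version references exactly one "file", and `vacuum` ignores the retention threshold that the real VACUUM command applies (7 days by default).

```python
class ToyVersionedTable:
    """Toy model: each version points at data files; old files linger until vacuum."""

    def __init__(self, rows):
        self._files = {0: list(rows)}  # file id -> rows stored in that file
        self._versions = [[0]]         # version number -> file ids it references
        self._next_file = 1

    def delete_where(self, predicate):
        """Write a new file without the matching rows and record a new version."""
        current = self.read()
        kept = [r for r in current if not predicate(r)]
        file_id = self._next_file
        self._next_file += 1
        self._files[file_id] = kept
        self._versions.append([file_id])

    def read(self, version=None):
        """Read the latest version, or an older one (time travel)."""
        v = len(self._versions) - 1 if version is None else version
        return [r for file_id in self._versions[v] for r in self._files[file_id]]

    def vacuum(self):
        """Physically remove files that the latest version no longer references."""
        live = set(self._versions[-1])
        for file_id in list(self._files):
            if file_id not in live:
                del self._files[file_id]


users = ToyVersionedTable([{"user_id": 1}, {"user_id": 2}, {"user_id": 3}])
delete_requests = {2}

users.delete_where(lambda r: r["user_id"] in delete_requests)
latest_ids = [r["user_id"] for r in users.read()]
old_ids = [r["user_id"] for r in users.read(version=0)]  # still readable

users.vacuum()
try:
    users.read(version=0)
    readable_after_vacuum = True
except KeyError:
    readable_after_vacuum = False
```

Before `vacuum`, version 0 still returns user 2 even though the latest version does not; after `vacuum`, the old file is gone and the time-travel read fails.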

A user wants to use DLT expectations to validate that a derived table report contains all records from the source, included in the table validation_copy.

The user attempts and fails to accomplish this by adding an expectation to the report table definition.

Which approach would allow using DLT expectations to validate all expected records are present in this table?

- A . Define a SQL UDF that performs a left outer join on two tables, and check if this returns null values for report key values in a DLT expectation for the report table.

- B . Define a function that performs a left outer join on validation_copy and report, and check against the result in a DLT expectation for the report table

- C . Define a temporary table that performs a left outer join on validation_copy and report, and define an expectation that no report key values are null

- D . Define a view that performs a left outer join on validation_copy and report, and reference this view in DLT expectations for the report table

D

Explanation:

To validate that all records from the source are included in the derived table, creating a view that performs a left outer join between the validation_copy table and the report table is effective. The view can highlight any discrepancies, such as null values in the report table’s key columns, indicating missing records. This view can then be referenced in DLT (Delta Live Tables) expectations for the report table to ensure data integrity. This approach allows for a comprehensive comparison between the source and the derived table.

Reference: Databricks Documentation on Delta Live Tables and Expectations: Delta Live Tables Expectations
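The left outer join check described above can be tried outside DLT with SQLite from Python's standard library. This is only a sketch of the join logic, not DLT syntax: the column `record_id`, the view name `report_compare`, and the sample rows are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE validation_copy (record_id INTEGER PRIMARY KEY);
    CREATE TABLE report (record_id INTEGER PRIMARY KEY, total REAL);

    INSERT INTO validation_copy VALUES (1), (2), (3);
    INSERT INTO report VALUES (1, 10.0), (3, 30.0);  -- record 2 is missing

    -- Every source row appears once; report_id is NULL when the report lacks it.
    CREATE VIEW report_compare AS
    SELECT v.record_id AS source_id, r.record_id AS report_id
    FROM validation_copy AS v
    LEFT OUTER JOIN report AS r ON v.record_id = r.record_id;
    """
)

# The expectation "report_id IS NOT NULL" is violated by exactly these rows.
missing = [
    row[0]
    for row in conn.execute(
        "SELECT source_id FROM report_compare "
        "WHERE report_id IS NULL ORDER BY source_id"
    )
]
```

An expectation that the report-side key is never null fails for each entry in `missing`, which is how the view surfaces source records that did not reach the report.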

A Delta Lake table representing metadata about content from users has the following schema:

Based on the above schema, which column is a good candidate for partitioning the Delta Table?

- A . Date

- B . Post_id

- C . User_id

- D . Post_time

A

Explanation:

Partitioning a Delta Lake table improves query performance by organizing data into partitions based on the values of a column. In the given schema, the date column is a good candidate for partitioning for several reasons:

Time-Based Queries: If queries frequently filter or group by date, partitioning by the date column can significantly improve performance by limiting the amount of data scanned.

Granularity: The date column likely has a granularity that leads to a reasonable number of partitions (not too many and not too few). This balance is important for optimizing both read and write performance.

Data Skew: Other columns like post_id or user_id might lead to uneven partition sizes (data skew), which can negatively impact performance.

Partitioning by post_time could also be considered, but typically date is preferred due to its more manageable granularity.

Reference: Delta Lake Documentation on Table Partitioning: Optimizing Layout with Partitioning
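The granularity argument can be illustrated by counting how many partitions each candidate column would produce. This is a rough sketch: the column names come from the answer options, while the sample rows and the helper `partition_sizes` are hypothetical.

```python
from collections import Counter


def partition_sizes(rows, column):
    """Count rows per distinct value, i.e. rows per partition for that column."""
    return Counter(row[column] for row in rows)


# Six invented posts spread over two days.
rows = [
    {"user_id": 1, "post_id": 101, "post_time": "2024-01-01T09:15:00", "date": "2024-01-01"},
    {"user_id": 2, "post_id": 102, "post_time": "2024-01-01T10:30:05", "date": "2024-01-01"},
    {"user_id": 1, "post_id": 103, "post_time": "2024-01-01T18:45:10", "date": "2024-01-01"},
    {"user_id": 3, "post_id": 104, "post_time": "2024-01-02T08:00:00", "date": "2024-01-02"},
    {"user_id": 2, "post_id": 105, "post_time": "2024-01-02T12:20:30", "date": "2024-01-02"},
    {"user_id": 1, "post_id": 106, "post_time": "2024-01-02T23:59:59", "date": "2024-01-02"},
]

by_date = partition_sizes(rows, "date")
by_post_id = partition_sizes(rows, "post_id")
by_post_time = partition_sizes(rows, "post_time")
```

Here `date` yields two evenly sized partitions, while `post_id` and `post_time` yield one partition per row, which is the many-small-partitions pattern the explanation warns against.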

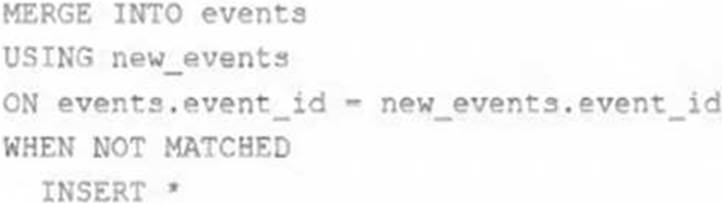

A junior data engineer on your team has implemented the following code block.

The view new_events contains a batch of records with the same schema as the events Delta table.

The event_id field serves as a unique key for this table.

When this query is executed, what will happen with new records that have the same event_id as an existing record?

- A . They are merged.

- B . They are ignored.

- C . They are updated.

- D . They are inserted.

- E . They are deleted.

B

Explanation:

B is correct when the statement is an insert-only merge, which is the behavior described in the MERGE INTO documentation quoted below. A MERGE INTO statement that has only a WHEN NOT MATCHED THEN INSERT clause inserts source rows from new_events whose event_id has no match in events. Source rows that do match an existing event_id trigger no action, so the existing rows are left unchanged and the new records are ignored. A plain INSERT INTO would behave differently: it appends every row without checking event_id, so matching records would be inserted as duplicates rather than ignored.
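Insert-only merge semantics can be emulated with SQLite from Python's standard library. This is a sketch under stated assumptions: SQLite has no MERGE INTO, so a NOT EXISTS filter stands in for the WHEN NOT MATCHED THEN INSERT clause, and the `payload` column and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (event_id INTEGER, payload TEXT);
    CREATE TABLE new_events (event_id INTEGER, payload TEXT);

    INSERT INTO events VALUES (1, 'original');
    INSERT INTO new_events VALUES (1, 'changed'), (2, 'new');
    """
)

# Insert only the source rows whose event_id is not already in the target.
conn.execute(
    """
    INSERT INTO events
    SELECT n.event_id, n.payload
    FROM new_events AS n
    WHERE NOT EXISTS (
        SELECT 1 FROM events AS e WHERE e.event_id = n.event_id
    )
    """
)

rows = conn.execute(
    "SELECT event_id, payload FROM events ORDER BY event_id"
).fetchall()
```

Event 1 keeps its original payload because the matching source row is skipped, and event 2 is inserted because it had no match.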

Verified Reference: [Databricks Certified Data Engineer Professional], under “Delta Lake” section; Databricks Documentation, under “Append data using INSERT INTO” section.

"If none of the WHEN MATCHED conditions evaluate to true for a source and target row pair that matches the merge_condition, then the target row is left unchanged." https://docs.databricks.com/en/sql/language-manual/delta-merge-into.html#:~:text=If%20none%20of%20the%20WHEN%20MATCHED%20conditions%20evaluate%20to%20true%20for%20a%20source%20and%20target%20row%20pair%20that%20matches%20the%20merge_condition%2C%20then%20the%20target%20row%20is%20left%20unchanged.

The data governance team has instituted a requirement that all tables containing Personal Identifiable Information (PII) must be clearly annotated. This includes adding column comments, table comments, and setting the custom table property "contains_pii" = true.

The following SQL DDL statement is executed to create a new table:

Which command allows manual confirmation that these three requirements have been met?

- A . DESCRIBE EXTENDED dev.pii_test

- B . DESCRIBE DETAIL dev.pii_test

- C . SHOW TBLPROPERTIES dev.pii_test

- D . DESCRIBE HISTORY dev.pii_test

- E . SHOW TABLES dev

A

Explanation:

A is correct because DESCRIBE EXTENDED lets all three requirements be confirmed from a single command. The requirements are that tables containing Personal Identifiable Information (PII) carry column comments, a table comment, and the custom table property “contains_pii” = true. DESCRIBE EXTENDED displays detailed information about a table, including its schema with column comments, its location, its table properties, and its table comment. Running it against dev.pii_test therefore shows whether the table was created with the column comments, table comment, and custom table property specified in the SQL DDL statement.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Lakehouse” section; Databricks Documentation, under “DESCRIBE EXTENDED” section.

A small company based in the United States has recently contracted a consulting firm in India to implement several new data engineering pipelines to power artificial intelligence applications. All the company’s data is stored in regional cloud storage in the United States.

The workspace administrator at the company is uncertain about where the Databricks workspace used by the contractors should be deployed.

Assuming that all data governance considerations are accounted for, which statement accurately informs this decision?

- A . Databricks runs HDFS on cloud volume storage; as such, cloud virtual machines must be deployed in the region where the data is stored.

- B . Databricks workspaces do not rely on any regional infrastructure; as such, the decision should be made based upon what is most convenient for the workspace administrator.

- C . Cross-region reads and writes can incur significant costs and latency; whenever possible, compute should be deployed in the same region the data is stored.

- D . Databricks leverages user workstations as the driver during interactive development; as such, users should always use a workspace deployed in a region they are physically near.

- E . Databricks notebooks send all executable code from the user’s browser to virtual machines over the open internet; whenever possible, choosing a workspace region near the end users is the most secure.

C

Explanation:

C is correct. The contractors are based in India, while all the company’s data is stored in regional cloud storage in the United States. When choosing a region for a Databricks workspace, proximity of compute to the data sources and sinks is an important factor: cross-region reads and writes can incur significant latency and data transfer fees. Whenever possible, compute should therefore be deployed in the same region where the data is stored, to optimize performance and reduce costs.

Verified Reference: [Databricks Certified Data Engineer Professional], under “Databricks Workspace” section; Databricks Documentation, under “Choose a region” section.