Practice Free SPLK-2002 Exam Online Questions

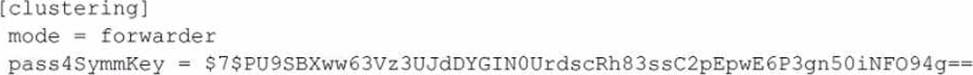

Which of the following server.conf stanzas indicates that the Indexer Discovery feature has not been fully configured (restart pending) on the master node?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

A

Explanation:

The Indexer Discovery feature lets forwarders obtain the list of available peer nodes in an indexer cluster from the manager node and connect to them dynamically. To use this feature, the manager node must be configured with the [indexer_discovery] stanza in server.conf and a pass4SymmKey value. The forwarders must also be configured with the same pass4SymmKey value and the master_uri of the manager node. When Splunk restarts, it encrypts any plaintext pass4SymmKey value in server.conf, so a value that is still in plaintext shows that the stanza has been edited but the instance has not yet been restarted. Therefore, option A indicates that the Indexer Discovery feature has not been fully configured on the manager node, because its pass4SymmKey value is not encrypted. The other options do not indicate a pending Indexer Discovery configuration on the manager node.

Option B shows the configuration of a forwarder that is part of an indexer cluster.

Option C shows the configuration of a manager node that is part of an indexer cluster.

Option D shows an invalid configuration of the [indexer_discovery] stanza, because the pass4SymmKey value is not encrypted and does not match the forwarders' pass4SymmKey value [1][2].

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Indexer/indexerdiscovery

2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Security/Secureyourconfigurationfiles#Encrypt_the_pass4SymmKey_setting_in_server.conf
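The "restart pending" reasoning can be checked mechanically. The sketch below is a minimal Python illustration, not a Splunk tool: it parses server.conf text with the standard `configparser` module and flags an `[indexer_discovery]` pass4SymmKey that does not yet carry an encrypted-value prefix. The prefixes `$1$` and `$7$` are an assumption about how Splunk marks encrypted secrets, and the sample value `my_secret` is hypothetical.

```python
import configparser

# Assumption: Splunk marks encrypted secrets with one of these prefixes
# ($7$ on recent releases, $1$ on older ones).
ENCRYPTED_PREFIXES = ("$1$", "$7$")


def indexer_discovery_restart_pending(server_conf_text: str) -> bool:
    """Return True if [indexer_discovery] still holds a plaintext pass4SymmKey."""
    # interpolation=None keeps '$' and '%' characters in values untouched.
    parser = configparser.ConfigParser(interpolation=None)
    # Preserve key case: Splunk setting names are case-sensitive.
    parser.optionxform = str
    parser.read_string(server_conf_text)

    if not parser.has_section("indexer_discovery"):
        return False  # the feature is not configured at all
    key = parser.get("indexer_discovery", "pass4SymmKey", fallback="").strip()
    # A non-empty value without an encrypted prefix has not yet been
    # encrypted by a restart.
    return bool(key) and not key.startswith(ENCRYPTED_PREFIXES)


plaintext_conf = """
[indexer_discovery]
pass4SymmKey = my_secret
"""
print(indexer_discovery_restart_pending(plaintext_conf))  # → True
```

Running the same check against a stanza whose value starts with `$7$` returns False, which corresponds to a manager node that has already been restarted.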

When adding or rejoining a member to a search head cluster, the following error is displayed: "Error pulling configurations from the search head cluster captain; consider performing a destructive configuration resync on this search head cluster member."

What corrective action should be taken?

- A . Restart the search head.

- B . Run the splunk apply shcluster-bundle command from the deployer.

- C . Run the clean raft command on all members of the search head cluster.

- D . Run the splunk resync shcluster-replicated-config command on this member.

D

Explanation:

This error means that the member cannot bring its replicated configuration back in sync with the captain by pulling incremental changes, so a destructive resync is required.

The corrective action is to run the splunk resync shcluster-replicated-config command on this member. This command discards the member's current replicated configuration and replaces it with the latest replicated configuration from the captain, so the member ends up with the same configuration as the rest of the cluster. Any local changes on the member that were never replicated are lost, which is why the resync is called destructive. The other actions do not address the problem: restarting the search head does not repair the diverged configuration, splunk apply shcluster-bundle distributes the deployer's configuration bundle rather than the replicated configuration, and running the clean raft command on all members resets the cluster's Raft state rather than the member's configuration. For more information, see Resolve configuration inconsistencies across cluster members in the Splunk documentation.

Which Splunk Enterprise offering has its own license?

- A . Splunk Cloud Forwarder

- B . Splunk Heavy Forwarder

- C . Splunk Universal Forwarder

- D . Splunk Forwarder Management

C

Explanation:

The Splunk Universal Forwarder is the only offering listed that has its own license. It includes a built-in forwarder license, so it can send data to any Splunk Enterprise or Splunk Cloud instance without consuming any license quota itself; the data is metered when it is indexed. The Splunk Heavy Forwarder does not have its own license: it is a full Splunk Enterprise instance configured to forward data, and the data it sends counts against the license quota of the Splunk Enterprise or Splunk Cloud deployment that indexes it. Splunk Forwarder Management is the deployment server's interface in Splunk Web, and "Splunk Cloud Forwarder" is not a separate Splunk Enterprise offering, so neither has a license of its own. For more information, see About forwarder licensing in the Splunk documentation.

Which command should be run to re-sync a stale KV Store member in a search head cluster?

- A . splunk clean kvstore -local

- B . splunk resync kvstore -remote

- C . splunk resync kvstore -local

- D . splunk clean eventdata -local

A

Explanation:

To resync a stale KV Store member in a search head cluster, stop the search head that has the stale KV Store member, run the command splunk clean kvstore -local, and then restart the search head. This triggers the initial synchronization from the other KV Store members [1][2].

The command splunk resync kvstore [-source sourceId] resyncs the entire KV Store cluster from one of the members, not a single member, and it can only be run on the node that is operating as the search head cluster captain [2].

The command splunk clean eventdata -local deletes all indexed data from a standalone indexer or a cluster peer node; it does not resync the KV Store [3].

Reference: 1: How to resolve error on a search head member in the search head cluster …

2: Resync the KV store – Splunk Documentation

3: Delete indexed data – Splunk Documentation
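The three-step procedure can be sketched as an ordered list of CLI invocations. This is a minimal illustration that only prints the commands and does not execute anything; the installation path `/opt/splunk/bin/splunk` is an assumption, the helper name is hypothetical, and the `-local` flag spelling follows option A above.

```python
import shlex

# Assumption: default *nix installation path; adjust for your host.
SPLUNK_BIN = "/opt/splunk/bin/splunk"


def kvstore_member_resync_plan(splunk_bin: str = SPLUNK_BIN) -> list[list[str]]:
    """Return the CLI steps for resyncing one stale KV Store member.

    Hypothetical helper. The steps run on the stale member itself,
    not on the captain.
    """
    return [
        [splunk_bin, "stop"],                        # 1. stop the stale member
        [splunk_bin, "clean", "kvstore", "-local"],  # 2. remove its local KV Store data
        [splunk_bin, "start"],                       # 3. restart; initial sync follows
    ]


for step in kvstore_member_resync_plan():
    print(shlex.join(step))
```

Keeping the plan as data rather than shelling out makes it easy to review before anyone runs the destructive clean step.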

Which of the following items are important sizing parameters when architecting a Splunk environment? (select all that apply)

- A . Number of concurrent users.

- B . Volume of incoming data.

- C . Existence of premium apps.

- D . Number of indexes.

ABC

Explanation:

Number of concurrent users: This is an important factor because it affects the search performance and resource utilization of the Splunk environment. More users mean more concurrent searches, which require more CPU, memory, and disk I/O. The number of concurrent users also determines the search head capacity and the search head clustering configuration [1][2].

Volume of incoming data: This is another crucial factor because it affects the indexing performance and storage requirements of the Splunk environment. More data means more indexing throughput, which requires more CPU, memory, and disk I/O. The volume of incoming data also determines the indexer capacity and the indexer clustering configuration [1][3].

Existence of premium apps: This is a relevant factor because some premium apps, such as Splunk Enterprise Security and Splunk IT Service Intelligence, have additional requirements and recommendations for the Splunk environment. For example, Splunk Enterprise Security requires a dedicated search head or search head cluster and a minimum of 12 CPU cores per search head. Splunk IT Service Intelligence requires a minimum of 16 CPU cores and 64 GB of RAM per search head [4][5].

The number of indexes, by contrast, is not a primary sizing parameter: hardware requirements depend on how much data is indexed and searched, not on how that data is divided among indexes.

Reference: 1: Splunk Validated Architectures

2: Search head capacity planning

3: Indexer capacity planning

4: Splunk Enterprise Security Hardware and Software Requirements

5: Splunk IT Service Intelligence Hardware and Software Requirements
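The link between concurrent users and search head capacity can be made concrete with the concurrency limit formula from limits.conf: `max_searches_per_cpu × number_of_cpus + base_max_searches`, with defaults of 1 and 6. The sketch below assumes those defaults; `search_heads_needed` and its "users × searches per user" demand model are hypothetical simplifications that ignore scheduled searches, real-time searches, and premium-app overhead.

```python
def max_historical_searches(num_cpus: int,
                            max_searches_per_cpu: int = 1,
                            base_max_searches: int = 6) -> int:
    """Concurrent historical search limit on one search head.

    Mirrors the limits.conf [search] formula:
    max_searches_per_cpu * number_of_cpus + base_max_searches.
    """
    return max_searches_per_cpu * num_cpus + base_max_searches


def search_heads_needed(concurrent_users: int, searches_per_user: int,
                        cpus_per_search_head: int) -> int:
    """Hypothetical helper: search heads required for peak search demand."""
    demand = concurrent_users * searches_per_user
    per_head = max_historical_searches(cpus_per_search_head)
    return -(-demand // per_head)  # ceiling division


print(max_historical_searches(16))       # → 22 with default settings
print(search_heads_needed(40, 2, 16))    # → 4
```

Doubling the number of users doubles the demand term, which is why user count is treated as a first-class sizing input alongside data volume.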

An index has large text log entries with many unique terms in the raw data. Other than the raw data, which index components will take the most space?

- A . Index files (*.tsidx files).

- B . Bloom filters (bloomfilter files).

- C . Index source metadata (sources.data files).

- D . Index sourcetype metadata (SourceTypes.data files).

A

Explanation:

Index files (.tsidx files) hold the inverted index for each bucket: a lexicon of the unique terms in the raw data plus posting lists that point to the events containing each term in the compressed rawdata journal. Because the lexicon grows with the number of unique terms, the .tsidx files take the most space after the raw data itself, especially when the raw data has many unique terms. Bloom filters, source metadata, and sourcetype metadata are much smaller in comparison.

Reference: How the indexer stores indexes

Splunk Enterprise Certified Architect Study Guide, page 17
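A quick storage estimate shows why the index files dominate. The sketch assumes the commonly cited rule of thumb that compressed rawdata is about 15% of incoming data and .tsidx files about 35%; these are approximations, not guarantees. The higher `tsidx_ratio=0.6` used for the many-unique-terms case is a hypothetical value chosen only to illustrate the effect of a larger lexicon.

```python
# Rule-of-thumb ratios (assumption): compressed rawdata is about 15% of the
# incoming data and the index files (.tsidx) about 35%.
RAWDATA_RATIO = 0.15
TSIDX_RATIO = 0.35


def estimate_index_storage_gb(daily_ingest_gb: float, retention_days: int,
                              tsidx_ratio: float = TSIDX_RATIO,
                              rawdata_ratio: float = RAWDATA_RATIO) -> dict[str, float]:
    """Estimate on-disk size of rawdata and .tsidx files for one index copy."""
    ingested = daily_ingest_gb * retention_days
    return {
        "rawdata_gb": ingested * rawdata_ratio,
        "tsidx_gb": ingested * tsidx_ratio,
    }


typical = estimate_index_storage_gb(100, 30)
# Hypothetical high-cardinality case: many unique terms inflate the lexicon.
high_cardinality = estimate_index_storage_gb(100, 30, tsidx_ratio=0.6)
print(typical)
print(high_cardinality)
```

Even with the default ratios the .tsidx share is more than twice the compressed rawdata share, and it is the only component in this model that grows with term cardinality.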

Users who receive a link to a search are receiving an "Unknown sid" error message when they open the link.

Why is this happening?

- A . The users have insufficient permissions.

- B . An add-on needs to be updated.

- C . The search job has expired.

- D . One or more indexers are down.

C

Explanation:

According to the Splunk documentation [1], the “Unknown sid” error message means that the search job associated with the link has expired or been deleted. The sid (search ID) is a unique identifier for each search job, and it is used to retrieve the results of the search. If the sid is not found, the search cannot be displayed.

The other options are false because:

The users having insufficient permissions would result in a different error message, such as “You do not have permission to view this page” or “You do not have permission to run this search” [1].

An add-on needing to be updated would not affect the validity of the sid, unless the add-on changes the search syntax or the data source in a way that makes the search invalid or inaccessible [1].

One or more indexers being down would not cause the “Unknown sid” error, because the search job and its artifacts are stored in the dispatch directory on the search head, not on the indexers. However, it could cause other errors, such as “Unable to distribute to peer” or “Search peer has the following message: not enough disk space” [1].
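Whether a shared link still resolves comes down to the job's time-to-live. The sketch below is a simplified model, assuming a 600-second default TTL for ad hoc search artifacts (limits.conf `[search] ttl`) counted from the job's last access; real lifetimes depend on the job type and any "extend job lifetime" action. The helper name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: ad hoc search artifacts are kept for 600 seconds by default
# (limits.conf [search] ttl); saved or shared jobs can live much longer.
DEFAULT_JOB_TTL_S = 600


def sid_resolvable(last_accessed: datetime, now: datetime,
                   ttl_s: int = DEFAULT_JOB_TTL_S) -> bool:
    """True while the job's artifacts should still exist on the search head."""
    return now - last_accessed < timedelta(seconds=ttl_s)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(sid_resolvable(now - timedelta(minutes=5), now))   # → True: link still works
print(sid_resolvable(now - timedelta(minutes=15), now))  # → False: "Unknown sid"
```

Extending the TTL (for example to seven days) before sharing the link is the modeled equivalent of keeping the job alive for its recipients.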

To activate replication for an index in an indexer cluster, what attribute must be configured in indexes.conf on all peer nodes?

- A . repFactor = 0

- B . replicate = 0

- C . repFactor = auto

- D . replicate = auto

C

Explanation:

To activate replication for an index in an indexer cluster, set repFactor = auto in that index's stanza in indexes.conf on all peer nodes. With repFactor = auto, the index's buckets are replicated according to the cluster's replication factor, which is configured on the manager node. The default value, repFactor = 0, means the index is not replicated, so option A leaves replication off. There is no replicate attribute in indexes.conf, so options B and D are not valid settings. For more information, see Configure indexes for indexer clusters in the Splunk documentation.
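The practical effect of `repFactor` shows up in disk usage. This sketch assumes the same 15% rawdata / 35% .tsidx rule of thumb and the usual cluster model in which every copy holds rawdata but only searchable copies also hold .tsidx files. The function is a hypothetical helper, and it treats `repFactor = 0` as a single local copy.

```python
# Rule-of-thumb ratios (assumption): rawdata ~15% and .tsidx ~35% of ingest.
RAWDATA_RATIO = 0.15
TSIDX_RATIO = 0.35


def clustered_index_storage_gb(daily_ingest_gb: float, retention_days: int,
                               rep_factor_setting: str,
                               cluster_rf: int, cluster_sf: int) -> float:
    """Total disk across all peers for one index in an indexer cluster.

    rep_factor_setting mirrors indexes.conf repFactor: "auto" uses the
    cluster's replication and search factors; "0" keeps a single copy.
    """
    if rep_factor_setting == "auto":
        if cluster_sf > cluster_rf:
            raise ValueError("search factor cannot exceed replication factor")
        copies, searchable = cluster_rf, cluster_sf
    elif rep_factor_setting == "0":
        copies, searchable = 1, 1
    else:
        raise ValueError("repFactor must be 'auto' or '0'")

    ingested = daily_ingest_gb * retention_days
    # Every copy holds rawdata; only searchable copies also hold .tsidx files.
    return ingested * (copies * RAWDATA_RATIO + searchable * TSIDX_RATIO)


print(clustered_index_storage_gb(100, 30, "auto", cluster_rf=3, cluster_sf=2))
print(clustered_index_storage_gb(100, 30, "0", cluster_rf=3, cluster_sf=2))
```

With a replication factor of 3 and a search factor of 2, turning replication on for this index more than doubles its footprint, which is worth checking before setting `repFactor = auto` everywhere.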

Which of the following use cases would be made possible by multi-site clustering? (select all that apply)

- A . Use blockchain technology to audit search activity from geographically dispersed data centers.

- B . Enable a forwarder to send data to multiple indexers.

- C . Greatly reduce WAN traffic by preferentially searching assigned site (search affinity).

- D . Seamlessly route searches to a redundant site in case of a site failure.

CD

Explanation:

According to the Splunk documentation [1], multi-site clustering is an indexer cluster that spans multiple physical sites, such as data centers. Each site has its own set of peer nodes and search heads. Each site also obeys site-specific replication and search factor rules. The use cases that are made possible by multi-site clustering are:

Greatly reduce WAN traffic by preferentially searching assigned site (search affinity). This means that if you configure each site so that it has both a search head and a full set of searchable data, the search head on each site will limit its searches to local peer nodes. This eliminates any need, under normal conditions, for search heads to access data on other sites, greatly reducing network traffic between sites [2].

Seamlessly route searches to a redundant site in case of a site failure. By storing copies of your data at multiple locations, you maintain access to the data if a disaster strikes at one location. Multisite clusters provide site failover capability: if a site goes down, indexing and searching can continue on the remaining sites, without interruption or loss of data [2].

The other options are false because:

Use blockchain technology to audit search activity from geographically dispersed data centers. This is not a use case of multi-site clustering, as Splunk does not use blockchain technology to audit search activity. Splunk uses its own internal logs and metrics to monitor and audit search activity [3].

Enable a forwarder to send data to multiple indexers. This is not a use case of multi-site clustering, as forwarders can send data to multiple indexers regardless of whether they are in a single-site or multi-site cluster. This is a basic feature of forwarders that allows load balancing and high availability of data ingestion [4].
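Search affinity and site failover can be illustrated with a toy model: for each bucket, pick a searchable copy, preferring one on the search head's own site and skipping sites that are down. This is a simplified sketch, not Splunk's actual primary-assignment logic; the bucket, site, and peer names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BucketCopy:
    bucket: str
    site: str
    peer: str
    searchable: bool


def pick_search_targets(copies, search_head_site, down_sites=frozenset()):
    """Pick one searchable copy per bucket, preferring the search head's site.

    Simplified model of search affinity and site failover.
    """
    chosen = {}
    for candidate in copies:
        if not candidate.searchable or candidate.site in down_sites:
            continue  # unusable copy
        current = chosen.get(candidate.bucket)
        # Take the first usable copy, then switch to a local one if found.
        if current is None or (current.site != search_head_site
                               and candidate.site == search_head_site):
            chosen[candidate.bucket] = candidate
    return {bucket: c.peer for bucket, c in chosen.items()}


copies = [
    BucketCopy("b1", "site1", "idx-a", True),
    BucketCopy("b1", "site2", "idx-c", True),
    BucketCopy("b2", "site2", "idx-d", True),
    BucketCopy("b2", "site1", "idx-b", True),
]
print(pick_search_targets(copies, "site1"))                        # all local
print(pick_search_targets(copies, "site1", down_sites={"site1"}))  # failover
```

In normal operation the site1 search head reads only from site1 peers (no WAN traffic); when site1 is marked down, the same buckets are served from site2.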

What is needed to ensure that high-velocity sources will not have forwarding delays to the indexers?

- A . Increase the default value of sessionTimeout in server.conf.

- B . Increase the default limit for maxKBps in limits.conf.

- C . Decrease the value of forceTimebasedAutoLB in outputs.conf.

- D . Decrease the default value of phoneHomeIntervalInSecs in deploymentclient.conf.

B

Explanation:

To ensure that high-velocity sources do not have forwarding delays to the indexers, increase the maxKBps limit in the [thruput] stanza of limits.conf on the forwarder. This setting caps the throughput, in kilobytes per second, that the forwarder uses to send data to the indexers. On a universal forwarder the default is 256 KBps, which may not be sufficient for high-volume data sources; a value of 0 removes the limit. Raising the limit can reduce forwarding latency, but do it with caution, because it increases the load on the network and on the indexers.

Option B is the correct answer.

Option A is incorrect because the sessionTimeout setting in server.conf controls how long a user session can stay inactive before it times out, not the bandwidth limit.

Option C is incorrect because forceTimebasedAutoLB in outputs.conf is a boolean that forces the forwarder to switch to a new indexer every autoLBFrequency seconds, even in the middle of a data stream; it affects load balancing, not the bandwidth limit.

Option D is incorrect because the phoneHomeIntervalInSecs setting in deploymentclient.conf controls how often a forwarder contacts the deployment server, not the bandwidth limit [1][2].

1: https://docs.splunk.com/Documentation/Splunk/9.1.2/Admin/Limitsconf#limits.conf.spec

2: https://docs.splunk.com/Documentation/Splunk/9.1.2/Forwarding/Routeandfilterdatad#Set_the_maximum_bandwidth_usage_for_a_forwarder
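Why a low maxKBps delays a busy source can be shown with simple arithmetic: whatever the source produces above the cap accumulates on the forwarder. The sketch is a simplified model that ignores queue sizes, network limits, and indexer back-pressure; the 1000 KB/s source rate and the 2048 cap are hypothetical values, while 256 is the universal forwarder default described above and 0 means no limit.

```python
def forwarder_backlog_kb(source_rate_kbps: float, max_kbps: float,
                         seconds: float) -> float:
    """KB queued on the forwarder after `seconds` of steady input.

    max_kbps mirrors limits.conf [thruput] maxKBps; 0 means no limit.
    Simplified model: ignores queue sizes, network and indexer limits.
    """
    if max_kbps == 0:
        return 0.0
    return max(0.0, float(source_rate_kbps - max_kbps) * seconds)


# A hypothetical source producing 1000 KB/s for one minute:
print(forwarder_backlog_kb(1000, 256, 60))   # → 44640.0 (default UF cap)
print(forwarder_backlog_kb(1000, 2048, 60))  # → 0.0 (raised cap)
```

The backlog grows linearly for as long as the source outpaces the cap, which is the forwarding delay the question describes.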